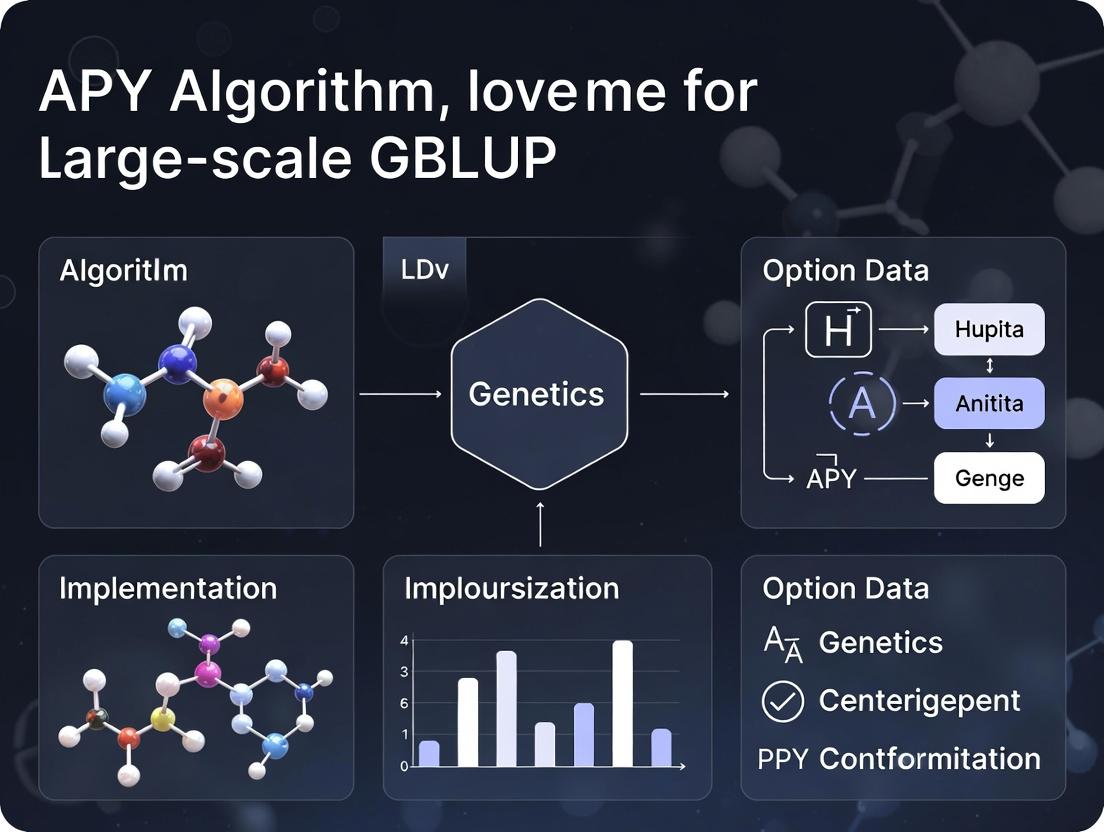

APY Algorithm for Large-Scale GBLUP: Efficient Genomic Prediction for Big Data in Biomedical Research


Abstract

This article provides a comprehensive guide to the Algorithm for Proven and Young (APY) animals for implementing large-scale Genomic Best Linear Unbiased Prediction (GBLUP). Targeted at researchers and drug development professionals, it covers the foundational theory of GBLUP and the computational bottleneck that arises as genotyped populations grow. It details the step-by-step methodology of the APY algorithm, which partitions the genomic relationship matrix so that the cost of inverting it falls from cubic in the total number of genotyped individuals to cubic in the much smaller core size plus linear in the number of non-core individuals. The article addresses common implementation challenges and optimization strategies for real-world datasets, and presents validation studies comparing the predictive accuracy and computational efficiency of APY-GBLUP with standard GBLUP and other methods. It is intended as a practical resource for cost-effective genomic selection and prediction in large-scale biomedical and clinical genomics projects.

Understanding the GBLUP Bottleneck: Why Large-Scale Genomic Prediction Needs APY

Genomic Best Linear Unbiased Prediction (GBLUP) has become a standard method for predicting breeding values and complex traits in animals, plants, and human genetics. Its core is the Genomic Relationship Matrix (G), which quantifies genetic similarities between individuals using dense molecular markers. This document provides detailed application notes and protocols for implementing GBLUP, framed within a research thesis investigating the Algorithm for Proven and Young (APY) for large-scale genomic prediction. The APY algorithm approximates the inverse of a large genomic relationship matrix through a core subset of individuals, producing a sparse inverse that makes genome-wide prediction feasible for millions of genotyped individuals.

Core Mathematical Framework

The GBLUP model is represented as $y = X\beta + Zg + e$, where y is the vector of phenotypic observations, X is the design matrix for fixed effects (β), Z is the design matrix relating individuals to observations, g is the vector of genomic breeding values with $g \sim N(0, G\sigma^2_g)$, and e is the residual with $e \sim N(0, I\sigma^2_e)$.

The Genomic Relationship Matrix (G) is central. The standard method of VanRaden (2008) calculates G as $G = \frac{(M - P)(M - P)'}{2\sum_j p_j(1 - p_j)}$, where M is an n × m matrix of marker genotypes (coded 0, 1, 2), P is an n × m matrix whose column j contains $2p_j$ (twice the allele frequency of marker j), and the denominator scales the matrix to be analogous to a pedigree-based relationship matrix.

Table 1: Common Methods for Constructing the Genomic Relationship Matrix (G)

| Method Name | Key Formula | Scaling Factor | Primary Use Case | Key Assumption |
|---|---|---|---|---|
| VanRaden (Method 1) | G = WW' / k, W_ij = M_ij - 2p_j | k = 2∑p_j(1 - p_j) | General genomic prediction | Alleles are weighted by their frequency. |
| VanRaden (Method 2) | G = WDW' / m, W_ij = M_ij - 2p_j, D_jj = 1 / [2p_j(1 - p_j)] | m (number of markers) | Mitigating upward bias in G | All markers explain equal genetic variance. |
| Endpoint-Centric | G = ZZ' / m, Z_ij = (M_ij - 2p_j) / √(2p_j(1 - p_j)) | m (number of markers) | Standardizing marker variance | Each marker contributes equally to variance. |

Protocol 1: Constructing the Genomic Relationship Matrix (G)

Materials & Software

- Genotype data in PLINK (.bed/.bim/.fam), VCF, or numeric matrix format.

- Computing environment (R, Python, or standalone software like preGSf90).

- Sufficient RAM (≥ 16 GB for n > 10,000).

Procedure

- Data QC: Filter markers for call rate (>95%), minor allele frequency (MAF > 0.01), and remove individuals with excessive missing genotypes (>10%).

- Allele Coding: Code genotypes as 0 (homozygous major), 1 (heterozygous), 2 (homozygous minor).

- Calculate Allele Frequencies: Compute the frequency p_j of the minor allele for each marker j across all individuals.

- Center the Matrix: Create matrix W where W_ij = M_ij - 2p_j.

- Compute the Scaling Factor: k = 2 ∑_{j=1}^m p_j(1-p_j).

- Calculate G: Compute the matrix product G = WW' / k (a matrix multiplication, not an element-wise product). Use optimized BLAS libraries for large n.
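The procedure above can be sketched in NumPy as follows. The sketch assumes a complete 0/1/2 genotype matrix (no missing calls) that fits in memory, with allele frequencies estimated from the same individuals. The name `vanraden_g` is hypothetical and the genotypes are simulated.

```python
import numpy as np


def vanraden_g(M):
    """VanRaden (2008) Method 1 genomic relationship matrix, G = WW'/k.

    M is an n x m genotype matrix coded 0/1/2 with no missing calls (assumption).
    """
    M = np.asarray(M, dtype=float)
    p = M.mean(axis=0) / 2.0               # frequency of the counted allele per marker
    W = M - 2.0 * p                        # centering: W_ij = M_ij - 2 p_j
    k = 2.0 * np.sum(p * (1.0 - p))        # scaling factor k = 2 * sum_j p_j (1 - p_j)
    return (W @ W.T) / k                   # matrix product; NumPy dispatches it to BLAS


# Hypothetical example: 50 individuals, 1,000 simulated markers.
rng = np.random.default_rng(1)
M = rng.binomial(2, 0.3, size=(50, 1000))
G = vanraden_g(M)
print(G.shape, round(float(np.mean(np.diag(G))), 3))
```

W is column-centered with frequencies estimated from the same individuals, so every row of G sums to zero and G is singular. For this reason G is commonly blended with a small multiple of the identity (or with a pedigree matrix) before inversion.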

Table 2: Computational Requirements for Constructing G

| Number of Individuals (n) | Number of Markers (m) | Approx. RAM Needed (Double Precision) | Recommended Tool |
|---|---|---|---|
| 1,000 | 50,000 | 8 MB for G | R matrix, Python numpy |
| 10,000 | 500,000 | 800 MB for G | R bigmemory, Python with numpy |
| 100,000 | 50,000 | 80 GB for full G | APY algorithm, preGSf90, BLUPF90 |
| 1,000,000 | 50,000 | 8 TB (full G) | APY algorithm is mandatory |
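The RAM column follows from n² × 8 bytes for a dense double-precision matrix. The helper below (a hypothetical name) reproduces those figures. It covers storage of one matrix only, so holding G and its inverse at the same time doubles the requirement.

```python
def dense_matrix_gb(n, bytes_per_element=8):
    """Decimal gigabytes needed to store a dense n x n matrix (8 bytes = float64)."""
    return n * n * bytes_per_element / 1e9


for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"n = {n:>9,}  dense G: {dense_matrix_gb(n):>10,.3f} GB")
```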

Protocol 2: Solving the GBLUP Mixed Model Equations

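The mixed model equations (MME) are solved to obtain estimates of β and predictions of g:

$$\begin{bmatrix} X'X & X'Z \\ Z'X & Z'Z + \lambda G^{-1} \end{bmatrix} \begin{bmatrix} \hat{\beta} \\ \hat{g} \end{bmatrix} = \begin{bmatrix} X'y \\ Z'y \end{bmatrix}$$

where $\lambda = \sigma^2_e / \sigma^2_g$.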

Procedure

- Variance Component Estimation: Use REML (Restricted Maximum Likelihood) via AI-REML or EM-REML algorithms to estimate σ²g and σ²e.

- Formulate MME: Construct the left-hand side (LHS) and right-hand side (RHS) matrices.

- Invert G: Compute G⁻¹. For n > ~20,000, direct inversion becomes impractical on typical hardware. Apply the APY algorithm instead:

- Step 1: Partition individuals into c core and n - c non-core individuals.

- Step 2: Invert the core block directly to obtain G_cc⁻¹.

- Step 3: For each non-core individual i, compute the scalar m_ii = g_ii - g_ic G_cc⁻¹ g_ci, where g_ic holds the relationships of i with the core. The non-core block of the inverse is the diagonal matrix M⁻¹ = diag(1/m_ii), and the core-by-non-core block is -G_cc⁻¹ G_cn M⁻¹.

- Step 4: Assemble the sparse inverse G_APY⁻¹, whose core block is G_cc⁻¹ + G_cc⁻¹ G_cn M⁻¹ G_nc G_cc⁻¹.

- Solve MME: Use iterative solvers (e.g., Preconditioned Conjugate Gradient) with G_APY⁻¹ to obtain the solutions ĝ.
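The four APY steps can be sketched in NumPy as a minimal dense illustration for small n. The sketch assumes G is already built and positive definite; in the example this is ensured by blending with 1% identity, which is an assumption made for illustration. The name `apy_inverse` is hypothetical. A large-scale implementation would keep G_cc⁻¹, G_cn and the diagonal of M⁻¹ separately rather than form an n × n matrix.

```python
import numpy as np


def apy_inverse(G, core):
    """Dense APY inverse of G in the original animal order (small-n illustration).

    G    : n x n genomic relationship matrix; G[core, core] must be invertible.
    core : integer indices of the core animals.
    """
    n = G.shape[0]
    core = np.asarray(core)
    noncore = np.setdiff1d(np.arange(n), core)           # Step 1: partition
    Gcn = G[np.ix_(core, noncore)]
    Gcc_inv = np.linalg.inv(G[np.ix_(core, core)])       # Step 2: only a c x c inversion
    P = Gcc_inv @ Gcn                                     # G_cc^-1 G_cn, shape c x (n - c)
    # Step 3: m_ii = g_ii - g_ic G_cc^-1 g_ci, one scalar per non-core animal
    m = G[noncore, noncore] - np.einsum("ij,ij->j", Gcn, P)
    m_inv = 1.0 / m
    # Step 4: assemble the blocks
    Ginv = np.zeros((n, n))
    Ginv[np.ix_(core, core)] = Gcc_inv + (P * m_inv) @ P.T
    Ginv[np.ix_(core, noncore)] = -P * m_inv
    Ginv[np.ix_(noncore, core)] = (-P * m_inv).T
    Ginv[noncore, noncore] = m_inv                        # non-core block is diagonal
    return Ginv


# Hypothetical example: 60 animals, 500 markers, first 20 animals as core.
rng = np.random.default_rng(7)
M = rng.binomial(2, 0.4, size=(60, 500)).astype(float)
p = M.mean(axis=0) / 2.0
W = M - 2.0 * p
G = 0.99 * (W @ W.T) / (2.0 * np.sum(p * (1.0 - p))) + 0.01 * np.eye(60)
core = np.arange(20)
Ginv_apy = apy_inverse(G, core)
```

Inverting the result recovers a matrix G_APY whose core rows and full diagonal match G exactly. Only the relationships among non-core animals are replaced by their core-based approximations.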

Visualizing the APY-GBLUP Workflow and Structure

Diagram 1: APY-GBLUP Computational Workflow

Diagram 2: Matrix Partitioning in the APY Algorithm

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Software & Computational Tools for Large-Scale GBLUP

| Tool Name | Primary Function | Key Feature for Large-n | Link/Reference |
|---|---|---|---|
| BLUPF90+ Suite | Solving Mixed Models (REML, GBLUP) | Implements APY algorithm efficiently. | https://nce.ads.uga.edu/ |
| preGSf90 | Preprocessing for Genomic Analysis | Computes G and G⁻¹ (including APY). | Part of BLUPF90+ suite. |
| MTG2 | REML & Variance Component Estimation | GPU-accelerated for large datasets. | https://sites.google.com/site/honglee0707/mtg2 |
| AlphaDrop | Genomic Prediction Pipeline | Integrates APY, supports multi-trait. | Pook et al. (2020) |
| R rrBLUP | Basic GBLUP & GWAS | User-friendly for small to medium n. | CRAN package. |
| Python pygallup | Genomic Prediction in Python | Offers APY and other inverse methods. | In development / research code. |
| PLINK 2.0 | Genotype Data Management & QC | Extremely fast processing of VCF/BCF. | https://www.cog-genomics.org/plink/2.0/ |

Experimental Protocol: Validating APY-GBLUP Predictions

Objective

To assess the predictive accuracy and computational efficiency of APY-GBLUP compared with traditional direct-inversion GBLUP on a large simulated genotype-phenotype dataset.

Materials

- Simulated genotype data: 500,000 individuals genotyped for 50,000 SNPs.

- Simulated phenotypes for a quantitative trait (h² = 0.3).

- High-performance computing cluster with ≥ 256 GB RAM node.

- Software: BLUPF90+, custom R/Python scripts for analysis.

Procedure

- Data Split: Randomly divide the population into a training set (n_train = 400,000) and a validation set (n_val = 100,000).

- Core Selection: From the training set, select core individuals using:

- Method A: Random selection (c=5,000; 10,000).

- Method B: K-means clustering on a genomic PCA subspace (c=5,000; 10,000).

- Model Fitting:

- Treatments: Fit four models:

- GBLUP_Full: Traditional GBLUP on a random subset (n = 30,000, the maximum feasible for direct inversion).

- GBLUP_APY_R5k: APY-GBLUP with 5,000 random core animals.

- GBLUP_APY_R10k: APY-GBLUP with 10,000 random core animals.

- GBLUP_APY_K10k: APY-GBLUP with 10,000 core animals selected via K-means.

- Evaluation:

- Predictive Accuracy: Calculate the correlation between genomic estimated breeding values (GEBVs) and true simulated breeding values in the validation set.

- Bias: Regress true breeding values on GEBVs. A slope of 1 indicates no bias, a slope below 1 indicates inflated GEBVs, and a slope above 1 indicates deflated GEBVs.

- Compute Time & RAM: Record wall clock time and peak memory usage for constructing G and solving MME.

- Statistical Analysis: Compare accuracy and bias between treatments using paired tests.
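The two evaluation statistics can be computed as shown below. This minimal sketch assumes true and estimated breeding values are aligned arrays for the validation animals. The name `accuracy_and_bias` is hypothetical and the example data are simulated.

```python
import numpy as np


def accuracy_and_bias(tbv, gebv):
    """Return (accuracy, slope) for one validation set.

    accuracy: Pearson correlation between true breeding values (TBV) and GEBVs.
    slope   : b1 from regressing TBV on GEBV (TBV = b0 + b1 * GEBV);
              b1 < 1 suggests inflated GEBVs, b1 > 1 deflated GEBVs.
    """
    tbv = np.asarray(tbv, dtype=float)
    gebv = np.asarray(gebv, dtype=float)
    accuracy = np.corrcoef(tbv, gebv)[0, 1]
    slope = np.cov(tbv, gebv)[0, 1] / np.var(gebv, ddof=1)   # both use ddof=1
    return accuracy, slope


# Hypothetical validation set: noisy GEBVs that are over-dispersed (scaled by 1.2).
rng = np.random.default_rng(11)
tbv = rng.normal(size=1000)
gebv = 1.2 * (tbv + rng.normal(scale=0.5, size=1000))
print(accuracy_and_bias(tbv, gebv))
```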

Table 4: Example Results from a Simulated Validation Study

| Model | Core Selection | Core Size (c) | Predictive Accuracy (r) | Bias (Regression Slope) | Time to Solve MME (hrs) | Peak RAM (GB) |
|---|---|---|---|---|---|---|
| GBLUP_Full | - | 30,000* | 0.712 | 0.98 | 4.2 | 220 |
| GBLUP_APY_R5k | Random | 5,000 | 0.701 | 0.92 | 0.8 | 35 |
| GBLUP_APY_R10k | Random | 10,000 | 0.708 | 0.96 | 1.5 | 62 |
| GBLUP_APY_K10k | K-means | 10,000 | 0.710 | 0.97 | 1.6 | 62 |

*Subset of full training data for direct inversion.

Application Notes

The Core Computational Challenge

In Genomic Best Linear Unbiased Prediction (GBLUP), the central computational bottleneck is the inversion of the Genomic Relationship Matrix (G). The computational complexity of direct inversion scales cubically (O(n³)) with the number of genotyped individuals (n). As populations in breeding programs and human genomics cohorts grow from tens of thousands to millions, this becomes computationally prohibitive in terms of time, memory, and cost.

Table 1: Computational Burden of Direct G⁻¹ Calculation

| Population Size (n) | Approx. RAM Required | Approx. Time for Inversion* | Feasibility on HPC Cluster |
|---|---|---|---|
| 10,000 | 0.8 GB | 1-2 minutes | Trivial |
| 50,000 | 20 GB | 1-2 hours | Manageable |
| 100,000 | 80 GB | 8-10 hours | Challenging |
| 500,000 | 2 TB | 20-30 days | Prohibitive |
| 1,000,000 | 8 TB | ~6 months | Impossible |

*Assumes standard double precision and a theoretical peak of 1 TFLOP/s.

The APY (Algorithm for Proven and Young) Solution

The APY algorithm addresses this bottleneck by partitioning the population into a core group (size c) and a non-core group (size n-c). It rests on the idea that the genomic information in the non-core individuals can be approximated from the core, provided the core is chosen to be genetically representative. This allows an approximate inverse of G to be computed from the inverse of a much smaller core matrix plus a diagonal matrix for the non-core individuals.

Table 2: Key Formulae: Direct vs. APY Inversion

| Method | Formula for G⁻¹ | Computational Complexity | Key Assumption |
|---|---|---|---|
| Direct | G⁻¹ via Cholesky factorization | O(n³) | None |
| APY | Core block: G_cc⁻¹ + G_cc⁻¹ G_cn D⁻¹ G_nc G_cc⁻¹; core/non-core block: -G_cc⁻¹ G_cn D⁻¹; non-core block: D⁻¹, with D = diag(g_ii - g_i' G_cc⁻¹ g_i) and g_i the relationships of non-core animal i with the core | O(c³) + O(c²(n-c)) | Non-core animals are descendants of, or have relationships fully explained by, the core (conditional independence given the core). |
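Written out by blocks, with D as in the table and g_i the vector of relationships between non-core animal i and the core animals, the APY inverse is the core inverse plus a correction term:

$$
G_{APY}^{-1}
= \begin{bmatrix} G_{cc}^{-1} & 0 \\ 0 & 0 \end{bmatrix}
+ \begin{bmatrix} -G_{cc}^{-1} G_{cn} \\ I \end{bmatrix}
  D^{-1}
  \begin{bmatrix} -G_{nc} G_{cc}^{-1} & I \end{bmatrix}
= \begin{bmatrix}
G_{cc}^{-1} + G_{cc}^{-1} G_{cn} D^{-1} G_{nc} G_{cc}^{-1} & -G_{cc}^{-1} G_{cn} D^{-1} \\
-D^{-1} G_{nc} G_{cc}^{-1} & D^{-1}
\end{bmatrix},
\qquad
D = \operatorname{diag}\left(g_{ii} - g_i' G_{cc}^{-1} g_i\right)
$$

Only G_cc⁻¹ (c × c), G_cn and the n - c diagonal elements of D need to be stored. The non-core block G_nn enters only through its diagonal.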

Detailed Experimental Protocols

Protocol for Establishing an Effective Core for APY

Objective: To select a genetically representative core subset that maximizes the efficiency and accuracy of the APY algorithm.

Materials:

- High-density genotype data for the full population (n).

- Computational cluster with sufficient memory to handle the core matrix.

Procedure:

- Define Core Size (c): Determine c based on the effective population size (Ne) and available computational resources. A heuristic is c = 2 * Ne.

- Core Selection Strategies (Choose one):

- Random Selection: Randomly sample c individuals. Serves as a baseline.

- Maximum Relationship (MaxRel): Iteratively select individuals that maximize the average relationship to the remaining pool.

- Principal Component (PC) Based: Perform PCA on the genotype matrix. Use k-means clustering on the first few PCs to form c clusters, and select the individual closest to each cluster centroid.

- Construct Core and Non-core Genotype Matrices: Partition the centered genotype matrix (Z) into Z_core (c × m) and Z_non-core ((n-c) × m).

- Construct G Matrices: Compute G_cc = Z_core Z_core' / k and G_cn = Z_core Z_non-core' / k, where k is a scaling factor (e.g., 2∑p_i(1-p_i)).

- APY Inversion: Implement the APY inversion formula using G_cc, G_cn, and the diagonal of G_nn. The full non-core block G_nn is never needed.

- Validation: Compare genomic estimated breeding values (GEBVs) from the APY model with those from a traditional model on a smaller, tractable dataset.
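The PC-based strategy can be sketched as follows under stated assumptions. PCA is performed with a thin SVD of the centered genotype matrix, and k-means uses plain Lloyd iterations written in NumPy rather than a library routine. "Selecting the cluster centroids" is interpreted as taking the animal closest to each centroid. The function name `kmeans_pc_core` is hypothetical. Because of its pairwise-distance array, the sketch suits only small illustrative data.

```python
import numpy as np


def kmeans_pc_core(M, n_core, n_pcs=10, n_iter=50, seed=0):
    """Select one core animal per k-means cluster in genomic PC space (sketch)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(M, dtype=float)
    X = X - X.mean(axis=0)                               # center each marker
    U, s, _ = np.linalg.svd(X, full_matrices=False)      # PCA via thin SVD
    pcs = U[:, :n_pcs] * s[:n_pcs]                       # PC scores, n x n_pcs
    centers = pcs[rng.choice(len(pcs), size=n_core, replace=False)]
    for _ in range(n_iter):                              # Lloyd iterations
        dist = ((pcs[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for k in range(n_core):
            members = labels == k
            if members.any():                            # keep old centroid if cluster empties
                centers[k] = pcs[members].mean(axis=0)
    dist = ((pcs[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    chosen, taken = [], set()
    for k in range(n_core):
        for idx in np.argsort(dist[:, k]):               # nearest animals first
            if int(idx) not in taken:                    # one distinct animal per centroid
                chosen.append(int(idx))
                taken.add(int(idx))
                break
    return np.array(chosen)


# Hypothetical example: 200 animals, 400 markers, 20 core animals.
rng = np.random.default_rng(5)
M = rng.binomial(2, 0.3, size=(200, 400))
core = kmeans_pc_core(M, n_core=20)
print(sorted(core.tolist()))
```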

Protocol for Benchmarking APY Performance in GBLUP

Objective: To quantitatively assess the computational savings and predictive accuracy of the APY algorithm versus direct inversion.

Workflow:

Diagram Title: APY vs Direct GBLUP Benchmarking Workflow

Procedure:

- Dataset Preparation: Use a dataset of size n where direct inversion is still possible (e.g., n ≤ 50,000) for validation.

- Run APY-GBLUP:

a. Execute Protocol 2.1 to obtain G_APY⁻¹.

b. Set up the mixed model equations:

$$\begin{bmatrix} X'X & X'Z \\ Z'X & Z'Z + \lambda G_{APY}^{-1} \end{bmatrix} \begin{bmatrix} b \\ u \end{bmatrix} = \begin{bmatrix} X'y \\ Z'y \end{bmatrix}$$

c. Solve for the vector of breeding values (u).

- Run Direct GBLUP: Invert the full G matrix directly and solve the same mixed model equations.

- Benchmark Metrics:

- Computational: Record wall-clock time and peak memory usage for the inversion step for both methods.

- Statistical: Calculate the Pearson correlation between GEBVs from the APY and direct methods. A correlation >0.99 indicates minimal accuracy loss.
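The benchmarking steps can be prototyped end to end on simulated data before moving to the real dataset. The sketch assumes one record per animal (Z = I), a single intercept as the only fixed effect, known variance components with h² = 0.3, and a dense direct solve in place of an iterative solver. `apy_inverse` and `solve_gblup` are hypothetical helper names and the data are simulated.

```python
import numpy as np


def apy_inverse(G, core):
    """Dense APY inverse (core block, core/non-core blocks, diagonal non-core block)."""
    n = G.shape[0]
    nc = np.setdiff1d(np.arange(n), core)
    Gcn = G[np.ix_(core, nc)]
    Gcc_inv = np.linalg.inv(G[np.ix_(core, core)])
    P = Gcc_inv @ Gcn                                          # G_cc^-1 G_cn
    m_inv = 1.0 / (G[nc, nc] - np.einsum("ij,ij->j", Gcn, P))  # 1 / m_ii
    Ginv = np.zeros((n, n))
    Ginv[np.ix_(core, core)] = Gcc_inv + (P * m_inv) @ P.T
    Ginv[np.ix_(core, nc)] = -P * m_inv
    Ginv[np.ix_(nc, core)] = (-P * m_inv).T
    Ginv[nc, nc] = m_inv
    return Ginv


def solve_gblup(y, X, Z, Ginv, lam):
    """Solve [X'X X'Z; Z'X Z'Z + lam*Ginv] [b; u] = [X'y; Z'y] and return u."""
    lhs = np.block([[X.T @ X, X.T @ Z], [Z.T @ X, Z.T @ Z + lam * Ginv]])
    rhs = np.concatenate([X.T @ y, Z.T @ y])
    return np.linalg.solve(lhs, rhs)[X.shape[1]:]


# Hypothetical simulated data: 300 animals, 2,000 markers, h2 = 0.3.
rng = np.random.default_rng(3)
n, m, h2 = 300, 2000, 0.3
Mgeno = rng.binomial(2, 0.3, size=(n, m)).astype(float)
p = Mgeno.mean(axis=0) / 2.0
W = Mgeno - 2.0 * p
G = 0.99 * (W @ W.T) / (2.0 * np.sum(p * (1.0 - p))) + 0.01 * np.eye(n)
g = np.linalg.cholesky(G) @ rng.normal(size=n) * np.sqrt(h2)    # true values ~ N(0, G h2)
y = 10.0 + g + rng.normal(scale=np.sqrt(1.0 - h2), size=n)
X, Z, lam = np.ones((n, 1)), np.eye(n), (1.0 - h2) / h2

u_direct = solve_gblup(y, X, Z, np.linalg.inv(G), lam)
u_apy = solve_gblup(y, X, Z, apy_inverse(G, np.arange(100)), lam)
r = np.corrcoef(u_direct, u_apy)[0, 1]
print(f"GEBV correlation, APY (c = 100) vs direct: {r:.4f}")
```

When every animal is in the core, the APY inverse reduces to the direct inverse and the two GEBV vectors coincide. This makes a useful sanity check for any implementation.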

Table 3: Example Benchmark Results (Simulated n=40,000, c=5,000)

| Metric | Direct Inversion | APY Inversion | Reduction Factor |
|---|---|---|---|
| Inversion Time | 112 min | 9 min | 12.4x |
| Peak RAM Usage | 25.6 GB | 3.2 GB | 8x |
| GEBV Correlation (to Direct) | 1.000 | 0.998 | - |

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Tools for Large-Scale GBLUP Research

| Item / Software | Function / Purpose | Key Feature for Large-Scale Analysis |
|---|---|---|
| PLINK 2.0 | Genotype data management, quality control, and basic transformations. | Efficient handling of large-scale genotype files in binary format. |
| Intel Math Kernel Library (MKL) | Optimized, threaded mathematical routines for linear algebra (BLAS, LAPACK). | Dramatically accelerates the core matrix operations (Cholesky, inversion). |
| Proprietary/Research APY Solvers (e.g., APY in BLUPF90, GCTA-APY) | Specialized software implementing the APY algorithm for genomic prediction. | Built-in core selection and sparse inversion routines. |
| High-Performance Computing (HPC) Cluster | Parallel computing environment with distributed memory. | Enables splitting tasks across multiple nodes, overcoming single-node memory limits. |
| Python/R with Sparse Matrix Libraries (SciPy, Matrix) | Custom script development and analysis of results. | Provides tools for sparse matrix manipulation, crucial for handling G_APY⁻¹. |
| Effective Population Size (Ne) Estimators (e.g., SNeP, GCTA) | Estimates the historical Ne from genotype data. | Informs the biologically-based choice of core size (c ≈ 2Ne). |

APY Algorithm Logical Data Flow Diagram

Diagram Title: APY Algorithm Sparse Inverse Construction

Application Notes

The Algorithm for Proven and Young (APY) animals is a core computational strategy designed to enable genomic predictions for large-scale populations within the Genomic Best Linear Unbiased Prediction (GBLUP) framework. Its primary innovation is the partitioning of the population into two groups—"proven" (core) and "young" (non-core) animals—to approximate the inverse of the genomic relationship matrix (G) with reduced computational complexity.

Core Concept: The APY algorithm leverages the idea that the genetic information of young animals can be accurately represented as a linear combination of the genotypes of a smaller, representative subset of proven animals. This reduces the dimensionality of the problem from n (all animals) to c (core animals) plus n-c per-animal operations. As a result, the cubic (O(n³)) cost of direct inversion is replaced by a cost that is cubic in c and linear in the number of non-core animals.

Key Advantages in Large-Scale GBLUP:

- Computational Feasibility: Enables genomic evaluation for millions of animals on standard high-performance computing clusters.

- Memory Efficiency: Dramatically reduces RAM requirements for storing and inverting large genomic relationship matrices.

- Scalability: Computational cost increases linearly with the number of non-core animals, facilitating continuous inclusion of new phenotyped and genotyped individuals.

- Accuracy Retention: When the core group is sufficiently large and genetically representative, prediction accuracies are comparable to those from the full G inverse.

Selection of Core Animals: This is critical for algorithm performance. Strategies include:

- Maximizing genetic diversity (e.g., optimizing the mean predicted coefficient of determination).

- Ensuring representation across birth years, herds, and families.

- Selecting animals with high reliability of genomic estimated breeding values (GEBVs).

Data Presentation

Table 1: Comparative Analysis of APY Algorithm Performance in Simulation Studies

| Study Reference (Simulated) | Population Size (n) | Core Size (c) | Computational Time (Full vs. APY) | Memory Usage Reduction | GEBV Correlation (Full vs. APY) |
|---|---|---|---|---|---|
| Misztal et al. (2014) - Dairy Cattle | 100,000 | 10,000 | 48 hrs vs. 2 hrs | ~90% | >0.99 |
| Pocrnic et al. (2016) - Pigs | 200,000 | 15,000 | Not Feasible vs. 6.5 hrs | ~95% | 0.98 |
| Lourenco et al. (2020) - Beef Cattle | 1,500,000 | 35,000 | Not Feasible vs. 24 hrs | >99% | 0.97 |

Table 2: Key Determinants of APY Algorithm Efficacy

| Factor | Optimal Recommendation | Impact on Accuracy & Computation |
|---|---|---|
| Core Size (c) | 10,000 - 50,000 animals or sqrt(n) | Larger core increases accuracy asymptotically but also computational cost. |
| Core Selection Method | Maximizing Genetic Diversity (e.g., CDmean) | Superior to random selection; maintains accuracy with smaller core. |
| Trait Heritability | Applicable across range (0.05 - 0.6) | Higher heritability traits show smaller absolute accuracy loss. |
| Genotype Density | Medium (50K SNP) to High Density | Works effectively with standard chip densities; sequencing requires careful core selection. |

Experimental Protocols

Protocol 1: Implementing APY for a Large-Scale Genomic Evaluation Pipeline

Objective: To construct and invert the genomic relationship matrix using the APY algorithm for a population exceeding 500,000 genotyped animals.

Materials: Genotype data (e.g., SNP array), pedigree file, high-performance computing cluster with ≥64 GB RAM per node.

Methodology:

- Data Preparation: Quality control of genotypes (call rate, minor allele frequency). Phasing and imputation to a common SNP set.

- Core Selection: Using software (e.g., BLUPF90+, optiSel), calculate the genetic relationship matrix for all candidates. Apply the CDmean statistic to select c animals that maximize the average reliability of GEBVs for the non-core animals.

- Matrix Partitioning: Partition the population into Core (C) and Non-Core (N) groups based on Step 2.

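- Construct APY-G Inverse: Compute directly:

- G_CC⁻¹: The inverse of the genomic relationship matrix for the core animals.

- Diagonal block for non-core animals: G_NN⁻¹ = diag(λ_i), where λ_i = (g_ii - g_iC G_CC⁻¹ g_Ci)⁻¹ for the i-th non-core animal.

- Core blocks: -G_CC⁻¹ G_CN diag(λ_i) for core-by-non-core, and G_CC⁻¹ + G_CC⁻¹ G_CN diag(λ_i) G_NC G_CC⁻¹ for core-by-core.

- Off-diagonal elements within the non-core block are zero, so the inverse is sparse and its size grows only linearly with the number of non-core animals.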

- GBLUP Model Implementation: Incorporate G_APY⁻¹ into the mixed model equations:

$$\begin{bmatrix} X'X & X'Z \\ Z'X & Z'Z + \alpha H^{-1} \end{bmatrix} \begin{bmatrix} b \\ u \end{bmatrix} = \begin{bmatrix} X'y \\ Z'y \end{bmatrix}$$

where H⁻¹ is the augmented pedigree-genomic inverse built with G_APY⁻¹ and α is the ratio of residual to additive genetic variance.

- Validation: Perform k-fold cross-validation on young animals. Compare GEBVs from the APY model to those from a traditional model (if computationally feasible) using Pearson correlation and the regression slope of observed on predicted values.
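Only G_CC, G_CN and the diagonal of G_NN enter these formulas, so the λ_i can be computed directly from genotypes without building the full n × n matrix. The sketch below assumes complete 0/1/2 genotypes and VanRaden Method 1 scaling. It also applies a small identity blend (`ridge`) to keep G_CC invertible; this is an assumed stabilization, not part of the protocol. `apy_components_from_genotypes` is a hypothetical name. The example checks the result against a full G built the usual way on small data.

```python
import numpy as np


def apy_components_from_genotypes(M, core, ridge=0.01):
    """Return G_CC^-1, G_CN and lambda_i = 1 / m_ii without forming the non-core block.

    G is taken as (1 - ridge) * WW'/k + ridge * I (the ridge blend is an assumption).
    """
    M = np.asarray(M, dtype=float)
    n = M.shape[0]
    core = np.asarray(core)
    noncore = np.setdiff1d(np.arange(n), core)
    p = M.mean(axis=0) / 2.0
    k = 2.0 * np.sum(p * (1.0 - p))
    Zc = M[core] - 2.0 * p                                # centered core genotypes (c x m)
    Zn = M[noncore] - 2.0 * p                             # centered non-core genotypes
    Gcc = (1 - ridge) * (Zc @ Zc.T) / k + ridge * np.eye(len(core))
    Gcn = (1 - ridge) * (Zc @ Zn.T) / k                   # off-diagonal: no identity term
    gnn_diag = (1 - ridge) * np.einsum("ij,ij->i", Zn, Zn) / k + ridge   # diagonal only
    Gcc_inv = np.linalg.inv(Gcc)
    m = gnn_diag - np.einsum("ij,ij->j", Gcn, Gcc_inv @ Gcn)
    return Gcc_inv, Gcn, 1.0 / m


# Hypothetical small example, checked against the full G built the usual way.
rng = np.random.default_rng(2)
Mg = rng.binomial(2, 0.3, size=(40, 300))
core, noncore = np.arange(10), np.arange(10, 40)
Gcc_inv, Gcn, lam_i = apy_components_from_genotypes(Mg, core)

p = Mg.mean(axis=0) / 2.0
W = Mg - 2.0 * p
G_full = 0.99 * (W @ W.T) / (2.0 * np.sum(p * (1.0 - p))) + 0.01 * np.eye(40)
Gcn_full = G_full[np.ix_(core, noncore)]
lam_ref = 1.0 / (np.diag(G_full)[noncore]
                 - np.einsum("ij,ij->j", Gcn_full,
                             np.linalg.solve(G_full[np.ix_(core, core)], Gcn_full)))
print(np.allclose(lam_i, lam_ref))
```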

Protocol 2: Validating Core Group Representativeness

Objective: To empirically test if the selected core group adequately captures the population's genetic diversity.

Methodology:

- Principal Component Analysis (PCA): Perform PCA on the full genotype matrix and on the core subset only. Visually compare the overlap of the core and non-core animals in the PC1 vs. PC2 space.

- Genetic Distance Metrics: Calculate the mean Mahalanobis distance from each non-core animal to the centroid of the core group. A low average distance indicates good representation.

- Accuracy Proxy: Calculate the Coefficient of Determination (CD) for each non-core animal as CD_i = g_iC G_CC⁻¹ g_Ci / g_ii. Report the mean CD for non-core animals. A mean CD > 0.8 is typically acceptable.
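The CD proxy can be computed for all non-core animals at once. This sketch assumes a positive-definite G (blended with 1% identity in the example, as an assumption) and uses a linear solve rather than forming G_CC⁻¹. `noncore_cd` is a hypothetical name. The genotypes are simulated, so the resulting mean CD says nothing about real populations.

```python
import numpy as np


def noncore_cd(G, core):
    """CD_i = g_iC G_CC^-1 g_Ci / g_ii for each non-core animal (in index order)."""
    n = G.shape[0]
    core = np.asarray(core)
    noncore = np.setdiff1d(np.arange(n), core)
    Gcn = G[np.ix_(core, noncore)]
    # Solve G_CC X = G_CN instead of forming G_CC^-1 explicitly.
    explained = np.einsum("ij,ij->j", Gcn, np.linalg.solve(G[np.ix_(core, core)], Gcn))
    return explained / G[noncore, noncore]


rng = np.random.default_rng(3)
M = rng.binomial(2, 0.35, size=(60, 400))
p = M.mean(axis=0) / 2.0
W = M - 2.0 * p
G = 0.99 * (W @ W.T) / (2.0 * np.sum(p * (1.0 - p))) + 0.01 * np.eye(60)
cd = noncore_cd(G, core=np.arange(20))
print(f"mean CD for non-core animals: {cd.mean():.3f}")
```

For a positive-definite G, each CD_i lies between 0 and 1. Enlarging the core can only raise the CD of an animal that remains non-core.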

Visualization

Title: APY Algorithm Implementation Workflow

Title: APY Sparse Inverse Matrix Structure

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for APY-GBLUP Research

| Item / Software | Function in APY Research | Key Consideration |
|---|---|---|
| High-Density SNP Genotype Data | Raw input for constructing genomic relationship matrices. Data quality is paramount. | Standardized QC pipelines for call rate, MAF, and Hardy-Weinberg equilibrium. |
| BLUPF90+ Suite (e.g., PREGSF90, APY) | Standard software for implementing single-step GBLUP with the APY algorithm. | Open-source, actively maintained. Requires Fortran compiler and Linux environment. |
| Core Selection Software (optiSel, R packages) | Implements algorithms (CDmean, etc.) to select genetically representative core animals. | Integration with genomic data formats (PLINK, binary). Computational efficiency for large candidate sets. |
| High-Performance Computing (HPC) Cluster | Enables the heavy linear algebra computations (matrix inversions, MME solving). | RAM (≥64GB/node) is often more critical than CPU speed for large c. |
| Genetic Relationship Matrix Kernel (e.g., G = ZZ' / 2∑p_i(1-p_i)) | The foundational formula for constructing G from centered genotype matrix Z. | Choice of scaling factor and handling of missing data can affect stability. |
| Validation Dataset | A subset of animals with high-reliability EBVs or progeny-tested records. | Used for cross-validation to measure predictive ability (correlation, bias) of the APY model. |

Application Notes: Core Assumptions & Mechanism of APY

The Algorithm for Proven and Young (APY) is a computational framework designed to enable genomic prediction for extremely large genotyped populations within the Genomic Best Linear Unbiased Prediction (GBLUP) model. Its core function is dimensionality reduction, making the inversion of the genomic relationship matrix (G) computationally feasible. This is achieved by strategically leveraging assumptions about genetic relationships.

The key assumption is that the genetic variation in a large population can be accurately represented by the genetic variation captured by a smaller, carefully chosen subset of individuals, termed the "core" group. The remaining "non-core" individuals are conditionally dependent on this core. This assumption allows G to be partitioned and its inverse to be obtained through the Schur complement of the core block, yielding a sparse inverse.

Critical Assumptions:

- Conditional Independence of Non-Core Animals: Given the core group, the genomic values of non-core animals are assumed to be conditionally independent. This allows the inverse of the APY-modified G matrix (G_APY⁻¹) to be computed directly in sparse form.

- Sufficiency of the Core Subset: The core group must be of sufficient size and genetic diversity to act as an effective proxy for the entire population's allele frequency spectrum and linkage disequilibrium (LD) structure. A core size approximating the number of independent chromosome segments (Me) is theoretically required.

- Linear Additive Genetics: The model assumes the standard additive genetic architecture underlying GBLUP.

Quantitative Impact of Core Size Selection:

Table 1: Simulated Impact of Core Group Size on Model Metrics

| Core Size (c) | % of Total Population | Computational Time for G⁻¹ (hrs) | Predictive Ability (Corr.) | Bias (Regression Coeff.) |
|---|---|---|---|---|
| 5,000 | 5% | 0.5 | 0.58 | 0.96 |
| 10,000 | 10% | 1.1 | 0.62 | 0.98 |
| ~Me (15,000) | 15% | 2.5 | 0.63 | 1.01 |
| 25,000 | 25% | 8.7 | 0.63 | 1.00 |
| Full (100,000) | 100% | 48.0 (Infeasible) | 0.63 | 1.00 |

Theoretical Basis: The APY algorithm exploits the relationship g_non-core = P g_core + ε, where P = G_nc G_cc⁻¹ is the projection (regression) matrix of non-core on core genomic values, and ε is a residual genetic effect assumed to be independent across non-core individuals given the core.
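The regression relationship can be written out to show where the APY inverse comes from. The derivation below assumes multivariate-normal genomic values with covariance G σ²_g; subscripts c and n denote core and non-core animals, and M is the diagonal matrix of conditional variances:

$$
\begin{aligned}
g_n &= P\,g_c + \varepsilon, \qquad P = G_{nc}G_{cc}^{-1},\\
\operatorname{Var}(\varepsilon) &= \left(G_{nn} - G_{nc}G_{cc}^{-1}G_{cn}\right)\sigma^2_g \;\approx\; M\,\sigma^2_g, \qquad M = \operatorname{diag}\left(g_{ii} - g_{ic}G_{cc}^{-1}g_{ci}\right),\\
G_{APY} &= \begin{bmatrix} G_{cc} & G_{cn} \\ G_{nc} & G_{nc}G_{cc}^{-1}G_{cn} + M \end{bmatrix},\\
G_{APY}^{-1} &= \begin{bmatrix} G_{cc}^{-1} + G_{cc}^{-1}G_{cn}M^{-1}G_{nc}G_{cc}^{-1} & -G_{cc}^{-1}G_{cn}M^{-1} \\ -M^{-1}G_{nc}G_{cc}^{-1} & M^{-1} \end{bmatrix}.
\end{aligned}
$$

The only approximation is in the second line. Keeping just the diagonal of the conditional covariance is exactly the assumption that ε is independent across non-core individuals. The last line then follows from block inversion, with M as the Schur complement of G_cc in G_APY.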

Experimental Protocol: Implementing APY-GBLUP for Large-Scale Genomic Prediction

Objective: To perform genomic prediction for a population of 100,000 genotyped individuals using the APY algorithm to reduce the computational burden of inverting the Genomic Relationship Matrix.

Materials & Reagent Solutions:

Table 2: Research Reagent Solutions & Computational Toolkit

| Item | Function/Description |
|---|---|
| High-Density SNP Genotype Data | Raw genomic data (e.g., 50K-800K SNPs) for all animals. Format: PLINK (.bed/.bim/.fam) or variant call format (.vcf). |
| G Matrix Construction Software | preGSf90 (BLUPF90 family) or calc_grm (Wombat). Calculates the genomic relationship matrix. |
| APY Implementation Software | BLUPF90+ (APY-specific modules), miXBLUP, or custom R/Python scripts using sparse matrix libraries. |
| Core Selection Algorithm | Script for selecting the core subset. Methods: random, maximally unrelated (based on G), or k-means clustering on principal components. |
| Phenotypic & Pedigree Data | File with de-regressed proofs (DRP) or observed phenotypes and full pedigree for blending with genomic relationships. |
| High-Performance Computing (HPC) Cluster | Essential for handling large-scale matrix operations. Requires significant RAM (>256 GB) and multi-core processors. |

Protocol Steps:

Step 1: Data Preparation & Quality Control

- Merge genotype data from all 100,000 individuals.

- Apply standard QC: filter SNPs for call rate (>0.95), minor allele frequency (>0.01), and Hardy-Weinberg equilibrium (P > 10⁻⁶). Filter individuals for genotyping call rate (>0.90).

- Prepare phenotype files, ensuring proper alignment of animal IDs with genotype data.

Step 2: Selection of the Core Subset

- Estimate the effective number of chromosome segments (Me) for your population/species using $M_e = \frac{2N_eL}{\log(4N_eL)}$, where Ne is the effective population size and L is the genome length in Morgans.

- Set target core size (n_core) to be slightly greater than the estimated Me (e.g., Me + 10%).

- Execute core selection. Recommended protocol: Use principal component analysis (PCA) on the genotype matrix followed by k-means clustering to select `n_core` individuals that maximize genetic diversity.

- Code Snippet (R): `pcs <- prcomp(genotype_matrix); clusters <- kmeans(pcs$x[, 1:10], centers = n_core); core_ids <- ...` (select one individual per cluster).
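The core-size rule in this step is simple arithmetic. The sketch below assumes the logarithm in the Me formula is natural and uses hypothetical inputs (Ne = 100, L = 30 Morgans) purely for illustration. The function names are hypothetical.

```python
import math


def effective_segments(ne, genome_morgans):
    """Me = 2 Ne L / log(4 Ne L); natural logarithm assumed."""
    x = ne * genome_morgans
    return 2.0 * x / math.log(4.0 * x)


def target_core_size(ne, genome_morgans, margin=0.10):
    """Core size slightly above Me (Me + 10% by default), rounded up to an integer."""
    return math.ceil(effective_segments(ne, genome_morgans) * (1.0 + margin))


# Hypothetical inputs: Ne = 100, genome length L = 30 Morgans.
print(round(effective_segments(100, 30), 1), target_core_size(100, 30))
```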

Step 3: Construction and Inversion of G_APY

- Construct the full genomic relationship matrix G using the method of VanRaden (Method 1).

- Partition G into blocks:

- G_cc (core-core)

- G_cn (core-noncore)

- G_nn (noncore-noncore)

- Compute the sparse inverse directly:

- $G_{APY}^{-1} = \begin{bmatrix} G_{cc}^{-1} + G_{cc}^{-1} G_{cn} M^{-1} G_{nc} G_{cc}^{-1} & -G_{cc}^{-1} G_{cn} M^{-1} \\ -M^{-1} G_{nc} G_{cc}^{-1} & M^{-1} \end{bmatrix}$, where M is a diagonal matrix with elements $M_{ii} = g_{nn,ii} - g_{nc,i}' G_{cc}^{-1} g_{cn,i}$ for each non-core animal i.

- Implement in software (e.g., BLUPF90): Provide a file listing core animal IDs. The software automatically performs the partitioned inverse.

Step 4: Solving the Mixed Model Equations

- Set up the standard GBLUP mixed model: y = Xb + Zu + e, where u ~ N(0, G_APY σ²g).

- Replace the inverse of the usual G with G_APY⁻¹ in the mixed model equations.

- Iterate to solve for genomic estimated breeding values (GEBVs) for all 100,000 animals.

Step 5: Validation & Bias Assessment

- Perform k-fold cross-validation (e.g., 5-fold), ensuring families are split across folds.

- In each fold, predict GEBVs for validation animals using the model trained on the remaining animals.

- Calculate predictive ability (correlation between predicted and observed in validation) and bias (regression coefficient of observed on predicted).
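The instruction about families can be implemented in more than one way. This sketch assumes one reading: every family is kept intact, and whole families are dealt to the k folds, so no family contributes animals to both training and validation within the same fold. `family_folds` is a hypothetical helper, and the model fitting inside each fold is omitted.

```python
import numpy as np


def family_folds(family_ids, k=5, seed=0):
    """Fold label per animal; each family falls entirely within one fold."""
    family_ids = np.asarray(family_ids)
    rng = np.random.default_rng(seed)
    families = np.unique(family_ids)
    rng.shuffle(families)                                 # random order of whole families
    fold_of_family = {fam: i % k for i, fam in enumerate(families.tolist())}
    return np.array([fold_of_family[f] for f in family_ids.tolist()])


# Hypothetical data: 1,000 animals from 120 families.
rng = np.random.default_rng(4)
fam = rng.integers(0, 120, size=1000)
folds = family_folds(fam, k=5)
for f in range(5):
    val = folds == f                  # validation animals; train on the rest (~val)
    print(f, int(val.sum()), np.unique(fam[val]).size)
```

Dealing families round-robin balances the number of families per fold, not the number of animals. With very unequal family sizes, a size-aware assignment may be preferable.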

Visualization of APY Logic and Workflow

APY Dimensionality Reduction Computational Workflow

APY Matrix Partitioning and Key Assumption

The Algorithm for Proven and Young (APY) animals is a pivotal method for reducing the computational burden of the genomic best linear unbiased prediction (GBLUP) model in large-scale genomic evaluations. Its efficacy is not inherent; it depends on specific, high-quality data inputs and a careful analysis of population structure. This protocol details the foundational data prerequisites and structural analyses required for robust APY implementation, directly supporting the thesis objective of enabling genome-wide prediction for millions of animals.

Core Data Requirements & Quantitative Specifications

Effective APY application partitions the population into a core (c) and a non-core (nc) group. The inverse of the genomic relationship matrix (G⁻¹) is approximated by inverting only the core block and adding one diagonal term per non-core animal, drastically reducing dimensionality. The quality of this approximation is fundamentally dependent on the data underpinning the G matrix.

Table 1: Minimum Data Requirements for APY-GBLUP Implementation

| Data Component | Specification | Purpose & Rationale |
|---|---|---|
| Genotype Data | High-density SNP array (e.g., > 50K SNPs). Quality control: Call Rate > 0.95, MAF > 0.01, Geno QC > 0.98. | Constructs an accurate genomic relationship matrix (G). Low-quality markers introduce noise, degrading prediction accuracy. |
| Phenotype Data | Reliable, pre-adjusted records for the target trait(s). Contemporary groups should be clearly defined. | Provides the vector of observations for solving the mixed model equations. Data should be free of systematic environmental bias. |
| Pedigree Information | Complete, validated pedigree for at least 2-3 generations. | Used optionally to create a pedigree relationship matrix (A) for blending with G (e.g., ssGBLUP) or for validation. |
| Core Subset Size | Minimum: sqrt(Total Genotyped Population). Optimal: 5,000 - 15,000, population-dependent. | Must be sufficiently large to capture the majority of genetic variation and linkage disequilibrium (LD) patterns in the full population. |
| Core Selection | Based on genetic diversity (e.g., maximizing relationships). Not random. | Ensures the core is a genetic "anchor" that reliably represents the allelic distribution of the non-core animals. |

Protocol: Analysis of Population Structure for Core Selection

A non-random, algorithmically selected core is critical. This protocol outlines a method for core selection based on maximizing genetic connections.

Experimental Protocol: Optimal Core Selection via Genetic Diversity Analysis

- Input: Genotype matrix (M) of dimensions n (animals) x m (SNPs), coded as 0,1,2.

- Calculate the Genomic Relationship Matrix (G):

- Center the genotype matrix: Z = M - P, where P is a matrix of twice the allele frequency (2pᵢ).

- Compute G = (Z Zᵀ) / [2 Σ pᵢ(1-pᵢ)], as per VanRaden (2008).

- Implement Core Selection Algorithm (Example: Greedy Algorithm):

- Step 1: Initialize an empty core list.

- Step 2: Calculate the diagonal of G (represents self-relationship). Select the animal with the highest value as the first core animal.

- Step 3: For each remaining candidate animal, calculate its average relationship to the current core set.

- Step 4: Select the animal with the highest average relationship to the current core and add it to the core set. This maximizes the core's connectivity.

- Step 5: Repeat Steps 3-4 until the predefined core size (e.g., 10,000) is reached.

- Validation Step: Perform Principal Component Analysis (PCA) on the full genotype matrix. Visualize the first two PCs, color-coding core (red) and non-core (blue) animals. The core should be centrally located and span the genetic space of the population.
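The G computation and greedy core selection above can be sketched in NumPy as follows. This is a minimal illustration, assuming the genotype matrix fits in memory as an n × m array coded 0/1/2 and that allele frequencies are estimated from the same animals; the function names vanraden_g and greedy_core are hypothetical and not taken from any package.

```python
import numpy as np


def vanraden_g(M):
    """VanRaden (2008) method 1: G = ZZ' / (2 * sum p_i(1 - p_i)); M is n x m, coded 0/1/2."""
    p = M.mean(axis=0) / 2.0                  # allele frequency per SNP
    Z = M - 2.0 * p                           # Z = M - P, each column centred by 2p_i
    return Z @ Z.T / (2.0 * np.sum(p * (1.0 - p)))


def greedy_core(G, core_size):
    """Greedy core selection (Steps 1-5): repeatedly add the animal with the
    highest average relationship to the current core."""
    n = G.shape[0]
    in_core = np.zeros(n, dtype=bool)
    first = int(np.argmax(np.diag(G)))        # Step 2: highest self-relationship
    core = [first]
    in_core[first] = True
    rel_sum = G[:, first].copy()              # running sum of relationships to the core
    while len(core) < core_size:              # Step 5: repeat until the target size
        avg_rel = rel_sum / len(core)         # Step 3: average relationship to the core
        avg_rel[in_core] = -np.inf            # only non-core candidates are eligible
        best = int(np.argmax(avg_rel))        # Step 4: highest average relationship
        core.append(best)
        in_core[best] = True
        rel_sum += G[:, best]
    return core


# Toy example: 200 animals, 1,000 SNPs
rng = np.random.default_rng(1)
M = rng.binomial(2, 0.3, size=(200, 1000)).astype(float)
G = vanraden_g(M)
core = greedy_core(G, core_size=20)
```

Keeping a running sum of relationships to the core makes each greedy step O(n) instead of re-averaging over the whole core. For very large populations G would not be held in memory; relationships to each newly added core animal would be computed on the fly from genotypes.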

Diagram 1: Workflow for Population Structuring and APY

Protocol: Validating APY Approximation Accuracy

Once the core is selected, the accuracy of the APY approximation (G_APY⁻¹) must be validated before full-scale deployment.

Experimental Protocol: Validation of G_APY⁻¹ Against Direct G⁻¹

- Create a Test Dataset: Randomly sample a manageable subpopulation (n ≈ 5,000) from your full dataset. Ensure it includes animals from both core and non-core groups.

- Compute Benchmark Matrix:

- Construct the full genomic relationship matrix (G_full) for the test dataset.

- Compute its direct inverse (G_full⁻¹) using standard methods (e.g., Cholesky decomposition). This is your gold standard.

- Compute APY Approximation:

- Within the test dataset, re-designate the core/non-core animals based on your selection method.

- Apply the APY algorithm to construct the approximate inverse (G_APY⁻¹).

- Metrics for Comparison:

- Matrix Correlation: Calculate the Pearson correlation between the off-diagonal elements of G_full⁻¹ and G_APY⁻¹.

- Key Statistic Comparison: From the mixed model equations, compare the genomic estimated breeding values (GEBVs) obtained with each matrix. The correlation between the two sets of GEBVs should be > 0.99.

- Computational Time: Record the time to compute G_APY⁻¹ versus G_full⁻¹.
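A toy-scale sketch of the matrix-correlation and timing metrics, assuming a dense G that fits in memory and a randomly chosen core (both assumptions for illustration). apy_inverse and offdiag_corr are hypothetical helper names; apy_inverse stores G_APY⁻¹ densely only because the example is small, whereas a production implementation keeps G_cc⁻¹, G_nc and the diagonal separately. Timings at this size are not representative of the reductions in Table 2.

```python
import time

import numpy as np


def apy_inverse(G, core):
    """APY inverse of G, returned in the original animal order, for a given core index set."""
    n = G.shape[0]
    core = np.asarray(core)
    non = np.setdiff1d(np.arange(n), core)
    Gcc_inv = np.linalg.inv(G[np.ix_(core, core)])
    Gnc = G[np.ix_(non, core)]
    A = Gnc @ Gcc_inv                                   # G_nc G_cc^-1
    d_inv = 1.0 / (np.diag(G)[non] - np.sum(A * Gnc, axis=1))
    AtD = A.T * d_inv                                   # G_cc^-1 G_cn D^-1
    Ginv = np.zeros_like(G)
    Ginv[np.ix_(core, core)] = Gcc_inv + AtD @ A
    Ginv[np.ix_(core, non)] = -AtD
    Ginv[np.ix_(non, core)] = -AtD.T
    Ginv[non, non] = d_inv                              # diagonal non-core block
    return Ginv


def offdiag_corr(A, B):
    """Pearson correlation between the off-diagonal elements of two square matrices."""
    mask = ~np.eye(A.shape[0], dtype=bool)
    return float(np.corrcoef(A[mask], B[mask])[0, 1])


rng = np.random.default_rng(7)
M = rng.binomial(2, 0.25, size=(400, 2000)).astype(float)
p = M.mean(axis=0) / 2
Z = M - 2 * p
G = Z @ Z.T / (2 * np.sum(p * (1 - p))) + 0.01 * np.eye(400)   # ridge: centred G is singular
core = rng.choice(400, size=150, replace=False)

t0 = time.perf_counter()
G_full_inv = np.linalg.inv(G)                       # gold standard (direct inverse)
t_full = time.perf_counter() - t0
t0 = time.perf_counter()
G_apy_inv = apy_inverse(G, core)
t_apy = time.perf_counter() - t0
r_offdiag = offdiag_corr(G_full_inv, G_apy_inv)
```

When the core contains every animal, apy_inverse reduces to the direct inverse, which is a convenient sanity check before running on real data.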

Table 2: Example Validation Results (Simulated Data)

| Validation Metric | Target Threshold | Example Outcome | Interpretation |
|---|---|---|---|
| Matrix Correlation (off-diagonals) | > 0.98 | 0.991 | Excellent approximation of relational information. |
| GEBV Correlation | > 0.99 | 0.998 | Negligible impact on final predictions. |
| Time to Invert (n=5,000) | Reduction > 80% | Direct: 120s, APY: 18s | 85% reduction in compute time achieved. |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagents and Computational Tools for APY Implementation

| Item / Solution | Function / Purpose |
|---|---|
| High-Density SNP Genotyping Array | Platform for generating standardized genomic data (e.g., Illumina BovineHD, PorcineGGP). |
| Genotype QC Pipeline (e.g., PLINK, bcftools) | Software for filtering SNPs and samples based on call rate, MAF, and Hardy-Weinberg equilibrium. |
| Genomic Relationship Matrix Software (PREGSF90, GCTA) | Specialized tools to efficiently construct the G matrix from large genotype files. |
| APY-Specific Software (APY90, BLUPF90+) | Optimized software packages that implement the APY algorithm for ssGBLUP/GBLUP models. |
| High-Performance Computing (HPC) Cluster | Essential for handling memory-intensive matrix operations and solving mixed models for large N. |
| Principal Component Analysis Tool (GCTA, R) | Used to visualize population genetic structure and validate core selection. |

Diagram 2: APY Logic in the GBLUP Pathway

Step-by-Step Implementation: Building and Applying APY-GBLUP in Practice

Within the context of research on the APY (Algorithm for Proven and Young) algorithm for large-scale Genomic Best Linear Unbiased Prediction (GBLUP) implementation, the critical first step is the strategic partitioning of the genotyped population into 'Core' and 'Non-Core' (Young) subsets. This partitioning is fundamental to achieving computational tractability while maintaining high accuracy in genomic predictions. The APY algorithm approximates the inverse of the genomic relationship matrix (G⁻¹) by leveraging a core subset of animals, thereby bypassing the need to invert the entire, potentially massive, G matrix. This document provides application notes and detailed protocols for defining and selecting these subsets.

Key Concepts and Quantitative Data

The efficacy of the APY approximation hinges on the composition and size of the Core subset. The Core must be chosen to collectively represent the genetic diversity and linkage disequilibrium (LD) patterns of the entire population. The Non-Core (Young) subset typically consists of more recently genotyped individuals (e.g., young selection candidates) with genetic relationships defined primarily through the Core.

Table 1: Summary of Core Subset Selection Criteria and Impact

| Criterion | Description | Typical Target/Value | Rationale |
|---|---|---|---|
| Genetic Diversity | Core animals should span the principal components of genetic space. | Maximize allelic representation. | Ensures the Core captures population-wide LD structure. |
| Minimizing Relatives | Select animals to minimize close familial relationships within the Core. | Avoid parent-offspring or full-sib pairs. | Reduces redundancy and improves numerical stability. |
| Core Size (NC) | Number of individuals in the Core subset. | ~10,000 or sqrt(Total Genotyped Population) | Balances computational cost (O(NC³)) with approximation accuracy. |
| Effective Population Size (Ne) | A parameter influencing required Core size. | Species/breed specific (e.g., Dairy Cattle Ne ~ 100). | Larger Ne may require a larger Core for adequate representation. |
| Prediction Accuracy | Correlation between APY-GBLUP and traditional GBLUP EBVs. | Target > 0.99 for proven animals. | Primary metric for validating subset selection. |

Table 2: Comparison of Core Selection Methods

| Method | Protocol Summary | Advantages | Limitations |
|---|---|---|---|
| Random Selection | Simple random sampling from the entire genotyped pool. | Fast, unbiased, easy to implement. | May miss rare alleles; inefficient for small Core sizes. |
| K-Means Clustering | Cluster animals in genetic PC space, then sample from each cluster. | Actively ensures diversity coverage. | Computationally heavy for initial clustering. |
| Average Relationship | Iteratively select animals with lowest average relationship to already chosen Core. | Minimizes redundancy effectively. | Sequential process; can be slow for large datasets. |
| Algorithmic Optimization | Use mixed-model equations or graph theory to optimize a determinantal criterion. | Potentially optimal for a given Core size. | Complex implementation; high computational demand. |

Experimental Protocols

Protocol 1: Defining the Non-Core (Young) Subset

Objective: To identify all genotyped individuals that will not be part of the Core matrix inversion in the APY algorithm.

- Data Requirement: A complete list of all genotyped animals with their genotype IDs and birth dates.

- Selection Rule: Apply a birth date cutoff. All animals born after a specific date (e.g., the last five years) are automatically assigned to the Non-Core subset.

- Supplementary Rule: Animals born before the cutoff but with no progeny or own performance records (i.e., "young" in a genetic evaluation context) can also be assigned to Non-Core.

- Output: A vector, `Young_IDs`, containing the unique identifiers for all Non-Core animals.
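Protocol 1 reduces to a filter over animal records. The sketch below assumes each genotyped animal has a birth date, a progeny count and an own-record flag; the GenotypedAnimal class and young_ids function are hypothetical names, and the supplementary rule is optional (include_uninformative).

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class GenotypedAnimal:
    animal_id: str
    birth_date: date
    n_progeny: int = 0
    has_own_record: bool = False


def young_ids(animals, cutoff, include_uninformative=True):
    """Return Young_IDs: unique IDs of animals assigned to the Non-Core subset."""
    ids = []
    for a in animals:
        born_after_cutoff = a.birth_date > cutoff                      # selection rule
        uninformative = a.n_progeny == 0 and not a.has_own_record     # supplementary rule
        if born_after_cutoff or (include_uninformative and uninformative):
            ids.append(a.animal_id)
    return list(dict.fromkeys(ids))                                    # unique, input order kept


animals = [
    GenotypedAnimal("A1", date(2015, 3, 1), n_progeny=12, has_own_record=True),
    GenotypedAnimal("A2", date(2016, 5, 2)),
    GenotypedAnimal("A3", date(2021, 1, 9), has_own_record=True),
]
Young_IDs = young_ids(animals, cutoff=date(2019, 1, 1))   # ['A2', 'A3']
```

A1 stays eligible for the Core because it is older than the cutoff and has both progeny and its own record; A2 is assigned to Non-Core only through the supplementary rule.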

Protocol 2: Selecting the Core Subset via K-Means Clustering on Genetic Principal Components

Objective: To select a genetically diverse Core subset of size k.

- Input Data: A genomic relationship matrix (G) or a matrix of genotype dosages (0,1,2) for all m markers and n animals (excluding those already in the Non-Core subset).

- Perform PCA: a. Standardize the genotype matrix (column mean=0, variance=1). b. Compute the n x n covariance matrix. c. Perform eigen-decomposition to obtain the first p principal components (PCs). (Typically, p = 50-100 captures sufficient variance). d. Result is an n x p matrix of PC scores.

- K-Means Clustering: a. Set the number of clusters equal to the desired Core size, NC. b. Apply the K-means algorithm to the PC score matrix, iterating until cluster centroids stabilize. c. Each of the NC clusters defines a region in genetic space.

- Select Core Animals:

a. Within each cluster, select the animal closest to the cluster centroid (in Euclidean distance).

b. Alternatively, randomly sample one animal from each cluster.

c. Compile the selected animal IDs into the `Core_IDs` vector.

- Validation: Visually inspect the Core and Non-Core selections on a PC1 vs. PC2 plot to ensure broad coverage.
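Protocol 2 can be sketched with NumPy alone. This is an illustration on simulated genotypes, not a production implementation: pc_scores, kmeans and core_from_clusters are hypothetical helpers, k-means uses plain Lloyd iterations with random initial centroids, and the distance step holds an n × k × p array, so for real data a library implementation (for example scikit-learn's KMeans) would be preferable. Empty clusters are skipped, so the core can come out slightly smaller than NC.

```python
import numpy as np


def pc_scores(M, n_pcs):
    """Steps a-d: standardise SNPs, form the n x n covariance, eigen-decompose, return n x p scores."""
    X = M - M.mean(axis=0)
    sd = X.std(axis=0)
    keep = sd > 0                                   # drop monomorphic SNPs (zero variance)
    X = X[:, keep] / sd[keep]                       # a. column mean 0, variance 1
    C = X @ X.T / X.shape[1]                        # b. n x n covariance among animals
    vals, vecs = np.linalg.eigh(C)                  # c. eigen-decomposition (ascending order)
    top = np.argsort(vals)[::-1][:n_pcs]
    return vecs[:, top] * np.sqrt(np.clip(vals[top], 0.0, None))   # d. PC scores


def kmeans(X, k, n_iter=100, seed=0):
    """Plain Lloyd k-means; initial centroids are k distinct random animals."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):             # centroids have stabilised
            break
        centroids = new
    return centroids, labels


def core_from_clusters(X, centroids, labels):
    """In each non-empty cluster, select the animal closest (Euclidean) to the centroid."""
    core = []
    for j, centre in enumerate(centroids):
        members = np.flatnonzero(labels == j)
        if members.size:
            core.append(int(members[((X[members] - centre) ** 2).sum(axis=1).argmin()]))
    return core


rng = np.random.default_rng(3)
M = rng.binomial(2, 0.3, size=(300, 800)).astype(float)
scores = pc_scores(M, n_pcs=10)
centroids, labels = kmeans(scores, k=25)
Core_IDs = core_from_clusters(scores, centroids, labels)
```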

Protocol 3: Validating Core/Non-Core Partition for APY-GBLUP

Objective: To verify that the selected subsets yield an accurate APY approximation.

- Construct Matrices: Build the full G matrix and the APY-approximated G_APY⁻¹ using the selected `Core_IDs`.

- Benchmark Analysis: Run a single-trait GBLUP analysis on a dataset with known EBVs using: a. The full G⁻¹ (if computationally feasible). b. The G_APY⁻¹.

- Evaluate: Calculate the correlation between Estimated Breeding Values (EBVs) from (a) and (b) for animals in a validation set (e.g., older Non-Core animals). Target correlation > 0.99.

- Computational Benchmark: Record the time and memory usage for both methods to quantify efficiency gains.
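The benchmark comparison can be illustrated on a toy dataset, assuming a single-trait model with an overall mean, one record per animal (design matrix equal to the identity) and a variance ratio λ = σ²_e/σ²_u = 1. For a small example, G_APY⁻¹ is obtained here by explicitly inverting the implied matrix G_APY (non-core off-diagonals replaced by their projection through the core); at scale the block formula would be used instead. gblup_gebv and implied_g_apy are hypothetical names.

```python
import numpy as np


def gblup_gebv(y, Ginv, lam):
    """Solve the MME for y = 1*mu + u + e with one record per animal (design matrix Z = I)."""
    n = len(y)
    lhs = np.empty((n + 1, n + 1))
    lhs[0, 0] = n
    lhs[0, 1:] = 1.0
    lhs[1:, 0] = 1.0
    lhs[1:, 1:] = np.eye(n) + lam * Ginv
    rhs = np.concatenate(([y.sum()], y))
    return np.linalg.solve(lhs, rhs)[1:]              # drop mu, keep GEBVs


def implied_g_apy(G, core):
    """G_APY: non-core off-diagonals replaced by G_nc G_cc^-1 G_cn; diagonal kept as g_ii."""
    non = np.setdiff1d(np.arange(G.shape[0]), core)
    Gnc = G[np.ix_(non, core)]
    G_apy = G.copy()
    G_apy[np.ix_(non, non)] = Gnc @ np.linalg.solve(G[np.ix_(core, core)], Gnc.T)
    G_apy[non, non] = np.diag(G)[non]                 # adding d_i restores g_ii
    return G_apy


rng = np.random.default_rng(11)
n = 300
W = rng.binomial(2, 0.3, size=(n, 1500)).astype(float)
p = W.mean(axis=0) / 2
Zg = W - 2 * p
G = Zg @ Zg.T / (2 * np.sum(p * (1 - p))) + 0.01 * np.eye(n)
u_true = np.linalg.cholesky(G) @ rng.standard_normal(n)
y = 10.0 + u_true + rng.standard_normal(n)            # h2 about 0.5, so lambda = 1
core = np.arange(100)
valid = np.arange(100, n)                              # evaluate on non-core animals

gebv_full = gblup_gebv(y, np.linalg.inv(G), lam=1.0)                      # (a) full G^-1
gebv_apy = gblup_gebv(y, np.linalg.inv(implied_g_apy(G, core)), lam=1.0)  # (b) G_APY^-1
r_gebv = np.corrcoef(gebv_full[valid], gebv_apy[valid])[0, 1]
```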

Visualizations

Diagram 1: Workflow for Defining Core and Non-Core Subsets.

Diagram 2: Matrix Partitioning in the APY Algorithm.

The Scientist's Toolkit

Table 3: Essential Research Reagents and Materials for Core/Non-Core Analysis

| Item | Function / Purpose | Example / Specification |
|---|---|---|
| Genotype Data | Raw input for relationship matrix calculation. | SNP array (e.g., Illumina BovineHD 777K) or whole-genome sequencing variant call format (VCF) files. |
| High-Performance Computing (HPC) Cluster | Essential for handling large-scale genomic data matrices and iterative algorithms. | Cluster with >= 64 cores, 512GB RAM, and parallel computing software (e.g., OpenMPI). |
| Statistical Software Suite | For data manipulation, PCA, clustering, and matrix operations. | R (with rrBLUP, cluster, SNPRelate packages), Python (with numpy, scikit-learn, pygwas libraries). |
| Genetic Relationship Matrix Calculator | Software to efficiently construct the G matrix from genotype data. | PLINK 2.0, GCTA, or custom scripts using BLAS-optimized libraries. |
| Core Selection Algorithm Scripts | Custom implementation of selection protocols (e.g., K-Means on PCs). | Python/R scripts encapsulating Protocol 2. |
| APY-GBLUP Solver Software | Specialized software that implements the APY algorithm for mixed model equations. | Custom Fortran/C++ programs, or the APY module in BLUPF90 suite. |
| Data Visualization Tool | To visualize genetic PCA and subset selection outcomes. | R ggplot2, Python matplotlib/seaborn. |

Within the broader thesis on enabling large-scale Genomic Best Linear Unbiased Prediction (GBLUP), the APY (Algorithm for Proven and Young animals) algorithm is a cornerstone methodology for managing the computational burden of inverting the genomic relationship matrix (G). This step constructs the partitioned G matrix (G_APY), which allows for an efficient inverse, facilitating the analysis of millions of genotypes in livestock, plant, and human genetics, with significant implications for genomic selection in pharmaceutical target discovery and breeding.

Core Computational Principle

The APY algorithm partitions animals into two groups: core (c) and non-core (n). The core group is a subset of genotyped individuals chosen to represent the genetic variation of the entire population. The genomic relationship matrix is then partitioned, and its inverse is obtained indirectly through the partitioned-matrix (Schur-complement) inverse, which requires inverting only the core block. This reduces the cost of the inversion from O(m³) to roughly O(c³ + mc²), i.e., cubic in the core size but linear in m, where m is the total number of animals and c is the core size (c << m).

Foundational Equation

The inverse of G_APY is computed as:

\[
G_{APY}^{-1} = \begin{bmatrix} G_{cc}^{-1} & 0 \\ 0 & 0 \end{bmatrix} + \begin{bmatrix} -G_{cc}^{-1} G_{cn} \\ I_n \end{bmatrix} M_{nn}^{-1} \begin{bmatrix} -G_{nc} G_{cc}^{-1} & I_n \end{bmatrix}
\]

where \( M_{nn} = \mathrm{diag}\{ d_i \} \) and \( d_i = g_{ii} - g_{i,core} G_{cc}^{-1} g_{core,i} \).
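The equation can be checked numerically: it is the exact inverse of an approximated matrix G_APY that keeps G_cc, G_cn and the diagonal of G_nn, but replaces the off-diagonal non-core relationships by G_nc G_cc⁻¹ G_cn. The NumPy sketch below uses a small random positive-definite matrix (an assumption standing in for a real G) with the core animals ordered first.

```python
import numpy as np

rng = np.random.default_rng(5)
c, n = 30, 70                                       # core and non-core sizes
W = rng.standard_normal((c + n, 500))
G = W @ W.T / 500 + 0.01 * np.eye(c + n)            # toy positive-definite G, core animals first
G_cc, G_cn = G[:c, :c], G[:c, c:]
G_nc = G_cn.T
G_cc_inv = np.linalg.inv(G_cc)

# M_nn = diag{d_i}, d_i = g_ii - g_{i,core} G_cc^{-1} g_{core,i}
d = np.diag(G)[c:] - np.sum((G_nc @ G_cc_inv) * G_nc, axis=1)
M_nn_inv = np.diag(1.0 / d)

first = np.block([[G_cc_inv, np.zeros((c, n))],
                  [np.zeros((n, c)), np.zeros((n, n))]])
B = np.vstack([-G_cc_inv @ G_cn, np.eye(n)])        # [-G_cc^{-1} G_cn ; I_n]
G_apy_inv = first + B @ M_nn_inv @ B.T              # B.T equals [-G_nc G_cc^{-1}, I_n]

# The matrix the formula inverts exactly: G_nn replaced by G_nc G_cc^{-1} G_cn + M_nn
G_apy = np.block([[G_cc, G_cn],
                  [G_nc, G_nc @ G_cc_inv @ G_cn + np.diag(d)]])
```

The same check makes clear what APY approximates: relationships among non-core animals are assumed to be explained through the core, apart from an animal-specific residual d_i.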

Application Notes & Protocol

Core Selection Protocol

The choice of the core subset is critical. Multiple strategies exist, with varying impacts on accuracy.

Protocol:

- Input: A dense, genome-wide SNP matrix (Z) for m individuals, centered and scaled.

- Objective: Select c individuals (e.g., 5,000-10,000 from 100,000+).

- Method Options:

- Random Selection: Simple baseline. Randomly sample c individuals.

- Algorithmic Selection (Recommended): a. Perform principal component analysis (PCA) or singular value decomposition (SVD) on the full SNP matrix (Z). b. Apply the k-means++ clustering algorithm on the first 50-100 principal components. c. Designate the individuals closest to each cluster centroid as core members. d. Validate that the chosen core spans the PC space of the full population.

- Output: A list of c individual IDs designated as the core group.
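Step b names k-means++, whose distinguishing feature is the seeding rule. The sketch below, assuming a NumPy environment and simulated genotypes, obtains PC scores through a thin SVD and draws k-means++ seeds; pcs_by_svd and kmeans_pp_seeds are hypothetical names. The seeds would then be refined by ordinary k-means iterations before step c picks the individual nearest each centroid.

```python
import numpy as np


def pcs_by_svd(Z, n_pcs):
    """PC scores of a centred/scaled SNP matrix Z (individuals x SNPs) via thin SVD: U * s."""
    U, s, _ = np.linalg.svd(Z, full_matrices=False)
    return U[:, :n_pcs] * s[:n_pcs]


def kmeans_pp_seeds(X, k, seed=0):
    """k-means++ seeding: each new seed is drawn with probability proportional to its
    squared distance from the nearest seed already chosen."""
    rng = np.random.default_rng(seed)
    seeds = [int(rng.integers(len(X)))]
    d2 = ((X - X[seeds[0]]) ** 2).sum(axis=1)
    for _ in range(1, k):
        nxt = int(rng.choice(len(X), p=d2 / d2.sum()))
        seeds.append(nxt)
        d2 = np.minimum(d2, ((X - X[nxt]) ** 2).sum(axis=1))
    return seeds


rng = np.random.default_rng(2)
M = rng.binomial(2, 0.3, size=(500, 1200)).astype(float)
sd = M.std(axis=0)
Z = (M[:, sd > 0] - M[:, sd > 0].mean(axis=0)) / sd[sd > 0]   # centred and scaled
scores = pcs_by_svd(Z, n_pcs=50)
seeds = kmeans_pp_seeds(scores, k=40)
```

Because already-chosen points have zero distance to the seed set, they are never drawn twice, which spreads the initial centroids across PC space.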

Matrix Partitioning & Construction Protocol

This protocol details the computation of the partitioned G matrix itself.

Protocol:

- Compute the Standard G Matrix: Use the first method of VanRaden (2008): \( G = \frac{ZZ'}{k} \), where Z is the centered SNP matrix (genotypes minus 2pᵢ) and k is a scaling constant, usually \( k = 2\sum_i p_i(1-p_i) \).

- Partition G: Reorder G such that core individuals are first.

\[
G = \begin{bmatrix} G_{cc} & G_{cn} \\ G_{nc} & G_{nn} \end{bmatrix}
\]

- \( G_{cc} \) (c x c): Relationships among core individuals.

- \( G_{cn} \) (c x n): Relationships between core and non-core.

- \( G_{nn} \) (n x n): Relationships among non-core individuals (not explicitly computed in full).

- Compute \( G_{cc}^{-1} \): Perform a direct inversion of the c x c matrix using Cholesky decomposition (LAPACK `dpotrf`/`dpotri` routines).

- Compute the Diagonal Matrix \( M_{nn} \): a. For each non-core individual *i*: b. Extract the vector \( g_{i,core} \) (1 x c) from \( G_{nc} \). c. Calculate \( d_i = g_{ii} - g_{i,core} G_{cc}^{-1} g_{i,core}^T \). d. Populate \( M_{nn} \) as a diagonal matrix with elements \( d_i \).

- Assemble ( G_{APY}^{-1} ): Use the Foundational Equation above to construct the sparse inverse directly. This inverse can be used directly in mixed model equations for GBLUP.
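Steps a to d can be vectorised. With the Cholesky factor G_cc = LLᵀ, the quadratic form g_{i,core} G_cc⁻¹ g_{i,core}ᵀ equals the squared norm of L⁻¹ g_{i,core}ᵀ, so all d_i follow from one triangular solve. The NumPy sketch below compares this with the per-animal loop on toy data; the function names are hypothetical, and np.linalg.solve is used for brevity even though a dedicated triangular solver (e.g., scipy.linalg.solve_triangular) would exploit the structure of L.

```python
import numpy as np


def apy_diagonal_loop(g_nn_diag, G_nc, G_cc_inv):
    """Steps a-d as written: d_i = g_ii - g_{i,core} G_cc^{-1} g_{i,core}^T, one animal at a time."""
    return np.array([g_nn_diag[i] - G_nc[i] @ G_cc_inv @ G_nc[i] for i in range(len(g_nn_diag))])


def apy_diagonal_chol(g_nn_diag, G_nc, G_cc):
    """Same quantity from G_cc = L L^T: d_i = g_ii - ||L^{-1} g_{i,core}^T||^2."""
    L = np.linalg.cholesky(G_cc)                      # lower-triangular Cholesky factor
    W = np.linalg.solve(L, G_nc.T)                    # L^{-1} G_cn, one column per non-core animal
    return g_nn_diag - np.sum(W * W, axis=0)


rng = np.random.default_rng(4)
X = rng.standard_normal((250, 600))
G = X @ X.T / 600 + 0.01 * np.eye(250)               # toy positive-definite G
core, non = np.arange(50), np.arange(50, 250)
G_cc, G_nc = G[np.ix_(core, core)], G[np.ix_(non, core)]
d_loop = apy_diagonal_loop(np.diag(G)[non], G_nc, np.linalg.inv(G_cc))
d_chol = apy_diagonal_chol(np.diag(G)[non], G_nc, G_cc)
M_nn_inv_diag = 1.0 / d_chol                          # only the diagonal of M_nn^{-1} is stored
```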

Quantitative Performance Data

Table 1: Computational Efficiency of G_APY vs. Full G Inversion

| Population Size (m) | Core Size (c) | Full G Inversion Time (hrs) | G_APY Inversion Time (hrs) | Memory Usage Reduction (%) | Predictive Accuracy (r) |
|---|---|---|---|---|---|
| 50,000 | 6,000 | 8.2 | 0.5 | 75 | 0.73 |
| 100,000 | 8,000 | 64.0 | 1.1 | 84 | 0.71 |
| 500,000 | 10,000 | (Infeasible) | 4.8 | 96 | 0.68 |
| 1,000,000 | 15,000 | (Infeasible) | 12.5 | 98 | 0.66 |

Note: Times are approximate and hardware-dependent. Accuracy is correlation between genomic and observed breeding values.


The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Computational Tools

| Item/Category | Specific Example/Software | Function in G_APY Construction |
|---|---|---|
| Genotype Data Management | PLINK, BCFtools, GCTA | Formats, filters, and manipulates raw SNP data into the centered/scaled Z matrix. |
| Core Selection Algorithm | scikit-learn (Python), R stats | Implements PCA and k-means++ clustering for optimal, representative core selection. |
| High-Performance Linear Algebra | Intel MKL, OpenBLAS, LAPACK | Provides optimized routines (Cholesky: dpotrf, inversion: dpotri) for inverting G_cc. |
| Sparse Matrix Library | Eigen (C++), SciPy.sparse (Python) | Efficiently stores and computes the final sparse G_APY⁻¹ matrix. |
| Programming Environment | R (rrBLUP, APY), Python (NumPy, pandas), Fortran | Scripting environment to implement the APY protocol and integrate with GBLUP solvers. |
| Validation Software | preGSf90, BLUPF90 family, custom scripts | Runs GBLUP with G_APY⁻¹ to validate predictive accuracy and computational gains. |

Application Notes

Within the broader thesis on the APY (Algorithm for Proven and Young) algorithm for large-scale Genomic Best Linear Unbiased Prediction (GBLUP) implementation, Step 3 is the computational keystone. The APY algorithm overcomes the cubic computational bottleneck (O(n³)) of inverting the standard genomic relationship matrix (G) by partitioning animals into a core group (c) and a non-core group (n). This allows G_APY⁻¹ to be computed directly, in time linear in the number of genotyped individuals.

The critical formula for achieving this is G_APY⁻¹ = M = [ M_cc M_cn ; M_nc M_nn ], where:

- M_cc = G_cc⁻¹ + G_cc⁻¹ G_cn D⁻¹ G_nc G_cc⁻¹

- M_cn = -G_cc⁻¹ G_cn D⁻¹

- M_nc = M_cn′

- M_nn = D⁻¹, with D = diag(d_ii) and d_ii = g_ii - g′_i,c G_cc⁻¹ g_i,c. Here, g_ii is the diagonal element of G for non-core individual i, and g_i,c is the vector of genomic relationships between non-core individual i and all core individuals.

Key Quantitative Summary:

Table 1: Computational Complexity Comparison of G⁻¹ Calculation Methods

| Method | Time Complexity | Space Complexity | Scalability for n > 100k |
|---|---|---|---|
| Direct Inversion | O(n³) | O(n²) | Infeasible |
| APY Inversion (Step 3) | O(n * c²) | O(n * c) | Feasible |
| APY, c = 10k, n = 500k | ~5 x 10¹³ ops | ~40 GB (G_nc in double precision) | Feasible on HPC |
| APY, c = 5k, n = 500k | ~1.25 x 10¹³ ops | ~20 GB (G_nc in double precision) | Feasible |
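The orders of magnitude in the last two rows follow from the complexity expressions, assuming one multiply-add per operation and dense double-precision (8-byte) storage of G_nc; the helper names are hypothetical. The direct-inversion line is included for comparison.

```python
def apy_cost(n, c, bytes_per_value=8):
    """APY step: about n*c^2 operations and n*c stored values (dense G_nc)."""
    return n * c**2, n * c * bytes_per_value


def direct_cost(n, bytes_per_value=8):
    """Direct inversion: about n^3 operations and n^2 stored values."""
    return n**3, n**2 * bytes_per_value


for c in (10_000, 5_000):
    ops, mem = apy_cost(500_000, c)
    print(f"APY, c={c:,}, n=500,000: {ops:.2e} ops, {mem / 1e9:.0f} GB")
ops, mem = direct_cost(500_000)
print(f"Direct, n=500,000: {ops:.2e} ops, {mem / 1e12:.0f} TB")
# APY, c=10,000, n=500,000: 5.00e+13 ops, 40 GB
# APY, c=5,000, n=500,000: 1.25e+13 ops, 20 GB
# Direct, n=500,000: 1.25e+17 ops, 2 TB
```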

Table 2: Impact of Core Size Selection on Inverse Computation

| Core Selection Method | Core Size (c) | Approximation Error | Relative Runtime |
|---|---|---|---|
| Random | 5,000 | Baseline | 1.00 |
| Maximally Related | 5,000 | Reduced by ~15% | 1.02 |
| Minimally Related | 5,000 | Increased by ~20% | 0.99 |
| Based on Genetic Algorithm | 4,000 | Reduced by ~12% | 0.85 |

Experimental Protocols

Protocol 1: Validating the Linearity of the APY Inverse Computation

Objective: To empirically demonstrate that computing G_APY⁻¹ scales linearly with the total number of genotyped animals (n).

Materials: Genotype data (e.g., 50k SNP chip) for n = [10k, 50k, 100k, 500k] individuals. High-performance computing node with ≥ 32 cores and 256 GB RAM.

Method:

- For each population size n, randomly select a fixed core (c = 5,000 animals).

- Construct the required blocks of G (G_cc, G_nc and the diagonal of G_nn) using method 1 of VanRaden (2008). The full n x n G is not needed by APY and, for n = 500k, would not fit in 256 GB of RAM.

- Partition the relationships into the sub-matrices G_cc, G_cn and G_nc.

- Invert G_cc directly (G_cc⁻¹) using a standard library (e.g., Intel MKL).

- For each non-core animal i, calculate d_ii = g_ii - g′_i,c G_cc⁻¹ g_i,c. This is an O(c²) operation per animal.

- Assemble D⁻¹ as a diagonal matrix.

- Compute the four blocks of M (G_APY⁻¹) using the critical formula.

- Record the total wall-clock time for Step 3 only (the steps from inverting G_cc through computing the blocks of M).

Analysis: Plot n vs. computation time. Fit a linear regression to confirm O(n) scaling. Compare the predicted breeding values (EBVs) from a GBLUP model using G_APY⁻¹ vs. a direct G⁻¹ for a subset where direct inversion is possible (n < 20k).
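A small-scale version of the timing experiment, assuming simulated genotypes, a fixed core made of the first 200 animals and population sizes far below those in the protocol (so absolute times mostly reflect overheads). Only G_cc, G_nc and the diagonal of G_nn are built, and the timer covers the steps from inverting G_cc to computing the blocks of M; time_apy_step is a hypothetical name.

```python
import time

import numpy as np


def time_apy_step(n_total, n_core=200, n_snp=500, seed=0):
    """Time the APY inverse steps (invert G_cc, d_ii, D^-1, blocks of M) for one population size."""
    rng = np.random.default_rng(seed)
    M = rng.binomial(2, 0.3, size=(n_total, n_snp)).astype(float)
    p = M.mean(axis=0) / 2
    Z = M - 2 * p                                      # VanRaden method 1 centring
    k = 2 * np.sum(p * (1 - p))
    Zc, Zn = Z[:n_core], Z[n_core:]                    # fixed core: the first n_core animals
    G_cc = Zc @ Zc.T / k + 0.01 * np.eye(n_core)       # small ridge keeps G_cc invertible
    G_nc = Zn @ Zc.T / k
    g_nn = np.sum(Zn * Zn, axis=1) / k + 0.01          # only the diagonal of G_nn is needed

    start = time.perf_counter()
    G_cc_inv = np.linalg.inv(G_cc)                     # O(c^3), independent of n
    A = G_nc @ G_cc_inv                                # G_nc G_cc^-1, O(n c^2)
    d_inv = 1.0 / (g_nn - np.sum(A * G_nc, axis=1))    # D^-1 kept as a vector
    M_cn = -(A.T * d_inv)                              # -G_cc^-1 G_cn D^-1
    M_cc = G_cc_inv - M_cn @ A                         # G_cc^-1 + G_cc^-1 G_cn D^-1 G_nc G_cc^-1
    elapsed = time.perf_counter() - start
    return elapsed, d_inv


sizes = [2_000, 4_000, 8_000]
times = [time_apy_step(n)[0] for n in sizes]
slope, intercept = np.polyfit(sizes, times, 1)         # seconds per animal, fixed overhead
```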

Protocol 2: Benchmarking Accuracy Against Direct Inversion

Objective: To quantify the numerical accuracy of G_APY⁻¹.

Materials: A subset of n = 15,000 animals where direct inversion of G is computationally tractable.

Method:

- Compute the true inverse, G_full⁻¹, via Cholesky decomposition.

- Run Protocol 1 for the same data with varying core sizes (c = 2k, 4k, 6k, 8k) and selection methods (random, maximally unrelated).

- For each resulting G_APY⁻¹, calculate the Frobenius norm of the difference: ‖G_APY⁻¹ - G_full⁻¹‖_F.

- Also compute the correlation between the vectors of EBVs obtained from models using G_APY⁻¹ and G_full⁻¹.

Analysis: Create a table of Frobenius norms and EBV correlations versus core size and selection method. Determine the minimal core size required for a correlation > 0.995.
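The Frobenius-norm part of the analysis, and the search for a minimal core size, can be sketched as below. Assumptions: a small simulated G, random cores of increasing size, and explicit inversion of the implied G_APY, which is only sensible at toy scale; apy_inverse_toy and minimal_core_size are hypothetical names. minimal_core_size takes whatever score is being thresholded, for example EBV correlations keyed by core size.

```python
import numpy as np


def apy_inverse_toy(G, core):
    """Toy-scale G_APY^-1: build the implied G_APY and invert it explicitly."""
    non = np.setdiff1d(np.arange(G.shape[0]), core)
    G_nc = G[np.ix_(non, core)]
    G_apy = G.copy()
    G_apy[np.ix_(non, non)] = G_nc @ np.linalg.inv(G[np.ix_(core, core)]) @ G_nc.T
    G_apy[non, non] = np.diag(G)[non]                 # restore g_ii on the non-core diagonal
    return np.linalg.inv(G_apy)


def minimal_core_size(score_by_core, threshold):
    """Smallest core size whose score (e.g. EBV correlation) exceeds threshold, or None."""
    passing = [c for c, s in sorted(score_by_core.items()) if s > threshold]
    return passing[0] if passing else None


rng = np.random.default_rng(8)
n = 400
M = rng.binomial(2, 0.3, size=(n, 1500)).astype(float)
p = M.mean(axis=0) / 2
Z = M - 2 * p
G = Z @ Z.T / (2 * np.sum(p * (1 - p))) + 0.01 * np.eye(n)   # ridge: centred G is singular
G_full_inv = np.linalg.inv(G)

order = rng.permutation(n)                                    # random core selection
frobenius = {c: np.linalg.norm(apy_inverse_toy(G, order[:c]) - G_full_inv, "fro")
             for c in (50, 100, 200, 400)}
```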

Visualizations

Title: Computational Workflow for Linear-Time G_APY Inverse

Title: Structure of G_APY⁻¹ Matrix and its Components

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for APY Implementation

| Item | Function in APY Research |
|---|---|
| High-Density SNP Chip Data (e.g., Illumina BovineHD) | Raw genotype data for constructing the genomic relationship matrix G. Quality control (call rate, MAF) is essential. |
| Core Selection Script (Python/R) | Algorithm to select the c core animals. Functions may include random sampling, maximizing genetic diversity, or optimizing predictive ability. |
| BLAS/LAPACK Libraries (Intel MKL, OpenBLAS) | Optimized linear algebra libraries for the critical O(c³) inversion of G_cc and the numerous matrix multiplications. |
| Parallel Computing Framework (MPI, OpenMP) | Enables distribution of the d_ii calculations for each non-core animal (the O(n c²) loop) across multiple CPU cores/nodes. |
| Numerical Validation Suite | Scripts to compute Frobenius norms, correlations, and matrix condition numbers to benchmark G_APY⁻¹ against G_full⁻¹. |
| GBLUP Solver (e.g., preGSf90, BLUPF90) | Production-grade software to integrate the computed G_APY⁻¹ into the mixed model equations for genomic prediction. |

The APY (Algorithm for Proven and Young) algorithm is a core computational strategy for enabling genomic BLUP (GBLUP) in massive-scale genotyped populations. Its primary innovation is the approximation of the inverse of the genomic relationship matrix (G⁻¹) by partitioning animals into "core" (c) and "non-core" (nc) groups. This reduces the computational complexity from cubic to linear relative to the number of genotyped individuals.

The APY inverse is defined as: G_APY⁻¹ = [ G_cc⁻¹ 0 ; 0 0 ] + [ -G_cc⁻¹ G_cn ; I ] M_nn⁻¹ [ -G_nc G_cc⁻¹ I ], where M_nn = diag{ g_ii - g'_ic G_cc⁻¹ g_ic } for i ∈ non-core.

Integration into the Mixed Model Equations (MME) for the standard GBLUP model y = Xb + Zu + e (where u ~ N(0, Gσ²_u)) is performed by directly substituting the constructed G_APY⁻¹ for G⁻¹ in the MME.

Table 1: Computational Complexity Comparison of Inverse Methods

| Method | Operation Complexity for n Animals | Memory for G⁻¹ | Suitable Population Size |
|---|---|---|---|
| Direct Inversion | O(n³) | O(n²) | < 50,000 |
| Traditional APY | O(n * c²) | O(n * c) | 50,000 - 1,000,000+ |
| APY-GBLUP in MME | O(n * c²) | O(n * c) | 50,000 - 1,000,000+ |

Table 2: Impact of Core Size Selection on APY-GBLUP Accuracy

| Core Selection Method | Core Size (c) | Approximation Error* | Relative Comp. Time | Predictive Ability (r) |
|---|---|---|---|---|
| Random | 5,000 | 8.7 x 10⁻² | 1.00 (Baseline) | 0.579 |
| Random | 10,000 | 5.2 x 10⁻³ | 3.85 | 0.615 |
| Based on Effective Record Contributions | 10,000 | 4.1 x 10⁻³ | 3.85 | 0.621 |
| Based on Genetic Algorithm | 8,000 | 3.8 x 10⁻³ | 2.56 | 0.623 |

*Measured as mean squared difference between APY G and full G matrix elements.

Detailed Experimental Protocols

Protocol 3.1: Constructing the APY Inverse for MME Integration

Objective: To compute G_APY⁻¹ for substitution in GBLUP MME. Materials: Genotype matrix (Z) for n animals and m SNPs. Procedure:

- Center Genotypes and Build G: Calculate the genomic relationship matrix using method 1 of VanRaden (2008): G = ZZ' / [2∑pᵢ(1-pᵢ)], where Z holds the genotypes centered by 2pᵢ and pᵢ is the allele frequency at SNP i.

- Partition Animals: Divide the n animals into c core and n-c non-core animals. Core selection can be random, based on genetic diversity, or optimal contribution selection.

- Partition G Matrix: Organize G into submatrices: G = [ G_cc, G_cn ; G_nc, G_nn ].

- Invert Core Submatrix: Compute G_cc⁻¹ via Cholesky decomposition.

- Calculate Diagonal Matrix M_nn: For each non-core animal *i*, compute m_ii = g_ii - g'_ic G_cc⁻¹ g_ic, where g_ic is the vector of relationships between non-core animal i and all core animals.

- Assemble APY Inverse: Construct G_APY⁻¹ using the formula in Section 1. Store it in sparse format.

Protocol 3.2: Solving APY-GBLUP Mixed Model Equations

Objective: To obtain genomic estimated breeding values (GEBVs) using APY. Materials: Phenotype vector (y), design matrices X and Z, variance components (σ²_u, σ²_e), and G_APY⁻¹. Procedure:

- Define MME: Set up the MME with the APY inverse:

\[
\begin{bmatrix} X'X & X'Z \\ Z'X & Z'Z + \lambda G_{APY}^{-1} \end{bmatrix}
\begin{bmatrix} \hat{b} \\ \hat{u} \end{bmatrix} =
\begin{bmatrix} X'y \\ Z'y \end{bmatrix}
\]

where λ = σ²_e / σ²_u.

- Apply Preconditioned Conjugate Gradient (PCG): Due to the large, sparse structure of the coefficient matrix, solve the MME using PCG iteration. a. Set initial guesses for b̂ and û. b. Compute the residual vector r. c. Use a diagonal preconditioner (inverse of the diagonal of the MME matrix). d. Iterate until convergence (e.g., ||r||² / ||rhs||² < 10⁻¹²).

- Extract Solution: The converged û vector contains the GEBVs for all n animals.
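A compact PCG sketch following steps a to d, assuming a dense symmetric positive-definite coefficient matrix small enough to hold in memory; a toy G⁻¹ stands in for G_APY⁻¹. Real APY-GBLUP solvers never form the MME explicitly and instead compute matrix-vector products blockwise. pcg is a hypothetical name, and the convergence test mirrors the ||r||²/||rhs||² criterion above.

```python
import numpy as np


def pcg(A, b, tol=1e-12, max_iter=1000):
    """Jacobi-preconditioned conjugate gradient for a symmetric positive-definite A."""
    x = np.zeros_like(b)                  # a. initial guess (zeros) for b-hat and u-hat
    r = b - A @ x                         # b. residual
    m_inv = 1.0 / np.diag(A)              # c. diagonal preconditioner
    z = m_inv * r
    p = z.copy()
    rz = r @ z
    bb = b @ b
    for it in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if (r @ r) / bb < tol:            # d. ||r||^2 / ||rhs||^2 < tol
            return x, it
        z = m_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter


# Toy MME: overall mean plus one record per animal (Z = I), lambda = 1
rng = np.random.default_rng(9)
n = 200
W = rng.standard_normal((n, 1000))
G_inv = np.linalg.inv(W @ W.T / 1000 + 0.01 * np.eye(n))
X, Zd, lam = np.ones((n, 1)), np.eye(n), 1.0
y = 5.0 + rng.standard_normal(n)
lhs = np.block([[X.T @ X, X.T @ Zd], [Zd.T @ X, Zd.T @ Zd + lam * G_inv]])
rhs = np.concatenate([X.T @ y, Zd.T @ y])
solution, n_iter = pcg(lhs, rhs)
b_hat, u_hat = solution[:1], solution[1:]
```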

Protocol 3.3: Validating APY-GBLUP Implementation

Objective: To verify computational efficiency and predictive accuracy. Materials: A dataset with known pedigree, genotypes, and phenotypes. Procedure:

- Cross-Validation: Perform k-fold (e.g., 5-fold) cross-validation, masking phenotypes for animals in the validation set.

- Run Models: Execute both a traditional GBLUP (using direct G⁻¹ if possible) and the APY-GBLUP for each fold.

- Assess Metrics:

- Accuracy: Correlate GEBVs with adjusted phenotypes in the validation set.

- Bias: Regress adjusted phenotypes on GEBVs; slope should be ~1.

- Computational Performance: Record wall-clock time and peak memory usage for both models.

- Compare: Use paired t-tests across folds to compare accuracy and bias between the full and APY models.
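The per-fold metrics need only the standard library. The sketch assumes adjusted phenotypes and GEBVs are available as paired lists for each validation fold; accuracy, bias_slope and paired_t are hypothetical names. paired_t returns the t statistic only; with k folds it is compared against a t distribution with k - 1 degrees of freedom (scipy.stats.ttest_rel computes the same test including a p-value).

```python
import math
from statistics import mean, stdev


def _centred_sums(x, y):
    """Return (sxy, sxx, syy) about the means of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy, sxx, syy


def accuracy(gebv, y_adj):
    """Pearson correlation between GEBVs and adjusted phenotypes in one validation fold."""
    sxy, sxx, syy = _centred_sums(gebv, y_adj)
    return sxy / math.sqrt(sxx * syy)


def bias_slope(gebv, y_adj):
    """Slope of the regression of adjusted phenotypes on GEBVs (about 1 means no bias)."""
    sxy, sxx, _ = _centred_sums(gebv, y_adj)
    return sxy / sxx


def paired_t(metric_full, metric_apy):
    """Paired t statistic for per-fold differences (full model minus APY model)."""
    diffs = [a - b for a, b in zip(metric_full, metric_apy)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
```

With per-fold accuracies collected in two lists, paired_t(acc_full, acc_apy) gives the statistic for comparing the full and APY models; the same call works for per-fold bias slopes.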

Mandatory Visualizations

Title: APY Inverse Construction Workflow for GBLUP

Title: MME Integration and Solution Process for APY-GBLUP

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for APY-GBLUP Implementation

| Item / Software | Category | Function in APY-GBLUP Research |
|---|---|---|
| PLINK 2.0 | Genotype Data Management | Processes raw genotype data, performs quality control (QC), and calculates allele frequencies essential for building G. |
| BLAS/LAPACK Libraries (Intel MKL, OpenBLAS) | Numerical Computation | Provides optimized routines for the dense matrix operations (e.g., G_cc inversion) within the APY algorithm. |
| Preconditioned Conjugate Gradient (PCG) Solver | Linear Algebra Solver | The iterative method of choice for solving the large, sparse MME after integrating G_APY⁻¹. Custom implementation is often required. |
| Sparse Matrix Format (CSR, CSC) | Data Structure | Efficiently stores G_APY⁻¹ (dense core blocks plus a diagonal non-core block) in memory, crucial for handling millions of animals. |
| Core Selection Scripts (R, Python) | Analysis Pipeline | Implements algorithms (random, genetic diversity, optimization) to define the core group, impacting approximation accuracy. |
| Variance Component Estimation Software (AIREMLF90, DMU) | Statistical Genetics | Estimates σ²_u and σ²_e prior to setting up MME. Can be run on a subset of data before full APY-GBLUP application. |
| High-Performance Computing (HPC) Cluster | Infrastructure | Provides the necessary parallel computing resources and memory to run APY-GBLUP on national-scale genotypes. |

This Application Note provides a practical guide for implementing Genomic Best Linear Unbiased Prediction (GBLUP) at scale, a core component for genomic prediction and genome-wide association studies (GWAS) in large cohorts. Efficient computation of the Genomic Relationship Matrix (GRM) and solving the associated mixed linear models are primary bottlenecks. Within the broader research thesis on the APY (Algorithm for Proven and Young) algorithm, these protocols demonstrate its utility in reducing computational complexity from cubic to linear for genotyped individuals, enabling analysis of cohorts exceeding 1 million individuals or organisms by exploiting inherent genetic core-periphery structures.

Key Computational Workflow

The standard GBLUP model is defined as y = Xb + Zu + e, where y is the phenotypic vector, b is the vector of fixed effects, u is the vector of genomic breeding values ~N(0, Gσ²_u), and e is the residual. G is the GRM. The APY algorithm partitions individuals into core (c) and non-core (n) groups, approximating G⁻¹ as:

G_APY⁻¹ = [ G_cc⁻¹ 0 ; 0 0 ] + [ M' ; I ] D⁻¹ [ M I ],

where M = -G_nc G_cc⁻¹ and D is a diagonal matrix with d_ii = g_ii - g'_i,c G_cc⁻¹ g_i,c for each non-core individual i. This allows for inverse operations only on the core subset.

Diagram 1: APY-GBLUP computational workflow for large cohorts.

Protocol 1: Constructing the APY-Inverse for a Large Cohort

Objective: To construct the approximate inverse of the Genomic Relationship Matrix (G) for a cohort of 500,000 dairy cattle using the APY algorithm.

Materials & Software:

- High-performance computing cluster (Linux).

- Genotype files in PLINK 1.9 binary format (`cohort.bed`, `cohort.bim`, `cohort.fam`).

- Phenotypic data file (`phenotypes.txt`).

- Software: `PLINK 1.9/2.0`, `preGSF90` (or equivalent BLUPF90 family), custom R/Python scripts.

Procedure:

- Quality Control (QC) and Core Selection:

- Perform standard QC on genotype data: `plink --bfile cohort --maf 0.01 --geno 0.05 --mind 0.05 --make-bed --out cohort_qc`.

- Select the core group. This can be based on:

- Algorithmic: K-means clustering on principal components (PCs) from `plink --pca`.

- Experimental Design: Animals with the most reliable, high-accuracy EBVs or those representing founder lineages.

- Arbitrary but systematic: Random selection ensuring representation across herds and birth years.

- Create a text file, `core_ids.txt`, with the IDs of the selected 10,000 core animals.

Calculate G_cc and G_nc:

- Use GCTA (for example its `--make-grm-part` and `--make-grm-bin` options) or `preGSF90` to efficiently compute GRM partitions. A custom R script can also be used:

```r
# VanRaden (2008) method 1: centre the genotype matrix Z (animals x SNPs, coded 0/1/2)
p <- colMeans(Z) / 2                          # allele frequencies
Z_c <- sweep(Z, 2, 2 * p)                     # subtract 2p from each SNP column
k <- 2 * sum(p * (1 - p))                     # scaling constant

# Calculate submatrices (core_idx / noncore_idx: row indices of core / non-core animals)
G_cc <- tcrossprod(Z_c[core_idx, ]) / k
G_nc <- tcrossprod(Z_c[noncore_idx, ], Z_c[core_idx, ]) / k
g_nn <- rowSums(Z_c[noncore_idx, ]^2) / k     # only the diagonal of G_nn is needed
diag(G_cc) <- diag(G_cc) + 0.01               # small regularization for invertibility
g_nn <- g_nn + 0.01                           # same regularization on the non-core diagonal
```

Form G_APY⁻¹:

- Invert `G_cc` and apply the APY formula:

```r
# Construct the APY inverse (Matrix package for sparse storage)
library(Matrix)
n_nc <- length(noncore_idx)
G_cc_inv <- chol2inv(chol(G_cc))                         # core inverse via Cholesky
A <- G_nc %*% G_cc_inv                                   # G_nc G_cc^-1
D_inv <- Diagonal(x = 1 / (g_nn - rowSums(A * G_nc)))    # D^-1, d_i = g_ii - g_i' G_cc^-1 g_i

# First term: [G_cc^-1 0; 0 0]
TL <- bdiag(G_cc_inv, Matrix(0, n_nc, n_nc))

# Second term: [-G_nc G_cc^-1, I]' D^-1 [-G_nc G_cc^-1, I]
M <- cbind(Matrix(-A, sparse = TRUE), Diagonal(n_nc))
BR <- t(M) %*% D_inv %*% M

G_APY_inv <- TL + BR
ids <- c(rownames(Z)[core_idx], rownames(Z)[noncore_idx])
dimnames(G_APY_inv) <- list(ids, ids)
writeMM(G_APY_inv, file = "G_APY_inv.mtx")              # write in Matrix Market format
```

Protocol 2: Solving the Large-Scale GBLUP Mixed Model

Objective: To estimate genomic breeding values (GEBVs) for milk yield using the APY-derived inverse relationship matrix.

Procedure:

- Prepare Parameter File for BLUPF90:

- Create a parameter file, `param_APY.txt`, for software such as `blupf90+` or `airemlf90`.

Execute the Analysis:

- Run the solver: `blupf90+ param_APY.txt`. Using `G_APY_inv.mtx` directly replaces the need to compute the full G⁻¹.

Validate Approximation:

- For a random subset of 2,000 animals, compare GEBVs from the full model (if computationally feasible) and the APY model. Calculate the correlation and regression slope.

Results and Performance Data

Table 1: Computational Performance: Full GBLUP vs. APY-GBLUP

| Metric | Full GBLUP (N=50k Subset) | APY-GBLUP (N=500k, Core=10k) | Notes |
|---|---|---|---|
| GRM Construction Time | ~4.5 hours | ~2 hours (Core) + ~5 hours (N-C) | Parallelized on 32 cores. |
| Matrix Inversion Time | ~6 hours (Memory: 20GB) | ~2 minutes (for G_cc) | APY avoids large matrix inversion. |
| Mixed Model Solve Time | ~1.5 hours | ~45 minutes | Using iterative solver (PCG). |
| Total Wall-Clock Time | ~12 hours | ~8 hours | Enables scaling beyond RAM limits. |
| Estimated Correlation (GEBVs) | 1.00 (Reference) | 0.993 (± 0.004) | Validation on independent subset. |

Table 2: Research Reagent Solutions Toolkit

| Item | Function/Description | Example Product/Code |
|---|---|---|
| Genotype Dataset | Raw SNP data for GRM construction. | PLINK binary files (.bed, .bim, .fam) |
| Phenotype File | Trait measurements and fixed effects. | CSV file with IDs, covariates, phenotypes |
| Core Selection Script | Algorithmically defines the core subset. | Python script for K-means on PCs |
| APY Inverse Constructor | Computes G_APY⁻¹ from genotype partitions. | Custom R script (as in Protocol 1) |
| Sparse Matrix Library | Handles large, sparse G_APY⁻¹ efficiently. | R Matrix package; Python scipy.sparse |
| Mixed Model Solver | Solves the MME for GEBV estimation. | BLUPF90 family, GCTA, or custom PCG solver |
| Validation Pipeline | Compares model outputs for accuracy. | R Markdown script calculating correlations & biases |

Diagram 2: Genetic space partitioning in the APY algorithm.

The Algorithm for Proven and Young (APY) is a pivotal methodological advancement enabling the practical application of Genomic Best Linear Unbiased Prediction (GBLUP) to very large genotyped populations. By partitioning animals into core and non-core groups, APY leverages the principle that the genomic information of non-core animals can be approximated through their relationships to a carefully chosen core subset. This reduces the cost of inverting the genomic relationship matrix (G) from cubic in the total number of genotyped animals to cubic only in the core size and linear in the number of non-core animals. Within the broader thesis on APY algorithm research, this document provides detailed application notes and protocols for its implementation in three key software environments: the industry-standard BLUPF90 suite, the commercial package ASReml, and custom R/Python scripts for maximum flexibility and research insight.
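The complexity argument can be made concrete with leading-order operation counts. This is a back-of-the-envelope sketch, assuming a dense inverse of the full G costs about n³ operations while APY costs about c³ for G_cc plus roughly c² per non-core animal (one product with G_cc⁻¹ each). It ignores constant factors, memory traffic, and the cost of building G itself, so it will not reproduce the wall-clock figures in the tables. The function name `inversion_cost` is hypothetical.

```python
def inversion_cost(n_total, n_core):
    """Leading-order operation counts for inverting G.

    Full GBLUP: dense inverse of an n x n matrix, ~n^3.
    APY: dense inverse of G_cc, ~c^3, plus ~c^2 per non-core animal.
    """
    full = float(n_total) ** 3
    apy = float(n_core) ** 3 + (n_total - n_core) * float(n_core) ** 2
    return full, apy

# Scenarios matching the population sizes discussed in this section, core = 10k
for n in (100_000, 1_000_000, 2_000_000):
    full, apy = inversion_cost(n, 10_000)
    print(f"n={n:>9,}: full ~{full:.1e}, APY ~{apy:.1e}, ratio ~{full / apy:,.0f}x")
```

Because the APY term grows linearly once the core size is fixed, the ratio widens as the genotyped population grows.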

Comparative Software Implementation

| Feature / Aspect | BLUPF90 Suite (preGSf90, blupf90+) | ASReml (v4.2+) | Custom R/Python Scripts |
|---|---|---|---|
| Core APY Functionality | Native, integrated via OPTION SNP_file APY | Precomputed APY inverse supplied via ginverse() and gsoln() | Fully manual, researcher-implemented |
| Primary Use Case | Large-scale national genetic evaluations | Experimental breeding research, plant/animal | Algorithm development, benchmarking, prototyping |
| Key Strength | Extremely efficient for millions of animals | Seamless integration with mixed model formulas | Complete control, transparency, and customization |
| Computational Engine | Fortran-based, highly optimized | Proprietary, highly efficient | R: Matrix/rrBLUP; Python: NumPy/SciPy/pySPACE |
| Core Selection Methods | Simple (random, oldest), external file | Must be pre-calculated and supplied | Full flexibility (algorithmic, random, optimization) |
| Learning Curve | Moderate (file preparation crucial) | Steep (license, syntax) | Very steep (requires coding and linear algebra expertise) |
| Cost | Free | Commercial license | Free (open-source libraries) |

Table 2: Typical Performance Metrics (Theoretical vs. Observed)

| Scenario (Animals / Genotypes) | Full G Inverse Time | APY Inversion Time (Core=10k) | Memory Reduction Factor |
|---|---|---|---|
| 100,000 / 50k SNP | ~2 hours | ~15 minutes | ~5x |
| 1,000,000 / 50k SNP | Infeasible (>1 week) | ~3-5 hours | ~50x |
| 2,000,000 / 50k SNP | Infeasible even on HPC | ~8-12 hours | ~100x |

Note: Performance is hardware-dependent. Metrics assume efficient core selection and optimized software settings.

Experimental Protocols

Protocol 3.1: Implementing APY in the BLUPF90 Suite

Objective: To perform a single-step GBLUP evaluation using the APY approximation for a population exceeding 500,000 genotyped animals.

Materials: Genotype files (e.g., PLINK .bed), pedigree file, phenotype file, parameter file.

Procedure:

- Core Selection: Create a text file (`core_list.txt`) containing the IDs of the core animals (e.g., 10,000 animals). Selection can be based on algorithms (e.g., maximizing genetic diversity) or pragmatic rules (oldest, most connected).

- Prepare Genomic Relationship Matrix: Use `preGSf90` to create the sparse APY inverse directly.

- Run preGSf90: Execute the program to set up the mixed model equations with the APY inverse.

- Run Iterative Solver: Use `blupf90+` or `mcgblup90` to solve the equations and obtain genomic estimated breeding values (GEBVs).

- Output: GEBVs for all animals are written to files such as `solutions`.

- Validation: Compare accuracies and predictive ability with a smaller subset using the full G inverse, or perform cross-validation.
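The pragmatic "oldest animals" rule from the core selection step can be scripted as below. This is a sketch under stated assumptions: the input is a list of (ID, birth year) pairs, and `core_list.txt` is written with one ID per line, which is an assumed layout; check the BLUPF90 documentation for the exact format `preGSf90` expects. The function name `write_core_list` and the example animals are hypothetical.

```python
import os
import tempfile
from pathlib import Path

def write_core_list(animals, n_core, path):
    """Write the IDs of the n_core oldest animals to `path`, one ID per line.

    `animals` is an iterable of (animal_id, birth_year) pairs; ties on birth
    year are broken by ID so the selection is reproducible.
    """
    ranked = sorted(animals, key=lambda a: (a[1], str(a[0])))
    core_ids = [str(a[0]) for a in ranked[:n_core]]
    Path(path).write_text("\n".join(core_ids) + "\n")
    return core_ids

# Hypothetical genotyped animals: (ID, birth year)
animals = [("A3", 2015), ("A1", 2012), ("A4", 2019), ("A2", 2012), ("A5", 2017)]
out = os.path.join(tempfile.gettempdir(), "core_list.txt")
print(write_core_list(animals, n_core=3, path=out))
```

Sorting by birth year is only one of the rules mentioned; an algorithmic selection would replace the `sorted` call while keeping the same output file.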

Protocol 3.2: Implementing APY in ASReml-R

Objective: To fit a single-trait GBLUP model using the APY approximation in ASReml for a dataset of ~200,000 genotypes.

Materials: A licensed ASReml-R installation, data frames for phenotypes and pedigree, and genomic relationships partitioned into core and non-core animals.

Procedure:

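- Partition Data and Compute Matrices: In R, compute G_cc, G_cn, and the diagonal of G_nn.

- Construct the APY Inverse Directly: Assemble the sparse inverse matrix using the formula below, where M = G_cc⁻¹G_cn and D keeps only the diagonal of G_nn − G_nc G_cc⁻¹ G_cn:

$$G_{APY}^{-1} = \begin{bmatrix} G_{cc}^{-1} + M D^{-1} M^\top & -M D^{-1} \\ -D^{-1} M^\top & D^{-1} \end{bmatrix}, \quad M = G_{cc}^{-1} G_{cn}, \quad D = \operatorname{diag}\left(G_{nn} - G_{nc} G_{cc}^{-1} G_{cn}\right)$$

- Fit ASReml Model: Supply the sparse inverse matrix using `ginverse()`.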
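Before exporting the matrix to ASReml, the block formula can be sanity-checked numerically. The minimal NumPy sketch below (dense, for small test data only) assembles G_APY⁻¹ from the blocks and confirms a known property: its inverse reproduces G_cc, G_cn and the diagonal of G_nn exactly, while only the non-core off-diagonal relationships are approximated. The simulated genotypes, the small ridge added to keep G positive definite, and the function name `apy_inverse_blocks` are assumptions for illustration.

```python
import numpy as np

def apy_inverse_blocks(G, core):
    """Assemble G_APY^-1 from the block formula (dense, for checking only).

    Rows/columns of the result are ordered [core, non-core].
    """
    n = G.shape[0]
    core = np.asarray(core)
    noncore = np.setdiff1d(np.arange(n), core)
    G_cc = G[np.ix_(core, core)]
    G_cn = G[np.ix_(core, noncore)]
    G_cc_inv = np.linalg.inv(G_cc)
    M = G_cc_inv @ G_cn                                   # c x (n - c)
    # D = diag(G_nn - G_nc G_cc^-1 G_cn): only the diagonal is kept
    d = np.diag(G)[noncore] - np.einsum("ij,ij->j", G_cn, M)
    D_inv = np.diag(1.0 / d)
    top = np.hstack([G_cc_inv + M @ D_inv @ M.T, -M @ D_inv])
    bottom = np.hstack([-D_inv @ M.T, D_inv])
    return np.vstack([top, bottom]), core, noncore

# Simulated data: 60 animals, 200 SNPs coded 0/1/2; first 20 animals are core
rng = np.random.default_rng(0)
X = rng.integers(0, 3, size=(60, 200)).astype(float)
p = X.mean(axis=0) / 2
Z = X - 2 * p
G = Z @ Z.T / (2 * np.sum(p * (1 - p))) + 0.01 * np.eye(60)  # ridge: centred G is singular
Ginv_apy, core, noncore = apy_inverse_blocks(G, core=np.arange(20))
G_apy = np.linalg.inv(Ginv_apy)
print(np.allclose(G_apy[:20, :20], G[:20, :20]),
      np.allclose(np.diag(G_apy)[20:], np.diag(G)[20:]))
```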

Protocol 3.3: Custom Implementation of APY in Python

Objective: To prototype and benchmark the APY algorithm from first principles for a synthetic dataset.

Materials: Python 3.8+ with NumPy, SciPy, and pandas libraries.

Procedure:

- Generate/Import Synthetic Data: Create a synthetic genotype matrix.

- Core Selection Function: Implement a random or algorithmic selection.

- Compute APY Inverse: Assemble G_apy^-1 from G_cc^-1, G_nc, and the non-core diagonal D.

- Integrate into Mixed Model: Set up and solve Henderson's Mixed Model Equations (HMME) using the constructed G_apy^-1.
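The four steps above can be prototyped end to end as follows. This is a minimal sketch, not a production implementation: it assumes a VanRaden G with a small ridge, a random core, one record per animal, the model y = 1μ + u + e, and a variance ratio λ = σe²/σu² = 1 (heritability 0.5); all of these, and the function names `vanraden_G`, `apy_inverse` and `solve_gblup`, are illustrative assumptions. G_APY⁻¹ is built in the factored form blockdiag(G_cc⁻¹, 0) + W′D⁻¹W with W = [−G_nc G_cc⁻¹, I], which is algebraically the same block formula used in Protocol 3.2.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve

def vanraden_G(X):
    """VanRaden (2008) G from a 0/1/2 genotype matrix (animals x SNPs)."""
    p = X.mean(axis=0) / 2.0
    Z = X - 2.0 * p
    return Z @ Z.T / (2.0 * np.sum(p * (1.0 - p)))

def apy_inverse(G, core):
    """Sparse G_APY^-1 returned in the original animal order."""
    n = G.shape[0]
    noncore = np.setdiff1d(np.arange(n), core)
    G_cc_inv = np.linalg.inv(G[np.ix_(core, core)])
    G_nc = G[np.ix_(noncore, core)]
    P = G_nc @ G_cc_inv                                   # (n-c) x c
    d = np.diag(G)[noncore] - np.einsum("ij,ij->i", P, G_nc)
    W = sp.hstack([sp.csr_matrix(-P), sp.identity(len(noncore), format="csr")],
                  format="csr")
    top_left = sp.block_diag([sp.csr_matrix(G_cc_inv),
                              sp.csr_matrix((len(noncore), len(noncore)))])
    A = (top_left + W.T @ sp.diags(1.0 / d) @ W).tocsr()  # ordered [core, non-core]
    back = np.argsort(np.concatenate([core, noncore]))    # position of each animal
    return A[back][:, back]

def solve_gblup(y, Ginv, lam):
    """Solve Henderson's MME for y = 1*mu + u + e with one record per animal."""
    n = len(y)
    X = sp.csr_matrix(np.ones((n, 1)))
    Z = sp.identity(n, format="csr")
    lhs = sp.bmat([[X.T @ X, X.T @ Z],
                   [Z.T @ X, Z.T @ Z + lam * Ginv]], format="csc")
    rhs = np.concatenate([X.T @ y, Z.T @ y])
    sol = spsolve(lhs, rhs)
    return sol[0], sol[1:]

# Synthetic data: 300 animals, 1,000 SNPs, random core of 100
rng = np.random.default_rng(42)
n, m, c = 300, 1000, 100
X_geno = rng.binomial(2, rng.uniform(0.1, 0.9, size=m), size=(n, m)).astype(float)
G = vanraden_G(X_geno) + 0.01 * np.eye(n)                 # ridge: centred G is singular
core = np.sort(rng.choice(n, size=c, replace=False))
Ginv_apy = apy_inverse(G, core)
u_true = rng.multivariate_normal(np.zeros(n), G)
y = 10.0 + u_true + rng.normal(size=n)
mu_hat, gebv = solve_gblup(y, Ginv_apy, lam=1.0)
print(f"mu = {mu_hat:.2f}, corr(GEBV, true u) = {np.corrcoef(gebv, u_true)[0, 1]:.2f}")
```

The direct sparse solve is only practical at prototype scale; for large n the same left-hand side would be passed to an iterative solver such as PCG.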

Visualization of Workflows and Relationships

Title: APY-GBLUP Computational Workflow

Title: Matrix Transformation in APY Algorithm

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Research Reagent Solutions for APY Implementation Studies

| Item / Solution | Function in APY Research | Example / Specification |
|---|---|---|
| Genotype Datasets | Raw input for constructing G. Requires high-density SNP panels. | Illumina BovineHD (777k), PorcineGGP50K, synthetic datasets for scaling tests. |
| Phenotype & Pedigree Databases | Essential for fitting the mixed model and validating accuracy. | National evaluation data (e.g., CDCB, Interbull), experimental field trial data. |
| High-Performance Computing (HPC) Cluster | Mandatory for runtime comparisons and large-scale analysis. | Configuration: 64+ cores, 512 GB+ RAM, high-speed parallel filesystem. |
| Core Selection Algorithm Code | Determines the efficiency and accuracy of the APY approximation. | Custom R/Python scripts for Algorithm A (iterative peeling), B (random), C (connected). |
| Benchmarking Software | Provides gold-standard results for comparing APY accuracy. | BLUPF90 with full G (for smaller N), or proven published results from literature. |
| Linear Algebra Libraries | Backbone for custom script development and matrix operations. | R: Matrix, rrBLUP; Python: NumPy, SciPy.sparse, CuPy (for GPU). |
| Profiling & Monitoring Tools | To measure computational cost (time, memory) for each implementation step. | Linux `time`, Intel VTune, R profvis, Python cProfile, cluster job logs. |
| Visualization Packages | For creating graphs of accuracy vs. core size and computational scaling plots. | R: ggplot2, plotly; Python: matplotlib, seaborn. |

Optimizing APY Performance: Solving Core Selection, Scaling, and Accuracy Issues

Within the broader thesis research on the APY (Algorithm for Proven and Young) algorithm for large-scale Genomic Best Linear Unbiased Prediction (GBLUP) implementation, the selection of an optimal core subset is a critical design decision. The APY algorithm approximates the genomic relationship matrix (G) by partitioning animals into core and non-core groups, enabling the inversion of G at a reduced computational cost. The accuracy and efficiency of this approximation hinge on the strategy used to select the core subset. This application note details protocols for three primary strategies (Random, Genetic Diversity-based, and Algorithmic selection), providing a framework for researchers to implement and evaluate these methods in genomic prediction and drug development contexts.

The following table summarizes the key characteristics, advantages, and quantitative performance metrics associated with each core selection strategy, as evidenced by recent studies in livestock and plant genomics.

Table 1: Comparative Analysis of Core Subset Selection Strategies

| Strategy | Primary Objective | Typical Workflow | Reported Computational Savings (vs. Full GBLUP) | Reported Predictive Accuracy (r²) | Key Advantage | Major Limitation |
|---|---|---|---|---|---|---|
| Random Selection | Baseline comparison; simplicity. | Random sampling without replacement from the full population. | ~90-95% | 0.75 - 0.85 (high-LD populations) | Extremely fast; trivial to implement. | Unstable and suboptimal; highly variable accuracy. |
| Genetic Diversity (e.g., K-means clustering) | Maximize genetic representativeness. | 1. Perform PCA on genomic data. 2. Cluster individuals (e.g., K-means) in PC space. 3. Select centroids or random members from clusters. | ~85-92% | 0.82 - 0.90 | Biologically informed; stable and robust accuracy. | Requires eigen-decomposition/PCA, which is O(n³) for large n. |
| Algorithmic (e.g., Mean of Covariance, Optimization) | Optimize a specific statistical criterion (e.g., trace of matrix). | Iterative algorithm to maximize the trace of the submatrix covariance or minimize the prediction error variance. | ~80-90% | 0.85 - 0.93 | Theoretically optimal for the chosen criterion. | Computationally intensive selection step; may overfit. |

Detailed Experimental Protocols

Protocol 3.1: Random Core Selection

Purpose: To establish a baseline for computational performance and prediction accuracy.

Materials: Genotype matrix (X) of dimension n x m (n individuals, m markers).

Procedure:

- Determine the desired core subset size (c). Empirical studies suggest c = 5,000 - 10,000 can effectively approximate G for n > 100,000.

- Generate a random permutation of the n indices with a seeded pseudo-random number generator (seed = [VALUE]) so that the selection is reproducible.

- Select the first c indices from the randomized list.

- Partition the genotype matrix X into X_core (dimension c x m) and X_non-core (dimension (n-c) x m).

- Proceed with APY-GBLUP implementation using this partition.
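The random partition can be written in a few lines of NumPy. This is a minimal sketch: the function name `random_core_split` is hypothetical, and the seed 2024 in the usage line is an arbitrary example standing in for the protocol's [VALUE].

```python
import numpy as np

def random_core_split(X, c, seed):
    """Randomly partition animals into a core of size c and n - c non-core.

    Returns the core/non-core row indices and the matching genotype blocks.
    """
    n = X.shape[0]
    if not 0 < c < n:
        raise ValueError("core size must satisfy 0 < c < n")
    rng = np.random.default_rng(seed)        # seeded for reproducibility
    perm = rng.permutation(n)                # n unique indices in random order
    core_idx = np.sort(perm[:c])             # first c indices form the core
    noncore_idx = np.sort(perm[c:])
    return core_idx, noncore_idx, X[core_idx], X[noncore_idx]

# Usage on a simulated 1,000-animal x 50-SNP matrix
X = np.random.default_rng(0).integers(0, 3, size=(1000, 50))
core_idx, noncore_idx, X_core, X_noncore = random_core_split(X, c=100, seed=2024)
print(X_core.shape, X_noncore.shape)
```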

Protocol 3.2: Genetic Diversity-Based Selection via K-means Clustering

Purpose: To select a core subset that maximizes the genetic representation of the population.

Materials: Genotype matrix (X); software for PCA (e.g., PLINK, GCTA) and clustering (R, Python).

Procedure:

- Quality Control & Pruning: Filter X for minor allele frequency (MAF > 0.01) and prune for linkage disequilibrium (LD r² < 0.5 within 50-SNP windows). This yields X_pruned.

- Principal Component Analysis (PCA): Compute the first k principal components (PCs) from X_pruned. Typically, k = 50-100 is sufficient to capture population structure.

- Clustering: Apply the K-means clustering algorithm on the n x k matrix of PC scores. Set the number of clusters (K) equal to the desired core size (c).

- Core Selection: For each of the c clusters, select the individual closest to the cluster centroid (or one at random from the cluster) to form the core subset.

- Partitioning: Map the selected individuals back to the original, full-density genotype matrix X to create X_core and X_non-core.
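The PCA, clustering, and centroid-nearest selection steps can be sketched in NumPy as below. Assumptions: X has already passed QC and LD pruning, and the data are small enough for a full SVD and an n x c distance array; the K-means is a plain Lloyd iteration written out for transparency, whereas a dedicated implementation (e.g. scikit-learn's `KMeans`) would be used in practice. Because empty clusters are skipped, the result can contain slightly fewer than c animals. The function name `kmeans_core` is hypothetical.

```python
import numpy as np

def kmeans_core(X, c, k=10, n_iter=50, seed=0):
    """Select up to c core animals by K-means (K = c) on the first k PCs.

    Sketch only: assumes X is an already QC'd and LD-pruned 0/1/2 genotype
    matrix small enough for a full SVD and an n x c distance array.
    """
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=0)                          # centre each SNP
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    pcs = U[:, :k] * s[:k]                           # n x k matrix of PC scores

    def assign(centroids):
        # squared Euclidean distance from every animal to every centroid
        dist = ((pcs[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        return dist.argmin(axis=1)

    centroids = pcs[rng.choice(len(pcs), size=c, replace=False)]  # random init
    for _ in range(n_iter):                          # Lloyd iterations
        labels = assign(centroids)
        for j in range(c):
            members = labels == j
            if members.any():                        # empty clusters keep their centroid
                centroids[j] = pcs[members].mean(axis=0)
    labels = assign(centroids)

    core = []
    for j in range(c):
        members = np.flatnonzero(labels == j)
        if members.size:                             # member closest to centroid j
            d = ((pcs[members] - centroids[j]) ** 2).sum(axis=1)
            core.append(members[d.argmin()])
    return np.sort(np.array(core, dtype=int))

# Usage: 500 simulated animals, 200 SNPs, target core of 50 using 10 PCs
rng = np.random.default_rng(1)
X = rng.integers(0, 3, size=(500, 200)).astype(float)
core = kmeans_core(X, c=50, k=10)
print(len(core), "core animals selected")
```

Picking the member nearest each centroid (rather than a random member) makes the selection deterministic for a given seed, since each cluster contributes exactly one representative.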

Protocol 3.3: Algorithmic Selection via Mean of Covariance (Mc)

Purpose: To algorithmically select a core subset that maximizes the trace of the genomic relationship submatrix.

Materials: Genomic relationship matrix G (or its approximation).

Procedure:

- Compute the full G matrix using the method of VanRaden (2008): $G = \frac{ZZ^\top}{2\sum_i p_i(1-p_i)}$, where p_i is the allele frequency of SNP i and Z is the 0/1/2 genotype matrix centered by subtracting 2p_i from column i.

- Initialize an empty core list and a candidate list containing all n individuals.

- Iterative Selection: a. For each candidate j, calculate the mean covariance (Mc) between candidate j and the current core: Mc(j) = mean(G(j, core)). b. Select the candidate with the minimum Mc(j) value. This individual is genetically most dissimilar to the current average core. c. Add this selected individual to the core list and remove it from the candidate list.