Navigating the Gray Zone: Strategies for Managing Low-Coverage Regions in Whole Exome Sequencing and Their Impact on VUS Interpretation


Abstract

This article provides a comprehensive guide for researchers and clinical scientists on the critical challenge of low-coverage regions in Whole Exome Sequencing (WES) and their profound impact on the accurate calling and interpretation of Variants of Uncertain Significance (VUS). We explore the foundational causes of coverage gaps, from probe design limitations to genomic complexity. The article details current methodological approaches for mitigation, including wet-lab optimization and sophisticated bioinformatic imputation tools. We offer practical troubleshooting frameworks for assay optimization and data analysis. Finally, we present validation strategies and comparative analyses of emerging technologies, such as long-read and genome sequencing, to resolve these ambiguous regions. This guide aims to equip professionals with the knowledge to improve variant classification accuracy, enhance research reproducibility, and inform robust drug development pipelines.

Understanding the Blind Spots: Why Low-Coverage Regions Plague WES and Obscure VUS Calling

Technical Support Center: Troubleshooting Low Coverage & VUS Ambiguity

FAQs & Troubleshooting Guides

Q1: My Whole Exome Sequencing (WES) data shows a high number of Variants of Uncertain Significance (VUS) in genes of interest. How do I determine if this is due to insufficient coverage?

A: First, generate a per-base coverage report for your target regions. Use tools like bedtools coverage or GATK DepthOfCoverage. A high VUS count coupled with many regions below 20-30x coverage strongly suggests a coverage gap issue. Check if the VUS calls are specifically clustered in exons with median coverage below your validated threshold (often 20x). If so, these VUS are technically ambiguous and require follow-up confirmation.

Q2: What are the primary technical causes of low-coverage regions in standard WES? A: The main causes are:

- Extreme GC-content Regions: Library preparation and hybridization capture are less efficient for sequences with very high or very low GC content.

- Pseudogenes/Homologous Sequences: Probes may bind non-specifically, or reads may map ambiguously, leading to coverage dropouts.

- Poor Probe Design: Inefficient capture baits for certain exonic regions.

- Suboptimal Input DNA Quality/Quantity: Degraded or low-input DNA leads to uneven capture.

Q3: What specific follow-up experiment should I prioritize to validate a VUS found in a region with 15x coverage? A: Sanger sequencing is the gold standard for orthogonal validation. Design primers flanking the VUS location. This confirms the variant's presence and corrects for potential alignment or calling artifacts from low-coverage NGS data.

Q4: How can I improve coverage for a critical gene panel in my future WES studies? A: Consider supplemental targeted hybridization. You can add custom probes for exons consistently undercovered in your assay to your existing kit. Alternatively, for a small set of genes, move to a targeted NGS panel, which typically delivers much higher, more uniform coverage.

Q5: What bioinformatic filter can I apply to flag low-reliability VUS calls from my pipeline? A: Implement a hard filter based on depth of coverage (DP) and variant allele frequency (VAF) confidence intervals. A VUS with DP<20 and a VAF near 50% (heterozygous) is less reliable than one with DP>50. Use binomial confidence intervals to estimate the uncertainty around the VAF.
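A minimal sketch of the depth-plus-confidence-interval filter described in Q5. It uses the Wilson score interval as one common choice of binomial confidence interval; the function names and the `max_ci_width` cut-off are illustrative assumptions, not values from a validated pipeline.

```python
import math
from typing import Tuple


def wilson_interval(alt_reads: int, depth: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for the variant allele fraction alt_reads / depth."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    if not 0 <= alt_reads <= depth:
        raise ValueError("alt_reads must lie between 0 and depth")
    p = alt_reads / depth
    denom = 1 + z ** 2 / depth
    centre = (p + z ** 2 / (2 * depth)) / denom
    half = z * math.sqrt(p * (1 - p) / depth + z ** 2 / (4 * depth ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def is_low_reliability(alt_reads: int, depth: int,
                       min_depth: int = 20, max_ci_width: float = 0.4) -> bool:
    """Flag a call when depth is below the floor or the VAF interval is too wide.

    min_depth follows the 20x figure used in this guide; max_ci_width is a
    hypothetical cut-off that each lab would need to calibrate.
    """
    low, high = wilson_interval(alt_reads, depth)
    return depth < min_depth or (high - low) > max_ci_width
```

For the same observed VAF, the interval narrows as depth grows, which is why a heterozygous call at DP=15 carries far more uncertainty than one at DP=60.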

Quantitative Data: Coverage & VUS Ambiguity

Table 1: Impact of Minimum Coverage Threshold on VUS Classification Reliability

| Coverage Threshold (x) | % of Target Exons Covered | Mean VUS Calls per Sample | % of VUS Calls in Low-Coverage Regions | Recommended Action |

|---|---|---|---|---|

| ≥ 10 | 99.5% | 145 | 35% | Insufficient for reliable calling. Confirm all findings. |

| ≥ 20 | 97.8% | 132 | 12% | Standard minimum for variant calling. |

| ≥ 30 | 95.1% | 129 | 5% | Good confidence for heterozygous calls. |

| ≥ 50 | 90.3% | 127 | 2% | High confidence for somatic/low-VAF detection. |

Table 2: Common WES Kit Coverage Performance in Challenging Regions

| Genomic Challenge | Typical Coverage Drop (vs. Panel Mean) | Associated Increase in VUS Ambiguity Risk |

|---|---|---|

| GC Content >65% or <35% | 40-60% | High |

| Segmental Duplications | 50-70% | Very High |

| First/Last Exons (UTR-proximal) | 30-50% | Moderate |

| High-Identity Pseudogenes (e.g., PMS2 vs. PMS2CL) | 70-90% | Very High |

Experimental Protocols

Protocol 1: Identifying and Validating VUS in Low-Coverage Regions

Objective: To confirm or refute the presence of a VUS called in a region with suboptimal NGS coverage (<20x). Materials: PCR primers, DNA polymerase, PCR purification kit, Sanger sequencing reagents. Methodology:

- Primer Design: Design primers 150-250bp upstream/downstream of the VUS. Ensure amplicon is 400-500bp. Check specificity via BLAST.

- PCR Amplification: Perform standard PCR using 20-50ng of the original genomic DNA.

- Amplicon Purification: Clean PCR product using a spin column or enzymatic cleanup.

- Sanger Sequencing: Submit purified amplicon for bidirectional Sanger sequencing.

- Analysis: Align Sanger chromatograms to the reference sequence using software like Mutation Surveyor or manually via IGV. Confirm the base at the variant position.

Protocol 2: Assessing Uniformity of Coverage in WES Data

Objective: To quantify coverage gaps and identify systematically undercovered genes/exons.

Materials: Processed BAM files, target BED file (exome capture regions), bedtools or GATK.

Methodology:

- Calculate Coverage: Run `bedtools coverage -a <targets.bed> -b <sample.bam> -hist` on your aligned BAM file.

- Summarize Data: Parse the output to compute the mean, median, and percentage of bases covered at ≥20x, ≥30x, ≥50x per target, per gene, and per sample.

- Identify Gaps: Flag any exon with a median coverage below your pre-defined quality threshold (e.g., 20x).

- Cross-reference: Create a list of all VUS calls that fall within the coordinates of flagged low-coverage exons.
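The summarize, flag, and cross-reference steps above can be sketched as follows. This assumes the targets file is a plain three-column BED, so that each per-target row of the `-hist` output ends with four extra columns (depth, bases at that depth, target size, fraction), and that summary rows start with `all`. The function names and the VUS representation as `(chrom, 1-based position)` pairs are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Target = Tuple[str, int, int]  # chrom, 0-based start, end (BED convention)


def parse_hist(lines: Iterable[str]) -> Dict[Target, Dict[int, int]]:
    """Collect a depth -> base-count histogram per target, skipping 'all' rows."""
    hist: Dict[Target, Dict[int, int]] = defaultdict(dict)
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if fields[0] == "all" or len(fields) < 7:
            continue
        target = (fields[0], int(fields[1]), int(fields[2]))
        hist[target][int(fields[-4])] = int(fields[-3])
    return dict(hist)


def summarize(depth_counts: Dict[int, int], threshold: int = 20) -> Tuple[float, float]:
    """Return (lower median depth, fraction of bases at >= threshold) for one target."""
    total = sum(depth_counts.values())
    running, median = 0, 0.0
    for depth in sorted(depth_counts):
        running += depth_counts[depth]
        if running * 2 >= total:
            median = float(depth)
            break
    covered = sum(n for d, n in depth_counts.items() if d >= threshold)
    return median, covered / total


def flag_low_coverage(hist: Dict[Target, Dict[int, int]], min_median: float = 20) -> List[Target]:
    """Targets whose median depth falls below the quality threshold."""
    return [t for t, counts in hist.items() if summarize(counts)[0] < min_median]


def vus_in_flagged(vus_calls: Iterable[Tuple[str, int]], flagged: List[Target]) -> List[Tuple[str, int]]:
    """Keep VUS calls (chrom, 1-based VCF position) that fall inside a flagged target."""
    return [(c, p) for c, p in vus_calls
            if any(c == chrom and start < p <= end for chrom, start, end in flagged)]
```

The `start < p <= end` test converts between the 0-based, half-open BED interval and the 1-based VCF position, a frequent source of off-by-one errors in this kind of cross-referencing.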

Visualizations

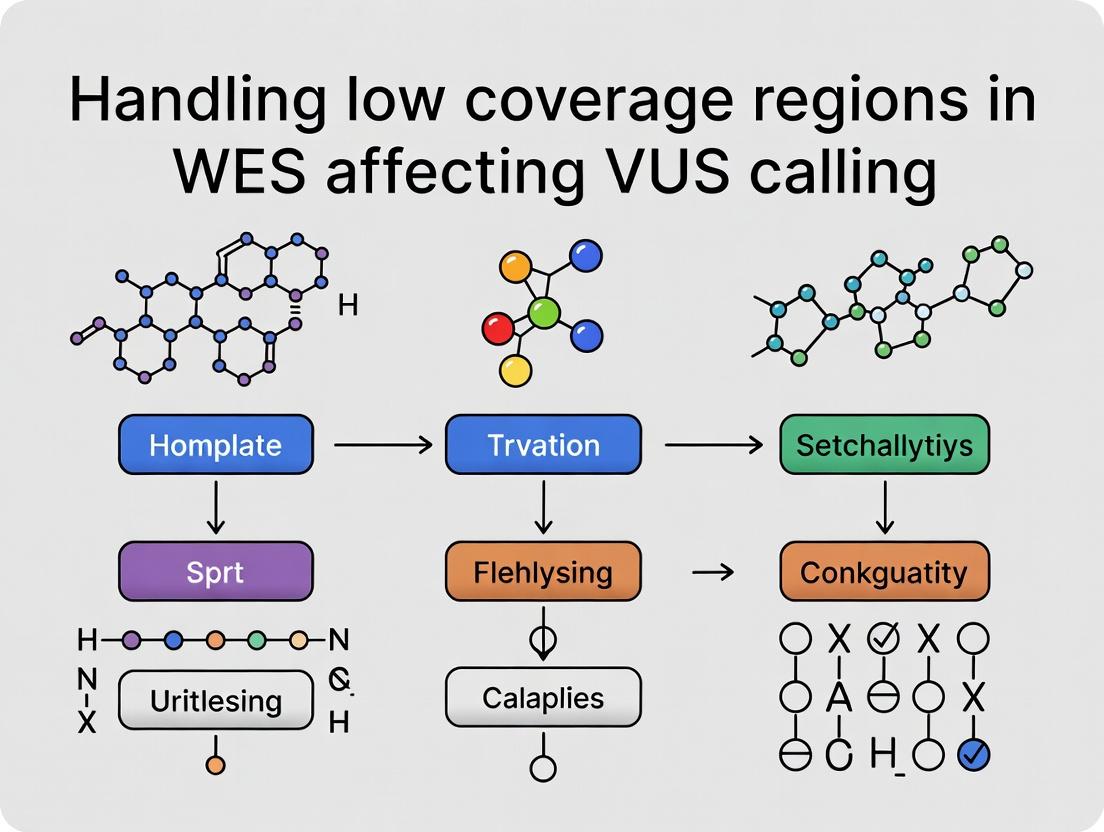

Title: Workflow: Identifying Coverage-Dependent Ambiguous VUS

Title: Root Causes of WES Coverage Gaps

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Application in Low-Coverage/VUS Studies |

|---|---|

| Custom Hybridization Capture Probes | Supplement standard exome kits with probes for known, persistently undercovered exons to boost on-target coverage. |

| Long-Range PCR Kits | Amplify large genomic segments containing multiple low-coverage exons for re-sequencing, helping resolve complex regions. |

| Sanger Sequencing Reagents | The essential orthogonal method for validating any VUS called from data with coverage below lab quality thresholds. |

| Degraded DNA Repair Enzymes | Pre-capture treatment of FFPE or low-quality DNA to improve library complexity and coverage uniformity. |

| GC Bias Mitigation Kits | Specialized library prep reagents (e.g., polymerases, buffers) designed to normalize amplification across varying GC content. |

| Molecular Barcodes (UMIs) | Unique molecular identifiers allow bioinformatic correction of PCR duplicates, improving accuracy of low-coverage variant calls. |

Technical Support Center

Troubleshooting Guide: Issues in Whole Exome Sequencing (WES) and VUS Calling

Problem: Poor or uneven coverage in WES leading to ambiguous Variant of Uncertain Significance (VUS) calls. Root Cause Investigation: This guide helps diagnose the three primary technical root causes: probe design flaws, high GC content, and pseudogene interference.

FAQs & Solutions

Q1: How can I determine if my VUS call is an artifact from a probe design flaw? A: Probe design flaws often manifest as systematic, recurrent low-coverage or zero-coverage in specific exons across multiple samples.

- Diagnostic Check: Visualize coverage per exon in your cohort. Consistent drops at the same genomic coordinates indicate a design issue.

- Solution:

- Verify Probe Location: Use UCSC Genome Browser or Ensembl to check if the problematic probe(s) span:

- Splice Regions: May not capture canonical exons perfectly.

- Genomic Variants: Common SNPs in the probe-binding site can reduce hybridization efficiency.

- Segmental Duplications: Probes may map non-uniquely.

- Alternative Capture Kit: If possible, validate the finding using a different manufacturer's capture kit with a divergent probe set.

- Orthogonal Validation: Employ Sanger sequencing or amplicon-based NGS for the specific low-coverage region.


Q2: My data shows extreme coverage dropouts in regions with >70% GC content. How can I mitigate this? A: High-GC regions cause inefficient hybridization and PCR amplification, leading to coverage voids.

- Diagnostic Check: Plot coverage against genomic GC percentage. Sharp declines are typical at very high GC.

- Mitigation Protocol:

- Wet-Lab Optimization:

- Use a polymerase master mix specifically formulated for high-GC content (e.g., containing additives like betaine or DMSO).

- Optimize the thermocycling protocol: implement a slow, gradual denaturation temperature ramp (e.g., 0.1°C/sec from 80°C to 95°C).

- Consider using a capture kit with longer incubation times for hybridization.

- Bioinformatic Compensation: Use bioinformatic tools (e.g., GATK GC Bias Correction) to normalize coverage based on GC content. Note: This corrects for bias but cannot create data from true dropouts.


Q3: I suspect a called variant is located within a pseudogene. How do I confirm this and ensure the call is real? A: Pseudogenes (processed or unprocessed) share high homology with functional genes, causing off-target capture and misalignment.

- Diagnostic Check: For a variant, check its mapping quality (MAPQ) and the presence of soft-clipped reads or numerous mismatches in the BAM file.

- Confirmation Protocol:

- LiftOver & BLAT: Lift the variant coordinates to alternative assemblies (e.g., alt-scaffolds) and use BLAT to identify highly homologous sequences elsewhere in the genome.

- Evaluate Read Pairs: Inspect if read pairs align discordantly (e.g., huge insert sizes) suggesting mapping to a distant pseudogene locus.

- Probe BLAST: BLAST the sequence of the capturing probe for the region. Hits to multiple genomic locations confirm pseudogene interference.

- Definitive Validation: Design PCR primers that are specific to the true gene of interest (often in intronic regions not conserved in processed pseudogenes) and sequence the product.
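As a rough illustration of the MAPQ diagnostic check, the sketch below computes the fraction of mapped reads with low mapping quality from SAM-format text, for example the output of `samtools view` restricted to the region around the variant. The function name and the cut-off of 30 (taken from the "Low (<30)" figure in this guide) are assumptions; secondary and supplementary alignments are counted like any other record.

```python
from typing import Iterable, Optional


def low_mapq_fraction(sam_lines: Iterable[str], mapq_cutoff: int = 30) -> Optional[float]:
    """Fraction of mapped reads whose MAPQ (SAM column 5) is below mapq_cutoff.

    Returns None when the input contains no mapped reads.
    """
    total = low = 0
    for line in sam_lines:
        if line.startswith("@"):        # header line
            continue
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 11:            # not a complete alignment record
            continue
        if int(fields[1]) & 0x4:        # FLAG bit 0x4: read is unmapped
            continue
        total += 1
        if int(fields[4]) < mapq_cutoff:
            low += 1
    return low / total if total else None
```

A high fraction around a candidate variant suggests that many supporting reads could equally well belong to a homologous locus, which is the pattern expected from pseudogene interference.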

Table 1: Impact of Common Root Causes on WES Metrics

| Root Cause | Typical Fold Reduction in Coverage | Effect on MAPQ | VUS Artifact Risk |

|---|---|---|---|

| Poor Probe Design | 5-50x (can be to 0x) | Usually High (>50) | High (False Negatives) |

| High-GC Region (>70%) | 10-100x | High (>50) | High (False Negatives) |

| Pseudogene Interference | Variable (often normal) | Low (<30) | Very High (False Positives) |

Table 2: Recommended Solutions and Their Efficacy

| Solution | Applicable Root Cause | Estimated Improvement | Cost & Effort |

|---|---|---|---|

| Alternative Capture Kit | Probe Design Flaws | 80-95% resolution | High (Cost, New Lib Prep) |

| High-GC PCR Additives | High-GC Content | 3-10x coverage boost | Low (Reagent Cost) |

| Optimized Hybridization | High-GC, Probe Flaws | 2-5x coverage boost | Medium (Protocol Change) |

| Sanger Validation | All, especially Pseudogenes | Definitive answer | Medium per amplicon |

Experimental Protocols

Protocol 1: Diagnosing Probe Design Flaws via Inter-Kit Comparison

- Select 3-5 samples with unexplained low-coverage regions.

- Prepare new libraries from the same original DNA using a different commercially available WES capture kit (e.g., switch from Kit A to Kit B).

- Sequence on the same platform to similar mean depth.

- Use `mosdepth` or `GATK DepthOfCoverage` to generate per-base coverage.

- Compare per-exon coverage between the two kits. Regions recovering >50x coverage with the alternate kit are likely affected by a probe flaw in the first.
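The inter-kit comparison can be reduced to a small lookup once per-exon mean coverage has been computed for each kit. In this sketch the exon identifiers, the function name, and the 20x "low" threshold are assumptions; only the >50x recovery criterion comes from the protocol.

```python
from typing import Dict, List


def probe_flaw_candidates(kit_a: Dict[str, float], kit_b: Dict[str, float],
                          low_threshold: float = 20.0,
                          recovery_threshold: float = 50.0) -> List[str]:
    """Exons that are poorly covered with kit A but recover above the threshold with kit B.

    Both inputs map an exon identifier to its mean coverage for the same sample.
    Only exons present in both kits' target sets are compared.
    """
    shared = kit_a.keys() & kit_b.keys()
    return sorted(exon for exon in shared
                  if kit_a[exon] < low_threshold and kit_b[exon] > recovery_threshold)
```

Exons that stay low with both kits are not returned; they point towards sequence-intrinsic causes such as extreme GC content rather than a flaw in one probe set.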

Protocol 2: Orthogonal Validation of Pseudogene-Associated Variants

- Primer Design: Using a tool like Primer3, design primers that:

- Bind to the true gene's unique intronic sequence (uniqueness confirmed by the absence of additional hits in a BLAT search against hg38).

- Flank the putative variant by 100-200bp.

- PCR Optimization: Perform gradient PCR to establish specific annealing temperatures. Run products on a 2% agarose gel to confirm a single, specific band.

- Amplicon Sequencing: Purify the PCR product and prepare for Sanger or short-amplicon NGS sequencing.

- Analysis: Compare the sequence chromatogram/reads from the validation assay to the original WES call.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Relevance |

|---|---|

| High-GC Enhancer Additives (e.g., Betaine, DMSO) | Disrupts secondary DNA structures, improves polymerase processivity in high-GC regions during library amplification. |

| PCR Polymerase for High-GC | Specialized enzymes (e.g., KAPA HiFi HotStart) maintain stability and fidelity in challenging templates. |

| Alternative Exome Capture Kit | Provides a different set of biotinylated probes, allowing differential hybridization to bypass design flaws. |

| Reference Genomic DNA (for QC) | Reference control samples (e.g., NA12878) with well-characterized coverage profiles help benchmark kit performance. |

| Blocking Oligos (e.g., Cot-1 DNA) | Pre-hybridization with repetitive sequence blockers can improve on-target specificity, marginally helping with pseudogene issues. |

Visualizations

Title: Root Causes of WES Coverage Gaps & VUS Artifacts

Title: Troubleshooting Workflow for VUS in Low-Coverage Regions

The Clinical and Research Impact of Unreported or Misclassified Variants in Critical Genes

Troubleshooting Guide & FAQs for Variant Analysis in Low-Coverage WES

Thesis Context: This support center addresses common technical challenges within the framework of research on "Handling low coverage regions in Whole Exome Sequencing (WES) affecting Variant of Uncertain Significance (VUS) calling."

FAQ Section

Q1: During VUS analysis in our WES data, we suspect variants are being missed in known disease-associated genes. What are the primary technical causes? A: The main causes are low sequencing depth (<20x) in critical exons, poor mapping quality in GC-rich or repetitive regions, and stringent variant calling filters that discard true positives. These lead to unreported variants. Misclassification, by contrast, often stems from relying on outdated or incomplete releases of population frequency databases (e.g., an old gnomAD version) and on functional prediction algorithms that lack gene-specific calibration.

Q2: How can we experimentally validate a suspected unreported variant in a low-coverage region? A: You must perform orthogonal validation. The standard protocol is:

- Primer Design: Design PCR primers flanking the target region using tools like Primer-BLAST, ensuring they are outside any problematic repeats.

- PCR Amplification: Amplify the target from original genomic DNA. Use a high-fidelity polymerase.

- Sanger Sequencing: Purify the PCR product and perform bidirectional Sanger sequencing.

- Analysis: Align sequences to the reference genome using software like SeqScape or Mutation Surveyor to confirm the variant's presence and zygosity.

Q3: Our pipeline classified a variant as a VUS, but clinical databases list it as pathogenic. How should we troubleshoot this misclassification? A: This indicates a discordance between your pipeline's annotation sources and clinical knowledge bases. Follow this checklist:

- Annotate with ClinVar: Re-annotate your VCF file using the latest version of ClinVar via ANNOVAR or Ensembl VEP.

- Review Classification Criteria: Manually review the variant against the latest ACMG/AMP guidelines. Use the InterVar tool for semi-automated assessment.

- Check for Technical Artifacts: Examine the BAM file. Rule out strand bias, low allele balance in heterozygous calls, or alignment errors near indels that may have led to conservative calling.

Q4: What are the best practices for improving variant calling in low-coverage regions for research purposes? A: Implement a tiered approach:

- Wet-lab: Consider target enrichment with a custom panel to boost coverage for genes of interest.

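- Bioinformatics: Use multiple variant callers (e.g., GATK, FreeBayes) and take their intersection as the high-confidence set; calls made by only one caller are candidates for manual review rather than automatic rejection, since intersecting improves precision at the cost of sensitivity. Apply machine learning-based tools like DeepVariant, which are more robust to low coverage. Manually inspect BAM files in IGV for high-priority, low-confidence calls.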

Experimental Protocols

Protocol: Orthogonal Confirmation of Low-Coverage Variants via Sanger Sequencing Objective: To confirm the presence and genotype of a candidate variant identified in a WES low-coverage region. Materials: Original gDNA, PCR primers, high-fidelity PCR master mix, agarose gel electrophoresis system, PCR purification kit, Sanger sequencing service. Procedure:

- Target Identification: From the WES BAM, note the genomic coordinates (GRCh38) of the candidate variant.

- Primer Design: Design primers to yield a 300-500bp amplicon. Check for specificity.

- PCR Setup: Prepare 25 µL reactions. Use a touchdown PCR protocol if the region is GC-rich.

- Gel Electrophoresis: Run PCR product on a 1.5% agarose gel to confirm a single band of expected size.

- Purification: Purify the successful PCR product.

- Sequencing: Submit purified product for bidirectional sequencing.

- Data Analysis: Align sequences, visually confirm the variant, and document chromatogram quality.

Protocol: Manual Curation of a Misclassified Variant Using ACMG/AMP Guidelines Objective: To systematically reclassify a VUS using published standards. Materials: Variant details (gene, nucleotide change), access to databases: ClinVar, gnomAD, dbNSFP, Alamut Visual, literature sources. Procedure:

- Data Aggregation: Compile population frequency (PM2), computational prediction data (PP3/BP4), functional study evidence (PS3/BS3), segregation data (PP1), and de novo information (PS2).

- Criteria Application: Using the ACMG/AMP framework, assign strength levels (Very Strong, Strong, Moderate, Supporting) to each applicable criterion.

- Evidence Combination: Combine criteria according to ACMG rules to reach a preliminary classification (e.g., Pathogenic, Likely Pathogenic, Benign).

- Documentation: Record all evidence sources and the logical path for final classification. Discrepancies with public databases must be justified.
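The evidence combination step can be sketched as a small rule table. The code below is an illustrative transcription of the combining rules from the 2015 ACMG/AMP guideline, reading each count as "at least"; it takes the strength level of each applied criterion as a prefix (`PVS`, `PS`, `PM`, `PP`, `BA`, `BS`, `BP`) and ignores gene-specific or later point-based refinements. The function name and input format are assumptions.

```python
from collections import Counter
from typing import Iterable


def combine_acmg(strengths: Iterable[str]) -> str:
    """Combine applied criteria, given by strength prefix, into a five-tier class."""
    n = Counter(strengths)
    pvs, ps, pm, pp = n["PVS"], n["PS"], n["PM"], n["PP"]
    ba, bs, bp = n["BA"], n["BS"], n["BP"]

    pathogenic = (
        (pvs >= 1 and (ps >= 1 or pm >= 2 or (pm >= 1 and pp >= 1) or pp >= 2))
        or ps >= 2
        or (ps >= 1 and (pm >= 3 or (pm >= 2 and pp >= 2) or (pm >= 1 and pp >= 4)))
    )
    likely_pathogenic = (
        (pvs >= 1 and pm >= 1)
        or (ps >= 1 and pm >= 1)
        or (ps >= 1 and pp >= 2)
        or pm >= 3
        or (pm >= 2 and pp >= 2)
        or (pm >= 1 and pp >= 4)
    )
    benign = ba >= 1 or bs >= 2
    likely_benign = (bs >= 1 and bp >= 1) or bp >= 2

    # Contradictory pathogenic and benign evidence defaults to uncertain significance.
    if (pathogenic or likely_pathogenic) and (benign or likely_benign):
        return "Uncertain significance"
    if pathogenic:
        return "Pathogenic"
    if likely_pathogenic:
        return "Likely pathogenic"
    if benign:
        return "Benign"
    if likely_benign:
        return "Likely benign"
    return "Uncertain significance"
```

For example, a variant with PVS1 and PM2 applied at their default strengths would be passed as `["PVS", "PM"]`. A sketch like this supports the documentation step by making the logical path explicit, but it does not replace expert review.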

Data Presentation

Table 1: Impact of Minimum Coverage Thresholds on Variant Detection in Critical Genes

| Coverage Range | % of Target Regions in Range | Estimated % of True Variants Missed | Common in Genes |

|---|---|---|---|

| ≥ 30x | ~95% | < 2% | Most genes |

| 20x - 30x | ~4% | 5-15% | TTN, NEB |

| 10x - 20x | ~0.8% | 20-40% | PKHD1, CLCN1 |

| < 10x (Low Cov.) | ~0.2% | > 60% | GC-rich exons of CFTR, BRCA1 |

Table 2: Common Sources of Variant Misclassification and Recommended Actions

| Source of Error | Typical Consequence | Recommended Corrective Action |

|---|---|---|

| Outdated Population DB | Over-classify as novel/VUS | Use latest gnomAD, 1000 Genomes |

| Inaccurate Prediction Algorithms | Misweight PP3/BP4 evidence | Use ensemble tools (REVEL, MetaLR) |

| Ignoring Functional Studies | Miss PS3/BS3 evidence | Systematic PubMed/ClinVar search |

| Pipeline Annotation Errors | Incorrect transcript assignment | Manually review in IGV/Ensembl |

Visualizations

Title: Workflow for Addressing Unreported & Misclassified Variants

Title: Impact Pathway of Low-Coverage Variant Issues

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Protocol |

|---|---|

| High-Fidelity DNA Polymerase (e.g., Q5) | Ensures accurate PCR amplification of target region from gDNA for validation. |

| PCR Primer Pairs | Specifically amplifies the low-coverage genomic region of interest for Sanger sequencing. |

| Agarose Gel Electrophoresis System | Verifies specificity and size of PCR amplicon before sequencing. |

| Sanger Sequencing Service | Provides gold-standard orthogonal confirmation of variant presence and zygosity. |

| Genomic DNA Purification Kit | Yields high-quality, intact gDNA from patient samples for both WES and validation. |

| Targeted Enrichment Panel (Custom) | Boosts coverage for genes of interest in subsequent experiments to mitigate low-coverage issues. |

| IGV (Integrative Genomics Viewer) | Open-source tool for visual manual inspection of BAM files to assess read alignment and variant support. |

| Alamut Visual or Similar | Commercial software for comprehensive variant annotation and ACMG guideline assessment. |

Troubleshooting Guides & FAQs

Q1: My Whole Exome Sequencing (WES) data has a mean coverage of 100x, but I am getting many low-confidence variant calls in my research. What are the key coverage metrics I should check beyond the mean?

A: Mean coverage can mask critical deficiencies. You must examine:

- Uniformity of Coverage: Calculate the percentage of target bases covered at 10x, 20x, and 30x. Poor uniformity indicates regions are being missed.

- Minimum Coverage Threshold: Establish a per-base coverage floor (e.g., 10x) below which you will not make a variant call. In research, this might be 5-10x; in clinical settings, it is often ≥20x.

- Duplicate Read Rate: High duplication (>20%) artificially inflates coverage estimates and reduces effective coverage.

- Transition/Transversion (Ti/Tv) Ratio: Deviations from the expected ratio (~2.8-3.0 for WES) can indicate systematic calling errors in low-coverage regions.

Protocol: To calculate coverage uniformity, use samtools depth on your final BAM file, then compute the proportion of target bases above your thresholds.
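A minimal sketch of the calculation, assuming the input is the three-column text output of `samtools depth` (chromosome, position, depth) for a single BAM, restricted to the target regions and including zero-depth positions (for example via its `-b` and `-a` options). The function name and the optional `target_size` argument are illustrative.

```python
from typing import Dict, Iterable, Optional, Sequence


def coverage_breadth(depth_lines: Iterable[str],
                     thresholds: Sequence[int] = (10, 20, 30),
                     target_size: Optional[int] = None) -> Dict[int, float]:
    """Fraction of target bases covered at or above each threshold.

    If zero-depth positions were omitted from the input, pass the total number
    of target bases as target_size so they still count in the denominator.
    """
    counts = {t: 0 for t in thresholds}
    n_positions = 0
    for line in depth_lines:
        parts = line.split()
        if len(parts) < 3:
            continue
        depth = int(parts[2])
        n_positions += 1
        for t in thresholds:
            if depth >= t:
                counts[t] += 1
    denom = target_size if target_size is not None else n_positions
    if denom == 0:
        raise ValueError("no target positions to summarize")
    return {t: counts[t] / denom for t in thresholds}
```

Forgetting the zero-depth positions is the usual pitfall here: it silently inflates every percentage, because the worst-covered bases drop out of the denominator.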

Q2: How do I handle a Variant of Uncertain Significance (VUS) that falls in a region with coverage just below my lab's minimum threshold in a research setting?

A: In a research context, you can employ a tiered verification protocol:

- Flag the VUS as "low-coverage" in your report.

- Perform in silico rescue: Re-align reads with a different aligner (e.g., switch from BWA-MEM to Bowtie2) and re-call variants in that specific region.

- Wet-lab confirmation: Design a PCR assay to specifically amplify the genomic region containing the VUS and perform Sanger sequencing. This is mandatory before any clinical application.

Protocol for in silico rescue:
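One possible shape for such a rescue, sketched as a Python helper that only builds the command lines and does not execute anything. The tool choices (samtools, Bowtie2, bcftools), file names, and the `rescue` prefix are assumptions for illustration; flags should be checked against the installed tool versions, and the Bowtie2 index is assumed to cover the whole reference genome so that reads from homologous loci can still map elsewhere.

```python
import shlex
from typing import List


def rescue_commands(bam: str, region: str, bt2_index: str, reference: str,
                    prefix: str = "rescue") -> List[List[str]]:
    """Build (but do not run) commands to re-align and re-call one region."""
    r1, r2 = f"{prefix}_R1.fq", f"{prefix}_R2.fq"
    return [
        # 1. Extract reads overlapping the region (requires an indexed BAM).
        ["samtools", "view", "-b", "-o", f"{prefix}.region.bam", bam, region],
        # 2. Name-sort so that mates are adjacent before FASTQ conversion.
        ["samtools", "sort", "-n", "-o", f"{prefix}.qname.bam", f"{prefix}.region.bam"],
        # 3. Convert to paired FASTQ; reads without a mate in the extract are discarded.
        ["samtools", "fastq", "-1", r1, "-2", r2, "-0", "/dev/null",
         "-s", "/dev/null", "-n", f"{prefix}.qname.bam"],
        # 4. Re-align with a different aligner.
        ["bowtie2", "-x", bt2_index, "-1", r1, "-2", r2, "-S", f"{prefix}.bt2.sam"],
        # 5. Coordinate-sort and index the new alignments.
        ["samtools", "sort", "-o", f"{prefix}.bt2.bam", f"{prefix}.bt2.sam"],
        ["samtools", "index", f"{prefix}.bt2.bam"],
        # 6. Re-call variants in the region only.
        ["bcftools", "mpileup", "-f", reference, "-r", region, "-Ou",
         "-o", f"{prefix}.bcf", f"{prefix}.bt2.bam"],
        ["bcftools", "call", "-mv", "-Oz", "-o", f"{prefix}.vcf.gz", f"{prefix}.bcf"],
    ]


if __name__ == "__main__":
    for cmd in rescue_commands("sample.bam", "chr17:43044295-43125483",
                               "GRCh38_bt2", "GRCh38.fa"):
        print(shlex.join(cmd))
```

A variant that reappears after re-alignment with an independent aligner gains some support, but this remains an in silico check and does not replace the wet-lab confirmation step.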

Q3: For clinical variant reporting, what are the consensus minimum coverage thresholds, and how are they validated?

A: Clinical thresholds are stringent and validated via controlled experiments. The key is establishing a balance between sensitivity (detecting true variants) and specificity (avoiding false positives).

Table 1: Minimum Coverage Thresholds: Research vs. Clinical Settings

| Metric | Research Setting (Discovery) | Clinical Setting (Diagnostic) | Rationale |

|---|---|---|---|

| Mean Target Coverage | ≥ 80x - 100x | ≥ 100x - 150x | Ensures sufficient depth for heterozygous variant detection. |

| Minimum Per-Base Coverage | 5x - 10x | 20x (commonly required) | Reduces false positives; provides confidence in homozygous/heterozygous state. |

| % Target Bases ≥ 20x | > 90% | > 97% - 99% | Critical for clinical completeness; minimizes "no-call" regions. |

| Validation Method | Orthogonal method (e.g., Sanger) on a subset. | Orthogonal validation (e.g., Sanger) for all reportable variants. | Regulatory requirement (CLIA/CAP) to confirm calling accuracy. |

Experimental Protocol for Threshold Validation:

- Sample Preparation: Use a well-characterized reference sample (e.g., NA12878 from GIAB).

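- Downsampling Experiment: Generate a series of BAM files with mean coverages from 10x to 200x using `samtools view -s`. The starting BAM must be sequenced deeper than the highest target coverage, since subsampling can only remove reads.

- Variant Calling: Call variants on each downsampled dataset using your standard pipeline.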

- Benchmarking: Compare calls at each coverage level against the "truth set" for the reference sample. Calculate Sensitivity (True Positive Rate) and Precision (Positive Predictive Value) at each threshold.

- Define Threshold: Select the minimum coverage where sensitivity and precision for heterozygous variants both exceed 99% (clinical) or 95% (research).
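The bookkeeping for this experiment can be sketched as below. The first helper formats the argument for `samtools view -s`, where the integer part is the random seed and the fractional part is the fraction of read pairs to keep. The other two compute sensitivity and precision and pick the lowest passing coverage. Variants are compared by exact match of a hashable key such as `(chrom, pos, ref, alt)`, which is a simplification compared with dedicated benchmarking tools; all function names are assumptions.

```python
from typing import Dict, Hashable, Optional, Set, Tuple


def subsample_arg(current_mean: float, target_mean: float, seed: int = 42) -> str:
    """Format SEED.FRACTION for `samtools view -s` to reach target_mean coverage."""
    frac = round(target_mean / current_mean, 4)
    if not 0 < frac < 1:
        raise ValueError("target coverage must be below the current coverage")
    return f"{seed}{f'{frac:.4f}'[1:]}"   # e.g. seed 42, fraction 0.25 -> "42.2500"


def sensitivity_precision(truth: Set[Hashable], calls: Set[Hashable]) -> Tuple[float, float]:
    """Sensitivity = TP / (TP + FN); precision = TP / (TP + FP)."""
    tp = len(truth & calls)
    fn = len(truth - calls)
    fp = len(calls - truth)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    prec = tp / (tp + fp) if tp + fp else float("nan")
    return sens, prec


def minimum_passing_coverage(results: Dict[int, Tuple[float, float]],
                             min_value: float = 0.99) -> Optional[int]:
    """Lowest coverage level at which sensitivity and precision both exceed min_value."""
    for coverage in sorted(results):
        sens, prec = results[coverage]
        if sens > min_value and prec > min_value:
            return coverage
    return None
```

Passing `min_value=0.95` gives the research-grade threshold described above. If performance is not monotonic across coverage levels, the full curve is worth inspecting rather than relying on the first passing point.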

Q4: What are the primary experimental and bioinformatic strategies to mitigate low-coverage regions in WES that impact VUS research?

A: A multi-faceted approach is required.

Table 2: Strategies for Handling Low-Coverage Regions

| Domain | Strategy | Function |

|---|---|---|

| Wet-Lab | Hybridization Capture Kit Optimization: Compare performance of different exome kits (e.g., IDT xGen, Agilent SureSelect) for your regions of interest. | Kits have different probe designs leading to coverage variability in GC-rich or repetitive regions. |

| Wet-Lab | PCR Duplicate Reduction: Use unique molecular identifiers (UMIs) during library prep. | Distinguishes true biological duplicates from PCR duplicates, improving effective coverage. |

| Bioinformatics | Joint Calling with gVCF: Process multiple samples together using a pipeline that outputs genomic VCFs (gVCFs). | Improves call confidence in low-coverage samples by leveraging population data. |

| Bioinformatics | Local De Novo Assembly: Use tools like SPAdes on unmapped or poorly mapped reads from a region. | Can reassemble difficult sequences missed by standard alignment. |

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Addressing Low Coverage |

|---|---|

| Unique Molecular Identifiers (UMIs) | Short random nucleotide tags ligated to each original DNA fragment before amplification. Allows bioinformatic removal of PCR duplicates, increasing effective coverage accuracy. |

| Complementary Hybridization Capture Kits | Using two different exome capture kits (e.g., one for core exons, one for splice regions) can "fill in" low-coverage areas specific to a single kit's design. |

| High-Fidelity DNA Polymerase (e.g., Q5, KAPA HiFi) | Reduces PCR errors during library amplification, which is critical when relying on few reads (low coverage) to call a variant. |

| Matched gDNA & RNA Samples | RNA-seq data from the same sample can provide evidence for expression of a VUS found in a low-coverage WES region, supporting its biological relevance. |

| Sanger Sequencing Primers | Custom primers designed to amplify and sequence a specific low-coverage region for orthogonal validation of any potential variant. |

Visualizations

Diagram 1: Workflow for Defining Coverage Thresholds

Diagram 2: Decision Path for a VUS in Low-Coverage Region

Bridging the Gaps: Proactive Wet-Lab and Bioinformatic Strategies to Enhance Coverage

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During my WES for VUS research, I am getting inconsistent coverage in low-coverage regions (e.g., GC-rich exons) despite using a reputable kit. What are the primary kit-related factors to investigate?

A: Inconsistent coverage, especially in challenging regions, often stems from suboptimal probe design and library input. For VUS research, prioritize kits with:

- Probe Design Strategy: Kits employing tiled or overlapping probes for difficult regions perform better. Compare the number of probes per low-coverage target and the probe padding (base pairs beyond exon boundaries).

- Input DNA QC: Strictly adhere to recommended input mass and quality. Degraded or sheared DNA (>35% fragments <150bp) drastically reduces capture efficiency in already challenging areas.

- Recommended Action: Re-quantify DNA using fluorometry (Qubit) and fragment analyzer (Bioanalyzer/TapeStation). If quality is acceptable, consider a kit specifically optimized for challenging regions, often indicated by higher probe counts in GC-rich or homologous areas.

Q2: How do I optimize hybridization time and temperature to improve capture uniformity for my specific kit when targeting low-coverage regions?

A: Hybridization conditions are critical for balancing on-target rate and uniformity. Standard protocols often favor high on-target rates at the expense of uniformity.

- Problem: Shorter hybridization (16-24 hrs) can leave difficult regions under-captured.

- Optimization Protocol:

- Perform a pilot experiment with your selected kit and standard patient/library sample.

- Set up three identical capture reactions.

- Vary hybridization time: 24h (standard), 48h, and 72h.

- Keep temperature constant at the kit's recommended setting (typically 65°C).

- Post-capture, sequence all libraries to low depth (~5M reads) on a rapid platform.

- Analyze coverage uniformity (e.g., fold-80 penalty, % of target bases at >20x) focusing on your known low-coverage regions.

- Expected Outcome: Extended hybridization often improves uniformity in difficult regions but may slightly decrease overall on-target rate. The optimal point maximizes uniformity for your regions of interest.
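To compare the three hybridization times on the same footing, the fold-80 penalty can be computed from per-base depths. The sketch below uses the common definition of mean coverage divided by the depth that 80% of target bases meet or exceed (the 20th percentile); the function name is illustrative, and vendor or Picard implementations may differ in details such as the handling of zero-coverage targets.

```python
from typing import Sequence


def fold_80_penalty(depths: Sequence[int]) -> float:
    """Mean depth divided by the depth reached by roughly 80% of target bases.

    A perfectly uniform library scores 1.0; larger values mean more extra
    sequencing is needed to lift the poorly covered bases to the mean.
    """
    ordered = sorted(depths)
    if not ordered:
        raise ValueError("no depth values supplied")
    mean = sum(ordered) / len(ordered)
    index = min(int(0.2 * len(ordered)), len(ordered) - 1)
    p20 = ordered[index]          # ~80% of bases have depth >= p20
    if p20 == 0:
        return float("inf")
    return mean / p20
```

Computing this separately for the full target set and for the known low-coverage regions shows whether a longer hybridization helps where it matters, rather than only on average.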

Q3: My capture efficiency (post-capture yield) is consistently below 10%. What steps should I take to diagnose the issue?

A: Low capture efficiency points to a failure during hybridization or capture. Follow this diagnostic workflow:

- Verify Pre-capture Library:

- Confirm library concentration and size distribution. Ensure adapters are compatible with your capture baits.

- Check for excessive adapter dimers, which compete for capture beads; they should make up <5% of total yield.

- Review Hybridization Components:

- Ensure the hybridization buffer is fresh and not subjected to freeze-thaw cycles.

- Verify the blocking agents (e.g., Cot-1 DNA, blockers for specific indexes) are added in the correct order and volume.

- Check Bead Handling:

- Streptavidin bead washing: Use fresh, room-temperature wash buffers. Do not over-dry beads.

- Mixing: If the kit protocol recommends vigorous shaking or vortexing during hybridization, make sure it is actually applied.

- Perform a Spike-in Control: Use a commercially available spike-in control (e.g., from a different species) to distinguish between hybridization failure and bead capture failure.

Table 1: Comparison of Major WES Kit Features for Low-Coverage Region Optimization

| Kit Feature / Metric | Kit A (Standard) | Kit B (Uniformity-Focused) | Kit C (Clinical-Grade) | Impact on Low-Coverage Regions |

|---|---|---|---|---|

| Avg. Probes per Target | 2 | 5 | 3 | Higher probe count improves capture in GC-rich/divergent sequences. |

| Probe Padding | 0 bp | +1 bp each side | +2 bp each side | Padding helps capture splice variants and near-exonic VUSs. |

| Hybridization Time (Std.) | 24 h | 72 h | 24 h | Longer hybridization can improve uniformity but increases workflow time. |

| Reported Fold-80 Penalty | <2.5 | <1.8 | <2.2 | Lower penalty indicates more uniform coverage, beneficial for VUS calling. |

| Input DNA Rec. (ng) | 50-200 | 100-500 | 50-250 | Higher input can improve coverage but requires quality DNA. |

| GC-Rich Performance | Moderate | High | Moderate-High | Directly affects VUS calling in difficult genomic segments. |

Table 2: Troubleshooting Capture Efficiency Metrics

| Symptom | Potential Cause | Diagnostic Step | Corrective Action |

|---|---|---|---|

| Capture Yield <10% | Degraded library, old buffers | Bioanalyzer trace; make fresh hybridization and binding buffers (HB/BB) | Reprep library; use fresh buffers. |

| High Duplication Rate | Insufficient input DNA | Calculate pre-capture library complexity | Increase input DNA within kit specs. |

| Low Coverage in GC >60% | Probe design limits; rapid re-annealing | Analyze coverage vs. GC correlation | Increase hybridization time; use a kit with enhanced GC probes. |

| High Off-Target Rate | Incomplete blocking | Check blocking agent volume/quality | Fresh blocking agents; optimize amount. |

Experimental Protocols

Protocol: Optimized Hybridization for Uniform Coverage

Objective: To enhance coverage uniformity in low-coverage regions for improved VUS assessment by extending hybridization time.

Materials: Prepared Illumina-compatible library, selected hybridization kit (e.g., Kit B from Table 1), thermal cycler with heated lid, magnetic rack, fresh buffers.

Method:

- Library Preparation: Shear 200 ng gDNA (Covaris). End-repair, A-tail, and ligate with dual-indexed adapters. Clean up with SPRI beads.

- Pre-capture PCR: Amplify library for 6-8 cycles. Clean up. Quantify by Qubit and Bioanalyzer. Pool multiple libraries if needed.

- Hybridization Setup:

- Combine 100 ng pooled library, 5 µl of provided blocking mix, and x µl of biotinylated probe baits per kit instructions.

- Dry down in a vacuum concentrator.

- Resuspend in hybridization buffer thoroughly.

- Hybridization: Denature at 95°C for 10 min in a thermal cycler. Immediately incubate at 65°C for 72 hours with the heated lid on.

- Capture: Add streptavidin beads, incubate at 65°C for 45 min with intermittent mixing.

- Washing: Perform two stringent washes at 65°C for 10 min each, followed by two room-temperature washes.

- Post-capture PCR: Elute DNA from beads. Amplify for 10-12 cycles. Clean up.

- QC: Assess yield (Qubit), size distribution (Bioanalyzer), and capture efficiency (if possible, by qPCR comparing an on-target locus against a non-target genomic region).

Protocol: Diagnostic Check for Capture Failure

Objective: Systematically identify the step causing low capture efficiency.

Method:

- Pre-capture Library QC: Run 1 µl of library on a High Sensitivity Bioanalyzer chip. The peak should be ~300-350 bp with minimal adapter dimer (~100bp).

- Spike-in Control Hybridization: Repeat the capture protocol using a spike-in control DNA (e.g., 1% PhiX or Salmonella genome) with known capture characteristics.

- Post-capture Analysis:

- If both sample and spike-in show low capture, the issue is with the capture beads or wash buffers (likely bad bead batch or incorrect buffer conditions).

- If sample capture is low but spike-in is normal, the issue is with the sample library compatibility with the probes (likely poor library quality or incorrect blocking).

- Buffer Check: Prepare fresh Wash Buffers 1 & 2 and repeat a standard capture.

Visualizations

Diagram 1: WES Workflow & Optimization Loop

Diagram 2: Capture Efficiency Troubleshooting

The Scientist's Toolkit

Table 3: Key Research Reagent Solutions for WES Optimization

| Item | Function in Optimization | Key Consideration for Low Coverage/VUS |

|---|---|---|

| High-Sensitivity DNA Assay (e.g., Qubit dsDNA HS) | Accurate quantification of low-input DNA and final libraries. | Critical for adhering to optimal input mass, directly impacting complexity and uniformity. |

| Fragment Analyzer / Bioanalyzer | Assesses DNA fragmentation and library size distribution. | Identifies adapter dimer contamination and ensures ideal insert size for efficient capture. |

| SPRI Selection Beads | Size-selective cleanup of libraries post-reaction. | Precise bead-to-sample ratios are vital to maintain diverse library populations. |

| Hybridization & Capture Kit | Contains baits, buffer, blockers, and beads for target enrichment. | Select based on probe design (tiling, padding) and hybridization conditions suited for difficult regions. |

| PCR Enzyme (High-Fidelity) | Amplifies pre- and post-capture libraries. | Minimizes PCR artifacts and duplicates, preserving true variant representation. |

| Indexed Adapters | Allows multiplexing of samples. | Ensure compatibility with chosen capture kit's blocking oligos to prevent index cross-capture. |

| Cot-1 / Blocking DNA | Blocks repetitive genomic sequences during hybridization. | Fresh, high-quality blocks reduce off-target capture, freeing resources for on-target. |

| Spike-in Control DNA (e.g., from another species) | Process control for capture efficiency. | Helps isolate capture failures to kit vs. sample-specific issues. |

Leveraging Supplemental Panels for Disease-Specific or Hard-to-Capture Genomic Regions

Technical Support Center: Troubleshooting & FAQs

Q1: After implementing a supplemental panel, my coverage in the target region remains uneven. What are the primary causes and solutions? A: Uneven coverage is often due to probe design issues or GC-content bias.

- Cause 1: Suboptimal probe hybridization efficiency for high-GC (>65%) or low-GC (<35%) regions.

- Solution: Use a panel that incorporates mixed-base probes (e.g., inosine) for high-GC regions and ensure hybridization buffer includes appropriate enhancers (e.g., betaine).

- Cause 2: PCR duplicates from low input DNA inflating read counts in some areas while masking true low coverage.

- Solution: Use unique molecular identifiers (UMIs) during library prep to enable accurate duplicate marking and correction. Increase input DNA mass if possible.

- Protocol for GC-Bias Assessment:

- Calculate the mean coverage for each 100-bp bin across your target region.

- Plot mean coverage against the %GC content of each bin.

- If a strong correlation (|r| > 0.4) is observed, consider re-optimizing the hybridization temperature or using a polymerase master mix designed for GC-rich templates.
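
The GC-bias assessment above can be sketched in a few lines of Python. This is a minimal illustration, assuming the per-bin GC fraction and mean coverage have already been computed; the function names `pearson_r` and `has_gc_bias` are hypothetical, and only the |r| > 0.4 cutoff comes from the protocol.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def has_gc_bias(bins, threshold=0.4):
    """bins: list of (gc_fraction, mean_coverage) tuples, one per 100-bp bin.

    Returns (r, flagged), where flagged is True when |r| exceeds the threshold.
    """
    gc = [b[0] for b in bins]
    cov = [b[1] for b in bins]
    r = pearson_r(gc, cov)
    return r, abs(r) > threshold


# Hypothetical bins in which coverage falls steadily as GC content rises.
example_bins = [(0.3, 60), (0.4, 50), (0.5, 40), (0.6, 30), (0.7, 20)]
r, flagged = has_gc_bias(example_bins)
print(f"r = {r:.2f}, GC bias flagged: {flagged}")
```

Pearson correlation only detects a linear trend. Coverage that drops at both GC extremes can give a weak |r| despite real bias, so it is worth looking at the plot as well as the coefficient.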

Q2: How do I validate that my custom supplemental panel is accurately capturing all intended variants, especially structural variants (SVs)? A: Validation requires an orthogonal method and well-characterized control samples.

- Protocol for Panel Validation:

- Sample Selection: Use a reference sample (e.g., NA12878) or a cell line with known truth sets for SNVs, indels, and SVs in your region of interest.

- Orthogonal Testing: Run the same sample using long-read sequencing (PacBio or Oxford Nanopore) or droplet digital PCR (ddPCR) for specific variants.

- Data Comparison: Compare the variant calls from your panel data (post-analysis pipeline) with the orthogonal truth set.

- Calculate Metrics: Compute sensitivity (recall) and precision for each variant type.

Table 1: Example Validation Metrics for a Custom Cardiomyopathy Panel

| Variant Type | Number of Known Variants | True Positives | False Negatives | False Positives | Sensitivity | Precision |

|---|---|---|---|---|---|---|

| SNV | 150 | 148 | 2 | 1 | 98.7% | 99.3% |

| Indel (1-20bp) | 45 | 42 | 3 | 2 | 93.3% | 95.5% |

| Exonic Deletion | 8 | 7 | 1 | 0 | 87.5% | 100% |
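
The sensitivity and precision columns follow directly from the true-positive, false-negative, and false-positive counts. The short Python sketch below recomputes them from the example rows in Table 1; the function names are illustrative, not part of any particular benchmarking tool.

```python
def sensitivity(tp, fn):
    """Recall: fraction of known variants that the panel detected."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Fraction of panel calls that are present in the truth set."""
    return tp / (tp + fp)


# (true positives, false negatives, false positives) taken from Table 1.
rows = {
    "SNV": (148, 2, 1),
    "Indel (1-20bp)": (42, 3, 2),
    "Exonic Deletion": (7, 1, 0),
}

for name, (tp, fn, fp) in rows.items():
    print(f"{name}: sensitivity={sensitivity(tp, fn):.1%}, "
          f"precision={precision(tp, fp):.1%}")
```

With only eight known exonic deletions, a single missed call moves sensitivity by 12.5 percentage points, so small truth sets should be reported with their raw counts.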

Q3: When integrating WES and panel data, my pipeline fails to merge BAM files effectively. What is the recommended workflow? A: Sequential analysis followed by variant-level integration is preferred over BAM merging.

- Recommended Workflow:

- Process Independently: Align and process WES data and supplemental panel data through variant calling (gVCF generation) using the same reference genome and base quality score recalibration (BQSR) settings, but separately.

- Joint Genotyping: Combine the gVCFs from the WES and panel runs (e.g., with GATK CombineGVCFs or GenomicsDBImport), then perform joint genotyping on the combined set using a tool like GATK GenotypeGVCFs.

- Filter & Annotate: Apply variant quality score recalibration (VQSR) or hard filters, then annotate the combined VCF. This method prevents alignment artifacts from different capture chemistries from interfering with each other.

Workflow for Integrating WES and Panel Data

Q4: What is the optimal strategy for prioritizing Variants of Uncertain Significance (VUS) found only in low-coverage WES regions that are rescued by panel sequencing? A: Prioritization should be based on coverage confidence and functional prediction.

- Confirm Rescue: Ensure the variant in the panel data has >20x coverage and an alternate allele fraction consistent with the called genotype (roughly 50% of reads for a heterozygous call, >90% for a homozygous call).

- Functional Assay Correlation: Prioritize VUS in genes where functional pathways are well-characterized and can be tested.

- Phenotypic Match: Use tools like Exomiser or Phenolyzer to score variants based on the patient's clinical Human Phenotype Ontology (HPO) terms.

- Segregation Analysis: If familial samples are available, test cosegregation with disease phenotype.

Pathway for VUS Prioritization Post-Rescue

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Supplemental Panel Workflow |

|---|---|

| Hybridization Capture Probes (xGen or SureSelect) | Biotinylated oligonucleotides designed to tile across hard-to-capture regions (e.g., high-GC promoters, paralogous sequences). |

| UMI Adapter Kits (IDT or Twist) | Adapters containing random molecular barcodes to tag original DNA molecules, enabling accurate PCR duplicate removal and improved variant allele frequency calculation. |

| GC-Bias Removal Reagents (KAPA HiFi or Q5) | Polymerase master mixes with specialized buffers to mitigate coverage dropouts in extreme GC regions. |

| Positive Control DNA (Seraseq or Horizon) | Synthetic or cell line-derived reference materials with known variant profiles in target regions, for panel performance validation. |

| Methylated Blockers (IDT or Roche) | Oligonucleotides that block repetitive elements (e.g., Alu, LINE) to improve on-target efficiency and coverage uniformity. |

| Post-Capture PCR Beads (SPRIselect) | Size-selection beads for clean-up and removal of excess primers and adapters post-enrichment, minimizing off-target sequencing. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: After imputation with BEAGLE on my low-coverage WES data, my VCF file shows an unexpected, dramatic increase in variant calls, many of which are in previously low-coverage regions. Is this normal, and how do I assess accuracy?

- A: This is a common observation. Imputation statistically infers missing genotypes based on reference haplotypes, generating many calls. To assess accuracy:

- Calculate Concordance: If you have any high-coverage truth data (e.g., from a well-sequenced sample or a validated SNP array), calculate genotype concordance rates specifically in the imputed regions.

- Use Imputation Quality Metrics: Examine the imputation quality score (typically `DR2` in BEAGLE, `Rsq` in Minimac, or the `INFO` score in IMPUTE2). Filter out variants with a score below 0.7-0.8 as a first pass.

- Experimental Validation: For critical VUS in your research, prioritize Sanger sequencing of a subset of imputed variants to establish a validation rate for your specific dataset.
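
As a rough illustration of the first-pass quality filter, the Python sketch below keeps only VCF records whose imputation score meets a cutoff. It assumes an uncompressed, tab-delimited VCF read line by line and a BEAGLE-style `DR2` tag in the INFO column; the function names and the 0.8 default are illustrative choices, and in practice `bcftools view -i` does the same job.

```python
def info_value(info_field, key):
    """Return the raw value of `key` from a VCF INFO string, or None if absent."""
    for item in info_field.split(";"):
        name, _, value = item.partition("=")
        if name == key:
            return value
    return None


def filter_by_quality(vcf_lines, key="DR2", min_score=0.8):
    """Keep header lines plus records whose imputation score is >= min_score.

    Multi-allelic records carry one comma-separated score per ALT allele;
    this sketch conservatively requires every allele to pass.
    Records lacking the tag are dropped.
    """
    kept = []
    for line in vcf_lines:
        if line.startswith("#"):
            kept.append(line)
            continue
        fields = line.rstrip("\n").split("\t")
        value = info_value(fields[7], key)
        if value is None:
            continue
        scores = [float(v) for v in value.split(",")]
        if min(scores) >= min_score:
            kept.append(line)
    return kept


# Hypothetical records for demonstration only.
example = [
    "##fileformat=VCFv4.2\n",
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\tS1\n",
    "chr1\t100\t.\tA\tG\t.\tPASS\tDR2=0.95;AF=0.10\tGT\t0|1\n",
    "chr1\t200\t.\tC\tT\t.\tPASS\tDR2=0.42;AF=0.01\tGT\t0|0\n",
]
print(len(filter_by_quality(example)), "lines kept")
```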

Q2: When running GLIMPSE for genotype imputation, the job fails with an error about "malformed VCF" or "contig not found." What are the most likely causes?

- A: This is almost always a VCF header or formatting issue. Follow this protocol:

- Check & Harmonize Reference Genome: Ensure your target WES data and the reference panel VCFs were aligned to the exact same reference genome build (e.g., GRCh37 vs. GRCh38). Inconsistencies cause failure.

- Validate and Index Files: Ensure your input VCFs are bgzipped, then re-index them (`tabix`). Use `bcftools view` to check for formatting errors.

- Header Contig Lines: The `##contig` lines in your WES VCF header must match the chromosome naming in the reference panel (e.g., "chr1" vs. "1"). Use `bcftools reheader` to correct the header; if the records themselves use the other naming scheme, rename them with `bcftools annotate --rename-chrs`.

Q3: Local reassembly with GATK's HaplotypeCaller on a low-coverage region produces no calls or an error about "no active region." What steps should I take?

- A: This indicates the coverage is too low for the assembler to confidently build haplotypes.

- Adjust Sensitivity: Lower the `-stand-call-conf` threshold (e.g., from the default of 30 to 20). The `--dont-use-soft-clipped-bases` and `--allow-non-unique-kmers-in-ref` flags change how the assembler treats soft-clipped bases and repetitive k-mers and may help in difficult regions; test their effect on your own data.

- Force Calling: Supply a known variant file with `--alleles` to force output at specific genomic positions of interest for your VUS research. Older GATK releases also require `--genotyping-mode GENOTYPE_GIVEN_ALLELES`; the default `DISCOVERY` mode does not force-call.

- Merge Evidence: Consider combining multiple low-coverage samples from the same individual (if available) or using panel data to boost local evidence before reassembly.

Q4: How do I choose between a population-based imputation tool (like Minimac) and a local reassembly tool (like GATK HaplotypeCaller) for a given low-coverage WES target region?

- A: The decision is based on available data and region context. See the decision table below.

Decision Table: Tool Selection for Low-Coverage Rescue

| Criterion | Population-Based Imputation (e.g., BEAGLE, Minimac) | Local Reassembly (e.g., GATK HaplotypeCaller, Platypus) |

|---|---|---|

| Primary Requirement | A large, population-matched reference haplotype panel (e.g., 1000G, gnomAD, TOPMed). | Sufficient local read depth (>6-8x) for assembly, even if uneven. |

| Best For | Filling in widespread, common low-coverage gaps, especially for SNP calling. | Resolving complex variants (indels, MNVs) in targeted, difficult-to-map regions. |

| Key Limitation | Poor performance for rare or population-specific variants not in the panel. | Fails in regions with extremely low or zero coverage. |

| Typical Output | Statistical genotype probabilities for all variants in the panel. | A curated set of variant calls from the locally reassembled haplotypes. |

Detailed Experimental Protocols

Protocol 1: Genotype Imputation using GLIMPSE on Low-Coverage WES Data

Objective: To impute missing genotypes in low-coverage (<20x) WES regions using a reference haplotype panel.

- Data Preparation:

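- Input: Low-coverage WES data in VCF format with genotype likelihoods (GLIMPSE imputes from likelihoods rather than hard genotype calls), plus a phased reference panel VCF (e.g., 1000 Genomes Phase 3).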

- Preprocessing: Ensure chromosomal notation matches. Split the WES VCF by chromosome (`bcftools view -r chr1 input.vcf.gz -Oz -o chr1.vcf.gz`).

- Indexing: Index all VCFs with `tabix -p vcf filename.vcf.gz`.

- Chunking the Genome:

- Use `GLIMPSE_chunk` to split the chromosome into manageable chunks (e.g., 2,000 kb) whose output regions do not overlap, with flanking buffer regions on each side.

- Running Imputation:

- Execute `GLIMPSE_phase` on each chunk to phase and impute genotypes, passing the buffered input region and the output region that `GLIMPSE_chunk` reported for that chunk.

- Ligation:

- Use `GLIMPSE_ligate` to stitch the imputed chunk VCFs into a single chromosome VCF.

- Post-processing:

- Use `bcftools` to filter variants on the imputation INFO score (e.g., `-i 'INFO/INFO>0.7'`; check your VCF header for the exact tag name) and merge chromosomes back into a genome-wide VCF.

Protocol 2: Local De Novo Assembly with GATK HaplotypeCaller

Objective: To perform de novo local reassembly in a low-coverage genomic interval to call variants missed by standard calling.

- Target Interval Definition:

- Create a BED file (`target_interval.bed`) specifying the low-coverage region or the specific gene locus containing the VUS.

- Force Local Assembly:

- Run HaplotypeCaller in GVCF mode, restricting to the interval and lowering confidence thresholds.


- Genotype GVCFs: (If multiple samples)

- Use `GenotypeGVCFs` on the resulting GVCFs to produce a final multisample VCF for the region.

- Variant Filtering & Annotation:

- Apply hard filters appropriate for low-coverage data. Variant quality score recalibration (VQSR) needs a large variant set to train on and is generally unsuitable for a single interval. Annotate using resources like ClinVar and dbNSFP.

Mandatory Visualizations

Diagram 1: Tool Selection Workflow for Low-Coverage Rescue

Diagram 2: Imputation & Reassembly in VUS Research Pipeline

The Scientist's Toolkit: Research Reagent Solutions

| Tool / Reagent | Function in Low-Coverage Rescue | Example/Note |

|---|---|---|

| Reference Haplotype Panel | Provides the population genetic data required for statistical imputation of missing genotypes. | TOPMed Freeze 8: Large, diverse panel ideal for imputation in non-European cohorts. |

| Genetic Map | Models recombination rates, crucial for accurate phasing during imputation. | HapMap Phase II b37: Standard map used with many imputation servers. |

| High-Coverage WGS/Gold-Standard Data | Serves as a truth set for validating imputation accuracy and benchmarking rescue tools. | Genome in a Bottle (GIAB) benchmarks: Provide high-confidence call sets for major reference samples. |

| Variant Annotation Databases | Functional annotation of rescued variants is critical for VUS interpretation post-rescue. | dbNSFP, ClinVar, gnomAD: Provide pathogenicity predictions, population frequency, and clinical significance. |

| In Silico PCR / Primer Design Tool | Essential for designing assays to experimentally validate rescued VUS calls via Sanger sequencing. | Primer3, UCSC In-Silico PCR: Design primers specific to the previously low-coverage region. |

Best Practices for Pipeline Configuration to Maximize Sensitivity in Suboptimal Regions

Introduction

In research on handling low-coverage regions in Whole Exome Sequencing (WES) and their impact on Variant of Uncertain Significance (VUS) calling, configuring analysis pipelines for maximum sensitivity in suboptimal regions is critical. Suboptimal regions (those characterized by low coverage, high GC content, or mapping ambiguity) hinder variant detection and directly affect the interpretability of VUS. This technical support center provides targeted guidance for researchers, scientists, and drug development professionals to troubleshoot and optimize their bioinformatics workflows.

Troubleshooting Guides & FAQs

FAQ 1: Why does my pipeline fail to call any variants in known difficult-to-map regions (e.g., homologous sequences), despite adequate overall coverage?

Answer: This is typically due to stringent mapping quality (MAPQ) and base quality (BQ) filters applied during the variant calling step. Standard filters (e.g., discarding reads with MAPQ < 30) may remove all reads aligning to paralogous regions. To recover signal:

- Action: Implement a tiered filtering approach. Create a BED file of known low-complexity and homologous regions (e.g., from the UCSC Genome Browser). Rerun variant calling, applying standard filters genome-wide but relaxed filters (e.g., MAPQ > 10, BQ > 15) specifically within the defined suboptimal regions.

- Protocol:

- Generate a BED file of suboptimal regions using resources like the ENCODE blacklist, DAC blacklist, and UCSC tables for segmental duplications.

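- Use `bcftools mpileup` with the `--regions-file` and `--min-MQ`/`--min-BQ` flags for the relaxed pass, and again with standard flags for the whole genome.

- Combine the two resulting VCFs, giving priority to variants called under standard filters. Because both files describe the same sample, `bcftools concat -a -D` (allow overlaps, drop duplicate records) is a better fit than `bcftools merge`, which is designed to combine different samples.

- Annotate the origin of each variant call to track its filtering tier.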
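
The tiered logic can be illustrated with a small Python sketch that picks thresholds by region membership. It is a simplified model, not a replacement for the bcftools commands: intervals are assumed to be 0-based, half-open, and non-overlapping, the function names are hypothetical, and the standard base-quality cutoff of 20 is an assumption (the text specifies only the relaxed values and the standard MAPQ of 30).

```python
from bisect import bisect_right

# Relaxed values follow the example in the text; the standard BQ is an assumption.
STANDARD = {"min_mapq": 30, "min_bq": 20}
RELAXED = {"min_mapq": 10, "min_bq": 15}


def build_index(intervals):
    """Index non-overlapping (chrom, start, end) intervals for fast lookup."""
    index = {}
    for chrom, start, end in sorted(intervals):
        starts, ends = index.setdefault(chrom, ([], []))
        starts.append(start)
        ends.append(end)
    return index


def in_suboptimal(index, chrom, pos0):
    """True if the 0-based position falls inside an indexed interval."""
    if chrom not in index:
        return False
    starts, ends = index[chrom]
    i = bisect_right(starts, pos0) - 1
    return i >= 0 and pos0 < ends[i]


def read_passes(index, chrom, pos0, mapq, bq):
    """Return (tier, passed) for a read base at the given position."""
    if in_suboptimal(index, chrom, pos0):
        tier, limits = "relaxed", RELAXED
    else:
        tier, limits = "standard", STANDARD
    passed = mapq >= limits["min_mapq"] and bq >= limits["min_bq"]
    return tier, passed


# Hypothetical suboptimal interval for demonstration.
idx = build_index([("chr16", 100, 200)])
print(read_passes(idx, "chr16", 150, mapq=12, bq=18))
print(read_passes(idx, "chr16", 250, mapq=12, bq=18))
```

Returning the tier alongside the pass/fail decision mirrors the final protocol step, in which each call is annotated with the filter set that produced it.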

FAQ 2: How can I improve sensitivity for low-frequency variants in regions of low coverage without drastically increasing false positives?

Answer: Balancing sensitivity and specificity requires optimizing the variant caller's model and leveraging duplicate reads cautiously.

- Action: Adjust the variant caller's prior expectations and use duplicate read information strategically. For GATK HaplotypeCaller, modify `--min-base-quality-score` and `--minimum-mapping-quality` for targeted regions. Consider using `fgbio`'s tools to work with Unique Molecular Identifiers (UMIs), which distinguish true PCR duplicates from independent molecules that happen to share mapping coordinates, so that independent observations are retained.

- Protocol for UMI-based Deduplication:

- Tag UMI Sequences: Use `fgbio ExtractUmisFromBam` to extract UMIs from the read sequences of an unmapped BAM and store them as tags (use `fgbio CopyUmiFromReadName` instead if the UMIs are in the read names).

- Group Reads: Use `fgbio GroupReadsByUmi` to group reads by their UMIs and mapping coordinates.

- Call Consensus: Use `fgbio CallMolecularConsensusReads` to create a consensus read for each UMI group, correcting errors.

- Filter Consensus: Use `fgbio FilterConsensusReads` to remove low-quality consensus reads.

- Realign & Call Variants: Realign the consensus BAM file and proceed with a variant caller (e.g., GATK), adjusting sensitivity parameters as needed.

FAQ 3: What are the best practices for configuring multiple variant callers to maximize sensitivity for VUS in suboptimal regions?

Answer: Employing an ensemble approach that leverages the strengths of different calling algorithms is a recognized best practice.

- Action: Configure and run at least two complementary variant callers (e.g., one haplotype-based, one probabilistic). Use a rigorous intersection-and-rescue strategy.

- Protocol for Ensemble Calling:

- Parallel Calling: Run GATK HaplotypeCaller (sensitive to indels in complex loci) and DeepVariant (strong performance in low-complexity regions) in parallel on the same BAM file.

- Intersection: Use `bcftools isec` to obtain high-confidence calls present in both sets.

- Rescue: For suboptimal regions defined by your BED file, rescue calls unique to either caller that pass relaxed quality thresholds (e.g., VQSLOD > 0 for GATK, QUAL > 15 for DeepVariant).

- Annotation & Curation: Annotate all rescued variants with their caller-of-origin and manually inspect in IGV for supporting read evidence.
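
The intersection-and-rescue strategy can be sketched with plain Python sets. This is a minimal illustration under stated assumptions: each caller's output has already been normalized (e.g., left-aligned and split) so that identical variants share the same `(chrom, pos, ref, alt)` key, each call carries a single score, and the `ensemble` function and its arguments are hypothetical names rather than part of any tool.

```python
def ensemble(calls_by_caller, is_suboptimal, rescue_min):
    """Combine two call sets into consensus and rescued tiers.

    calls_by_caller: {"caller_name": {(chrom, pos, ref, alt): score}}
    is_suboptimal:   function taking a variant key, returning True inside
                     the suboptimal-regions BED
    rescue_min:      {"caller_name": minimum score for rescue (exclusive)}
    Returns {variant_key: {"tier": ..., "callers": [...]}}.
    """
    names = sorted(calls_by_caller)
    if len(names) != 2:
        raise ValueError("this sketch handles exactly two callers")
    first, second = names
    shared = calls_by_caller[first].keys() & calls_by_caller[second].keys()

    # Calls made by both callers are kept everywhere as the consensus tier.
    result = {key: {"tier": "consensus", "callers": [first, second]}
              for key in shared}

    # Caller-unique calls are rescued only inside suboptimal regions.
    for name in names:
        for key, score in calls_by_caller[name].items():
            if key in shared:
                continue
            if is_suboptimal(key) and score > rescue_min[name]:
                result[key] = {"tier": "rescued", "callers": [name]}
    return result


# Hypothetical calls; scores stand in for VQSLOD (GATK) and QUAL (DeepVariant).
calls = {
    "gatk": {("chr1", 100, "A", "G"): 2.1, ("chr1", 500, "C", "T"): 0.4},
    "deepvariant": {("chr1", 100, "A", "G"): 40.0, ("chr1", 900, "G", "A"): 12.0},
}
combined = ensemble(
    calls,
    is_suboptimal=lambda key: 400 <= key[1] < 1000,
    rescue_min={"gatk": 0.0, "deepvariant": 15.0},
)
for key, info in sorted(combined.items()):
    print(key, info["tier"], info["callers"])
```

Recording the caller of origin for every rescued variant keeps the later IGV curation step traceable.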

Data Presentation

Table 1: Comparative Performance of Variant Callers in Suboptimal Regions (Simulated Data)

| Caller | Algorithm Type | Sensitivity in Low-Coverage (<30x) Regions | Sensitivity in High-GC (>65%) Regions | Recommended Use Case in Ensemble |

|---|---|---|---|---|

| GATK HaplotypeCaller | Haplotype-based | 85.2% | 78.7% | Primary caller for standard regions; indel sensitivity. |

| DeepVariant | Deep Learning | 89.5% | 88.1% | Primary caller for suboptimal regions; complex loci. |

| FreeBayes | Probabilistic (Bayesian) | 82.1% | 80.5% | Rescue caller for low-frequency variants. |

| VarDict | Amplicon-based | 87.3% | 76.9% | Rescue caller for exon edges and difficult-to-map ends. |

Table 2: Impact of Tiered Filtering on VUS Recovery in Homologous Regions

| Filtering Strategy | Variants Called (Whole Exome) | Additional VUS Recovered in Suboptimal Regions | False Positive Rate (FP/kmb) in Rescued Set |

|---|---|---|---|

| Standard (MAPQ≥30) | 22,450 | Baseline (0) | 0.5 |

| Tiered (MAPQ≥10 in subopt) | 22,617 | +167 | 3.2 |

| Tiered + Ensemble Rescue | 22,605 | +155 | 1.8 |

Experimental Protocols

Protocol: Creating a Suboptimal Regions BED File for Tiered Analysis

- Download Source Files: Obtain the ENCODE unified blacklist (DAC/Snyder), segmental duplications, and self-chain tracks from the UCSC Table Browser (assembly: hg38).

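- Merge and Sort: Concatenate the tracks, sort the result with `bedtools sort`, and then collapse overlapping intervals with `bedtools merge` (which requires sorted input) to produce a unified BED file.

- Intersect with Target: Intersect the unified file with your WES target capture BED file using `bedtools intersect` to retain only suboptimal regions within your area of interest.

- Generate Complement: Create the "optimal regions" BED file by removing the suboptimal intervals from the target BED with `bedtools subtract`. (`bedtools complement` with a genome file returns the genome-wide complement instead, which includes off-target sequence.)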
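
To make the interval arithmetic concrete, here is a small pure-Python sketch of the merge, intersect, and subtract steps. It is meant to show what the bedtools operations compute rather than to replace them; intervals are assumed to be 0-based, half-open `(chrom, start, end)` tuples, and the example coordinates are made up.

```python
def merge_intervals(intervals):
    """Collapse overlapping or book-ended intervals into sorted, merged ones."""
    merged = []
    for chrom, start, end in sorted(intervals):
        if merged and merged[-1][0] == chrom and start <= merged[-1][2]:
            merged[-1][2] = max(merged[-1][2], end)
        else:
            merged.append([chrom, start, end])
    return [tuple(m) for m in merged]


def intersect(a, b):
    """Return the portions of intervals in `a` that overlap intervals in `b`."""
    out = []
    for chrom, s, e in a:
        for chrom_b, s_b, e_b in b:
            if chrom_b == chrom and s_b < e and s < e_b:
                out.append((chrom, max(s, s_b), min(e, e_b)))
    return sorted(out)


def subtract(a, b):
    """Return the portions of intervals in `a` not covered by `b`."""
    b_merged = merge_intervals(b)
    out = []
    for chrom, s, e in a:
        cursor = s
        for chrom_b, s_b, e_b in b_merged:
            if chrom_b != chrom or e_b <= cursor or s_b >= e:
                continue
            if s_b > cursor:
                out.append((chrom, cursor, s_b))
            cursor = max(cursor, e_b)
        if cursor < e:
            out.append((chrom, cursor, e))
    return out


# Hypothetical problem-region tracks and one capture target.
tracks = [("chr1", 150, 200), ("chr1", 180, 260), ("chr1", 400, 600), ("chr2", 10, 20)]
targets = [("chr1", 100, 500)]

unified = merge_intervals(tracks)
suboptimal = intersect(targets, unified)
optimal = subtract(targets, suboptimal)
print("suboptimal:", suboptimal)
print("optimal:", optimal)
```

The nested loops are quadratic and fine for a demonstration; exome-scale BED files are better handled by bedtools itself.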

Protocol: IGV Visualization for Manual VUS Curation

- Load Data: In IGV, load the reference genome (hg38), the sorted BAM file, and the VCF containing rescued VUS calls.

- Navigate: Enter the genomic coordinate of a rescued VUS in the search bar.

- Inspect: Examine read alignment. Pay special attention to soft-clipping, strand bias, and the presence of reads in the rescued call that were filtered out in the standard view (adjust alignment coloring to show MAPQ or BQ).

- Compare: Overlay tracks from the standard-filtered VCF and the rescued VCF to confirm the variant is unique to the rescue set.

- Document: Record visual evidence and decision rationale for each curated VUS.


The Scientist's Toolkit

Table 3: Research Reagent & Tool Solutions for Suboptimal Region Analysis

| Item | Function/Benefit |

|---|---|

| UMI Kits (e.g., IDT Duplex Seq) | Attaches unique molecular identifiers to DNA fragments pre-PCR, enabling accurate error correction and deduplication to recover true low-frequency variants. |

| Extended Target Capture Probes | Probes designed with extended tiling into flanking intronic/low-complexity regions improve hybridization and coverage at exon edges. |

| GATK Best Practices Bundle | Provides curated reference files, known variant databases, and optimized workflows for germline/somatic variant discovery. |

| DeepVariant Docker Image | A deep learning-based variant caller that excels in calling variants in difficult regions without extensive manual tuning. |

| IGV (Integrative Genomics Viewer) | Critical desktop tool for the manual visualization and validation of alignment and variant calls in specific genomic loci. |

| bedtools Suite | Indispensable for manipulating genomic intervals (BED files), enabling operations like intersection, merging, and complement for tiered analysis. |

| bcftools | Provides robust command-line utilities for filtering, merging, comparing, and annotating VCF files, essential for ensemble strategies. |

From Problem to Solution: A Step-by-Step Framework for Diagnosing and Optimizing Low-Coverage WES Data

Troubleshooting Guides & FAQs

Q1: Why do I have persistent low-coverage regions in my Whole Exome Sequencing (WES) data? A: Persistent low-coverage regions in WES are typically caused by a combination of technical and biological factors. These regions hinder accurate Variant of Uncertain Significance (VUS) calling, a critical challenge in diagnostic and research settings.

| Primary Cause Category | Specific Factors | Typical Impact on Coverage (Depth) |

|---|---|---|

| Wet-Lab & Capture | GC-rich or AT-rich sequences, repetitive elements, inefficient probe design/hybridization. | <20x |

| Sequencing | Low sequencing output, poor cluster generation, instrument error. | Consistently low across all samples. |

| Sample & Biology | Degraded DNA, low input, copy number variations (deletions), high levels of polymorphism. | <30x in specific genomic contexts. |

| Alignment | Poor mapping quality for complex or homologous regions. | Unmapped or low-MAPQ reads. |

Q2: How do I distinguish a technical artifact from a true biological deletion? A: Follow this systematic differential diagnosis protocol.

- Step 1: Multi-Sample Check. Compare coverage files (e.g., from `samtools depth`) across multiple samples run in the same batch. A region low in only one sample suggests a biological cause (e.g., deletion) or sample-specific issue. A region low in all samples indicates a persistent technical/design flaw.

- Step 2: Quality Metrics. For a suspect region in a single sample, check:

- Mapping Quality (MAPQ): Use `samtools view` to inspect reads in the region. Low MAPQ scores suggest alignment ambiguity.

- Read Pair Information: Look for split reads or abnormally large insert sizes, which may indicate a structural variant.

- Step 3: Orthogonal Validation. Confirm a suspected biological deletion using an alternative method (e.g., qPCR, MLPA, or array CGH).
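
Step 1 of this protocol lends itself to a simple automated triage. The Python sketch below classifies a region by how many other samples in the batch are also low; the 20x threshold matches the text, while the 80%/20% batch fractions and the function name `classify_region` are illustrative assumptions that should be tuned to your batch size.

```python
def classify_region(depth_by_sample, sample, low=20,
                    systematic_fraction=0.8, specific_fraction=0.2):
    """Triage a low-coverage region using the rest of the batch.

    depth_by_sample: {sample_id: mean depth in the region}
    Returns one of "adequate", "systematic", "sample-specific",
    or "indeterminate".
    """
    others = [d for s, d in depth_by_sample.items() if s != sample]
    if not others:
        raise ValueError("need at least one other sample for comparison")
    if depth_by_sample[sample] >= low:
        return "adequate"

    fraction_low = sum(d < low for d in others) / len(others)
    if fraction_low >= systematic_fraction:
        return "systematic"        # likely capture/design issue
    if fraction_low <= specific_fraction:
        return "sample-specific"   # candidate deletion or sample problem
    return "indeterminate"


# Hypothetical mean depths for one exon across a batch of five samples.
batch = {"S1": 6, "S2": 45, "S3": 52, "S4": 38, "S5": 41}
print(classify_region(batch, "S1"))
```

Raw depths are compared here for simplicity. If samples differ widely in total yield, normalizing each sample's depth by its mean on-target coverage first gives a fairer comparison.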

Q3: What is the step-by-step workflow to identify and annotate these regions for my VUS research thesis? A: Implement this bioinformatics pipeline.

Experimental Protocol: Diagnostic Pipeline for Low-Coverage Region Analysis

- Coverage Calculation: Generate a per-base depth file using `samtools depth -a your_sample.bam > sample.depth`.

- Region Identification: Use `bedtools` to find regions with depth below your threshold (e.g., 20x). `samtools depth` writes three columns (chromosome, 1-based position, depth), so convert each low-depth position to a BED interval before merging. Example: `awk 'BEGIN{OFS="\t"} $3 < 20 {print $1, $2-1, $2}' sample.depth | bedtools merge -i - > low_cov_regions.bed`.

- Annotation: Annotate `low_cov_regions.bed` using `bedtools intersect` with databases such as:

- Capture Kit BED File: To see if the region is even targeted.

- Gene Annotation (e.g., RefSeq): To identify affected genes and exons.

- Public Low-Coverage Databases: Like gnomAD (v4.0) coverage plots or the NCBI Sequence Read Archive (SRA) to assess if the region is commonly problematic.

- Prioritization for VUS Research: Cross-reference your list with VUS calls. A VUS in a confirmed, persistent low-coverage region requires orthogonal validation before any conclusion can be drawn, which is a crucial caveat for your thesis.
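
The region-identification step can also be expressed in Python, which makes the coordinate conversion explicit. This sketch assumes sorted, tab-delimited input in the three-column `samtools depth -a` layout (chromosome, 1-based position, depth) and emits 0-based, half-open BED intervals; the function names are illustrative.

```python
def parse_depth(lines):
    """Yield (chrom, pos, depth) from `samtools depth` style text lines."""
    for line in lines:
        chrom, pos, depth = line.split("\t")[:3]
        yield chrom, int(pos), int(depth)


def low_coverage_intervals(depth_rows, threshold=20):
    """Merge consecutive positions with depth < threshold into BED intervals."""
    intervals = []
    current = None  # [chrom, start0, end0] of the run being extended
    for chrom, pos, depth in depth_rows:
        if depth >= threshold:
            continue
        start0 = pos - 1  # convert the 1-based position to a 0-based start
        if current and current[0] == chrom and current[2] == start0:
            current[2] = pos  # position is adjacent: extend the run
        else:
            if current:
                intervals.append(tuple(current))
            current = [chrom, start0, pos]
    if current:
        intervals.append(tuple(current))
    return intervals


# Hypothetical depth records for demonstration.
example = [
    "chr1\t1\t25\n", "chr1\t2\t10\n", "chr1\t3\t5\n",
    "chr1\t4\t30\n", "chr1\t5\t8\n", "chr1\t6\t8\n",
    "chr2\t1\t0\n",
]
for chrom, start, end in low_coverage_intervals(parse_depth(example)):
    print(f"{chrom}\t{start}\t{end}")
```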

Q4: What tools and resources are essential for this analysis? A: The following toolkit is required.

Research Reagent & Computational Solutions

| Tool/Resource | Function | Key Application in Workflow |

|---|---|---|

| Samtools | Manipulate and analyze SAM/BAM files. | Calculate depth (depth), view alignments (view), index files. |

| BEDTools | Perform genomic arithmetic on interval files. | Merge, intersect, and annotate low-coverage BED files. |

| IGV (Integrative Genomics Viewer) | Visualize alignments interactively. | Manually inspect read alignment in problematic regions. |

| Capture Kit BED File | Defines genomic regions targeted by the assay. | Determine if low coverage is on-target or off-target. |

| gnomAD Coverage Browser | Public resource of aggregated sequencing coverage. | Benchmark your data against population-level coverage. |

| UCSC Genome Browser / Ensembl | Genomic annotation databases. | Annotate regions with gene, exon, and known genomic features. |

Visualization: Diagnostic Workflow

Title: Systematic Low-Coverage Region Analysis Workflow

Title: Impact of Low Coverage on VUS Interpretation

Technical Support Center

Troubleshooting Guides & FAQs

Q1: Our automated QC system is not flagging samples with coverage below the threshold in a known critical exon (e.g., BRCA1 exon 11). What are the primary causes? A: This is typically a configuration or data pipeline issue.

- Check BED File Alignment: Verify that the coordinates of your critical exons in the QC system's target BED file exactly match the reference build (GRCh37 vs. GRCh38) used in your alignment pipeline. A mismatch of even one base will cause the system to query the wrong genomic region.

- Verify Threshold Logic: Review the alerting script's logic. Ensure it is correctly evaluating the average or minimum coverage for the entire exon region against the threshold. A common error is checking only a single nucleotide position.

- Inspect Input Data: Confirm that the coverage file (e.g., from `mosdepth` or GATK `DepthOfCoverage`) is being generated correctly and is accessible to the QC system. Corrupted or empty coverage files will result in silent failures.
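
A minimal sketch of threshold logic that avoids the pitfalls listed above is shown below. It evaluates every base of the exon rather than a single position, reports the mean, the minimum, and the fraction of bases at or above the threshold, and raises an error on empty input instead of passing silently. The function name and the requirement that 100% of bases reach 20x are assumptions to adapt to your validated criteria.

```python
def exon_qc(depths, min_depth=20, min_fraction=1.0):
    """Summarize per-base coverage for one exon and decide whether to flag it.

    depths: per-base depths covering the whole exon.
    The exon is flagged when fewer than `min_fraction` of its bases
    reach `min_depth`.
    """
    if not depths:
        # An empty coverage file must not look like a pass.
        raise ValueError("no coverage data for exon")
    covered = sum(d >= min_depth for d in depths)
    fraction = covered / len(depths)
    return {
        "mean": sum(depths) / len(depths),
        "min": min(depths),
        "fraction_at_threshold": fraction,
        "flag": fraction < min_fraction,
    }


# Hypothetical exon: 90 well-covered bases and a 10-base dropout.
report = exon_qc([40] * 90 + [5] * 10)
print(report)
```

In this example the mean depth is well above 20x even though ten bases are not, which is why a mean-only check can miss a clinically relevant dropout.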

Q2: We are receiving excessive alerts for the same exon across multiple samples, suggesting a systematic issue. How should we diagnose this? A: Follow this diagnostic protocol to isolate the problem.

Diagnostic Protocol:

- Step 1 - Primer/Probe Check: Retrieve the specific probe or primer sequences for the problematic exon from your hybridization capture kit or amplicon panel manufacturer's documentation.

- Step 2 - In-silico PCR/Alignment: Use a tool like `BLAT` or `Bowtie2` to align these sequences to the human reference genome. Check for:

- Specificity: Do the sequences map uniquely to the intended target?

- Sequence Variants: Does the primer/probe sequence contain a common SNP (dbSNP) at the 3' end? This can severely reduce capture/amplification efficiency.

- GC Content: Calculate the GC content of the exon and its flanking regions. Regions with GC content >65% or <30% are prone to low coverage.
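
Two of these checks are easy to script. The Python sketch below computes GC content against the 30%/65% limits given above and lists known SNP positions that fall near the 3' end of a primer or probe. The function names, the 5-base window, and the example sequences and coordinates are illustrative assumptions; SNP positions would come from a dbSNP lookup performed separately.

```python
def gc_percent(seq):
    """Percent G+C among the called (A/C/G/T) bases of a sequence."""
    seq = seq.upper()
    called = sum(seq.count(base) for base in "ACGT")
    if called == 0:
        raise ValueError("sequence contains no A/C/G/T bases")
    return 100.0 * (seq.count("G") + seq.count("C")) / called


def gc_risk(seq, low=30.0, high=65.0):
    """Classify a sequence as 'high-GC', 'low-GC', or 'ok'."""
    gc = gc_percent(seq)
    if gc > high:
        return "high-GC"
    if gc < low:
        return "low-GC"
    return "ok"


def snps_near_3prime(oligo_start, oligo_length, snp_positions,
                     strand="+", window=5):
    """Return SNP positions within `window` bases of the oligo's 3' end.

    Coordinates are 0-based reference positions. For a '+' strand oligo the
    3' end is its last reference base; for '-' it is its first.
    """
    end = oligo_start + oligo_length
    if strand == "+":
        lo, hi = end - window, end
    elif strand == "-":
        lo, hi = oligo_start, oligo_start + window
    else:
        raise ValueError("strand must be '+' or '-'")
    return sorted(p for p in snp_positions if lo <= p < hi)


print(gc_risk("GGCCGGCCAT"))                       # made-up GC-rich sequence
print(snps_near_3prime(1000, 20, [1002, 1018]))    # made-up coordinates
```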

Q3: After identifying a problematic critical exon, what wet-lab validation steps are required before proceeding with patient VUS interpretation? A: You must confirm the variant presence and coverage drop via an orthogonal method.

- Method: Sanger Sequencing.

- Protocol:

- Design primers flanking the low-coverage exon, ensuring they are placed in well-covered regions.

- Amplify the target from the original patient gDNA using a high-fidelity polymerase.

- Purify the PCR product and perform bidirectional Sanger sequencing.

- Analyze chromatograms using software (e.g., Sequencher) and align to the reference sequence to confirm the presence or absence of the VUS. Sanger sequencing verifies the base calls across the low-coverage exon but does not measure read depth.

Q4: How do we integrate automated exon-level QC flags into our existing VUS research pipeline without disrupting workflow? A: Implement a pre-interpretation filter in your analysis pipeline.

- Solution: Create a "QC Flag" column in your master variant call file (VCF) or research spreadsheet. Use a script (e.g., in Python or R) to annotate any variant that falls within an exon flagged for low coverage (e.g., <20x). This allows researchers to immediately triage these VUS for required follow-up validation before investing time in literature and database searches.
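
A pre-interpretation filter of this kind might look like the following Python sketch. It assumes variants are held as dictionaries with a chromosome and 1-based position, and that flagged exons are supplied as 0-based, half-open intervals with a label; the function name, the `LOW_COVERAGE`/`PASS` values, and the example coordinates are hypothetical.

```python
def annotate_qc_flag(variants, low_cov_exons):
    """Add a QC flag to each variant that falls inside a flagged exon.

    variants:      list of dicts with "chrom" and 1-based "pos"
    low_cov_exons: list of (chrom, start0, end0, label) tuples,
                   0-based and half-open as in a BED file
    Returns new dicts; the input list is left unchanged.
    """
    annotated = []
    for variant in variants:
        hits = [
            label
            for chrom, start0, end0, label in low_cov_exons
            # A 1-based position p lies in [start0, end0) when start0 < p <= end0.
            if chrom == variant["chrom"] and start0 < variant["pos"] <= end0
        ]
        annotated.append({
            **variant,
            "qc_flag": "LOW_COVERAGE" if hits else "PASS",
            "qc_exons": hits,
        })
    return annotated


# Hypothetical coordinates for demonstration only.
flagged = [("chr17", 1000, 1200, "GENE1_exon11")]
calls = [
    {"chrom": "chr17", "pos": 1100, "ref": "A", "alt": "G"},
    {"chrom": "chr17", "pos": 5000, "ref": "C", "alt": "T"},
]
for row in annotate_qc_flag(calls, flagged):
    print(row["chrom"], row["pos"], row["qc_flag"], row["qc_exons"])
```

Keeping the exon label alongside the flag lets a reviewer see at a glance which region needs orthogonal follow-up.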

Data Summary Table: Common Low-Coverage Exons in Cancer Genes (Example)

| Gene | Exon | Common Cause | Suggested Action |
|---|---|---|---|
| BRCA1 | Exon 11 | High GC content (~65%), large size | Optimize PCR with GC-enhancer; confirm via Sanger. |
| PMS2 | Exons 11-15 | Homologous sequence in pseudogene (PMS2CL) | Use PMS2-specific capture probes; redesign primers. |
| TP53 | Exon 1 | High GC promoter region | Use mechanical (sonication-based) fragmentation over enzymatic fragmentation during library prep. |
| RYR2 | Multiple | High sequence homology between exons | Employ long-read sequencing for validation. |

Experimental Protocol: Validating Coverage Failure via ddPCR

Title: Absolute Quantification of Target Copy Number to Diagnose Capture Failure.

Objective: To determine if low NGS coverage in a critical exon is due to a primer-binding site SNP or true copy number variation.

Materials:

- Patient genomic DNA.

- ddPCR Supermix for Probes (no dUTP).

- FAM-labeled probe assay for the target critical exon.

- HEX-labeled probe assay for a reference gene (e.g., RNase P).

- DG8 Cartridges and QX200 Droplet Generator.

- QX200 Droplet Reader and analyzer software.

Method:

- Prepare a 20µL reaction mix per sample: 10µL ddPCR Supermix, 1µL of each primer/probe assay (target and reference), and ~20ng of gDNA.

- Generate droplets using the QX200 Droplet Generator.

- Transfer droplets to a 96-well PCR plate and perform PCR amplification.

- Read the plate on the QX200 Droplet Reader.

- Analyze data using QuantaSoft software. The target/reference ratio will indicate if the region is truly deleted/duplicated (ratio ~0.5 or ~1.5) or if the sequence is present but failed to capture (ratio ~1.0).
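The target/reference ratio in the final step rests on a Poisson correction of the positive-droplet fraction. The sketch below reproduces that arithmetic for illustration only; it is not the QuantaSoft implementation, the ±0.15 tolerance is an assumption, and both assays are assumed to be read from the same well so they share one accepted-droplet count.

```python
import math


def copies_per_droplet(positive: int, total: int) -> float:
    """Poisson-corrected mean copies per droplet: lambda = -ln(1 - positive/total)."""
    if total <= 0 or not 0 <= positive < total:
        raise ValueError("need total > 0 and 0 <= positive < total")
    return -math.log(1.0 - positive / total)


def target_reference_ratio(target_pos: int, ref_pos: int, total: int) -> float:
    """Ratio of target to reference copies measured in the same well."""
    ref = copies_per_droplet(ref_pos, total)
    if ref == 0:
        raise ValueError("reference assay has no positive droplets")
    return copies_per_droplet(target_pos, total) / ref


def interpret(ratio: float, tol: float = 0.15) -> str:
    """Map a ratio onto the three outcomes described in the protocol."""
    if abs(ratio - 1.0) <= tol:
        return "present at normal copy number; suspect capture failure"
    if abs(ratio - 0.5) <= tol:
        return "consistent with heterozygous deletion"
    if abs(ratio - 1.5) <= tol:
        return "consistent with duplication"
    return "indeterminate; repeat assay"


if __name__ == "__main__":
    r = target_reference_ratio(target_pos=5000, ref_pos=5000, total=15000)
    print(round(r, 2), interpret(r))
```

The Poisson step matters because a droplet can contain more than one template copy, so the raw fraction of positive droplets underestimates concentration as loading increases.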

Research Reagent Solutions Toolkit

| Item | Function in Coverage QC |
|---|---|
| Hybridization Capture Kit (e.g., xGen Exome Research Panel v2) | Defines the target regions; probe design directly impacts coverage uniformity. |
| GC Enhancer Additives (e.g., Q5 GC Enhancer) | Improves polymerase processivity in high-GC regions during library amplification or validation PCR. |
| Unique Dual Index (UDI) Adapters | Allow index-hopped reads to be identified and discarded, so pooled samples can be demultiplexed without cross-sample misassignment. |
| Droplet Digital PCR (ddPCR) Probe Assays | Provides absolute, sequence-specific quantification of a genomic target to validate NGS findings. |
| Sanger Sequencing Primers | Gold-standard for orthogonal validation of variants in regions flagged by automated QC. |

Workflow Diagram

Diagram Title: Automated QC and Alert Workflow for Exon Coverage

Pathway Diagram

Diagram Title: Causes and Impacts of Exon Coverage Failure

Technical Support Center

Troubleshooting Guide

Issue 1: High rate of false-positive variant calls in low-coverage regions.

- Problem: Standard hard filters (e.g., DP<10, QD<2) are removing all variants in marginal coverage areas, but a less stringent filter yields numerous false positives.

- Diagnosis: The balance between sensitivity and specificity is lost. Uniform filtering does not account for coverage variability across the exome.

- Solution: Implement tiered, locus-specific filtering. Adjust thresholds dynamically based on the local coverage profile and sequence context.

- Actionable Protocol:

- Generate a per-base depth file using samtools depth.

- Define coverage tiers (e.g., 10-20x, 5-10x, <5x).

- Apply filter thresholds specific to each tier.

- For regions <10x, supplement with allele-specific metrics like allele balance and strand bias.
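As a rough sketch of the first two protocol steps, the snippet below bins samtools depth output (tab-separated chromosome, 1-based position, depth) into the example tiers. The tier labels, the extra ">=20x" bucket, and the half-open boundary convention are assumptions made for illustration. Note that samtools depth omits zero-depth positions unless run with `-a`, so uncovered bases only appear in the "<5x" tier when that option is used.

```python
from collections import Counter

# (inclusive lower bound, label), checked from highest to lowest
TIERS = [(20, ">=20x"), (10, "10-20x"), (5, "5-10x"), (0, "<5x")]


def tier_for(depth: int) -> str:
    """Return the tier label for a single per-base depth value."""
    for floor, label in TIERS:
        if depth >= floor:
            return label
    raise ValueError("depth must be non-negative")


def tier_counts(depth_lines) -> Counter:
    """Count bases per tier from an iterable of samtools depth lines."""
    counts = Counter()
    for line in depth_lines:
        if not line.strip():
            continue
        _chrom, _pos, depth = line.rstrip("\n").split("\t")[:3]
        counts[tier_for(int(depth))] += 1
    return counts


if __name__ == "__main__":
    example = ["chr1\t100\t3", "chr1\t101\t12", "chr1\t102\t27"]
    print(tier_counts(example))
```

The per-tier counts give a quick view of how much of the target falls into each filtering regime before tier-specific thresholds are applied.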

Issue 2: VUS classification is inconsistent for variants in poorly covered exons.

- Problem: Annotation sources (e.g., ClinVar, gnomAD) lack population frequency data for variants in regions that are rarely covered sufficiently in large cohorts, so these variants default to a VUS classification.

- Diagnosis: Standard annotation pipelines fail to flag variants with "absent from databases due to low coverage" as a distinct category.

- Solution: Augment annotation with a custom track indicating regional callability and database coverage.

- Actionable Protocol:

- Use bedtools to intersect your target BED file with public cohort callability BED files (e.g., gnomAD genome callability regions).

- Annotate each variant with a new field, DB_COVERAGE_STATUS, with values: "HighConfidenceRegion", "MarginalRegion", "NoDataRegion".

- Re-classify VUS in "NoDataRegion" as "VUS-LowCoverage" for prioritized follow-up validation.
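The re-classification rule can be expressed compactly once each variant carries a status. In this sketch the two interval dictionaries (high-confidence and marginal regions keyed by chromosome, in BED-style 0-based half-open coordinates) are hypothetical inputs; how "marginal" is defined is left to the laboratory and is not specified by the protocol.

```python
def _contains(intervals, pos: int) -> bool:
    """True if 1-based `pos` lies in any 0-based half-open (start, end) interval."""
    return any(start < pos <= end for start, end in intervals)


def db_coverage_status(chrom: str, pos: int, high_conf: dict, marginal: dict) -> str:
    """Assign the DB_COVERAGE_STATUS value for one variant position."""
    if _contains(high_conf.get(chrom, []), pos):
        return "HighConfidenceRegion"
    if _contains(marginal.get(chrom, []), pos):
        return "MarginalRegion"
    return "NoDataRegion"


def reclassify(classification: str, status: str) -> str:
    """Relabel only VUS calls that sit in regions with no database coverage."""
    if classification == "VUS" and status == "NoDataRegion":
        return "VUS-LowCoverage"
    return classification


if __name__ == "__main__":
    high = {"chr14": [(1000, 2000)]}
    marg = {"chr14": [(2000, 2500)]}
    status = db_coverage_status("chr14", 3000, high, marg)
    print(status, reclassify("VUS", status))
```

Other classifications pass through unchanged, so the rule only affects the triage order of VUS calls.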

Issue 3: Poor concordance between duplicate samples sequenced at different coverage depths.

- Problem: Variants called in well-covered regions of Sample A are missing in the low-coverage regions of Sample B, complicating cohort analysis.

- Diagnosis: The joint genotyping step in pipelines like GATK is highly sensitive to individual sample depth.

- Solution: Apply variant quality score recalibration (VQSR) using training resources that include low-coverage samples, or switch to a genotype-refinement tool.

- Actionable Protocol:

- For VQSR, curate a truth set that includes variants from platforms insensitive to coverage (e.g., microarray-derived genotypes).

- Alternatively, use GATK CalculateGenotypePosteriors with a supporting panel of known variants, which supplies population priors, to refine genotype posteriors at low-coverage sites.

- Filter final genotypes on the adjusted posterior probability.
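To see why a population prior helps at low depth, the following sketch combines Phred-scaled genotype likelihoods (the VCF PL field) with a Hardy-Weinberg prior derived from an alternate allele frequency. It is a conceptual simplification for a biallelic site, not GATK's actual model, and the function names are hypothetical.

```python
GENOTYPES = ("0/0", "0/1", "1/1")


def genotype_posteriors(pl, alt_af: float):
    """Posterior probabilities for (0/0, 0/1, 1/1).

    pl     : Phred-scaled likelihoods in VCF order for a biallelic site.
    alt_af : alternate allele frequency from a reference panel (0 < alt_af < 1).
    """
    if not 0.0 < alt_af < 1.0:
        raise ValueError("alt_af must be strictly between 0 and 1")
    likelihoods = [10 ** (-x / 10) for x in pl]
    ref_af = 1.0 - alt_af
    # Hardy-Weinberg genotype priors
    priors = [ref_af ** 2, 2 * ref_af * alt_af, alt_af ** 2]
    joint = [lik * prior for lik, prior in zip(likelihoods, priors)]
    total = sum(joint)
    return [j / total for j in joint]


def best_genotype(pl, alt_af: float):
    """Return (genotype label, posterior probability) of the most probable genotype."""
    post = genotype_posteriors(pl, alt_af)
    idx = max(range(3), key=post.__getitem__)
    return GENOTYPES[idx], post[idx]


if __name__ == "__main__":
    weak_pl = (0, 3, 30)   # few reads: 0/0 only slightly favoured over 0/1
    print(best_genotype(weak_pl, alt_af=0.001))
    print(best_genotype(weak_pl, alt_af=0.9))
```

With weak likelihoods such as these, the prior dominates: a rare allele keeps the call at 0/0, while a very common allele shifts it to 0/1. This is the behaviour that makes posterior-based filtering more informative than raw likelihoods in marginal regions.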

Frequently Asked Questions (FAQs)

Q1: What is the minimum depth threshold I should use for clinical research in marginal regions? A: There is no universal minimum. For discovery research, a depth of 5-8x may be acceptable when combined with stringent genotype quality (GQ>20) and supporting reads from both strands. For clinical applications, any region with consistent coverage <20x should be flagged for orthogonal validation. See Table 1 for tiered recommendations.

Q2: How can I improve annotation for variants in regions where gnomAD shows no data? A: First, check if the region is present in the gnomAD callability mask. If it is not callable, annotate the variant accordingly. Then, look for the variant in more specialized, often smaller, databases that use different capture kits or sequencing technologies which might cover that region. Aggregate these into a custom annotation database.

Q3: Are there specific tools for joint calling of cohorts with highly variable coverage?

A: Yes. While GATK's HaplotypeCaller in GVCF mode is standard, consider tools like DeepVariant, which uses deep learning to call each sample and may handle variable coverage more robustly; its per-sample gVCFs are typically merged into a cohort call set with GLnexus. For a cohort mix of WES and WGS, joint calling all samples together can improve low-coverage WES genotyping by leveraging information from high-coverage WGS samples at the same loci.

Q4: What is the most effective wet-lab solution to resolve VUS in low-coverage regions? A: Targeted amplicon sequencing (Sanger or high-depth NGS) is the gold standard for orthogonal validation. Design primers flanking the VUS and sequence the specific region, aiming for >100x coverage with NGS or clean bidirectional reads with Sanger, to confirm both the variant's presence and its zygosity.

Data Presentation

Table 1: Tiered Filtering Strategy for Variable Coverage Regions

| Coverage Tier (x) | Recommended Hard Filters | Additional Contextual Filters | Suggested Action |
|---|---|---|---|
| ≥ 30 | QD < 2.0, FS > 60.0, DP < 10 | SOR > 3.0, MQ < 40.0 | Standard analysis |
| 20 - 29 | QD < 1.5, FS > 45.0, DP < 8 | ReadPosRankSum < -8.0 | Proceed with caution |
| 10 - 19 | QD < 1.0, FS > 30.0, DP < 5 | AB > 0.8 or < 0.2, SB > 0.1 | Flag for validation |
| 5 - 9 | GQ < 20, DP < 3 | Must have reads on both strands | Mandatory validation |
| < 5 | Consider as "No Call" | N/A | Require orthogonal method |
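Table 1 translates directly into a lookup keyed on regional depth. The sketch below encodes only the "Recommended Hard Filters" column, treating each listed expression as a failure condition; the function name, the dictionary-based variant record, and the PASS/FAIL/NO_CALL labels are assumptions, and the contextual filters would be added in the same way.

```python
def tier_decision(region_depth: float, v: dict):
    """Return (filter_result, suggested_action) for one variant.

    region_depth : representative depth of the surrounding region.
    v            : variant annotations with keys QD, FS, DP and GQ as needed.
    """
    if region_depth >= 30:
        failed = v["QD"] < 2.0 or v["FS"] > 60.0 or v["DP"] < 10
        action = "Standard analysis"
    elif region_depth >= 20:
        failed = v["QD"] < 1.5 or v["FS"] > 45.0 or v["DP"] < 8
        action = "Proceed with caution"
    elif region_depth >= 10:
        failed = v["QD"] < 1.0 or v["FS"] > 30.0 or v["DP"] < 5
        action = "Flag for validation"
    elif region_depth >= 5:
        failed = v["GQ"] < 20 or v["DP"] < 3
        action = "Mandatory validation"
    else:
        # Below 5x the table treats the site as a no-call regardless of metrics.
        return "NO_CALL", "Require orthogonal method"
    return ("FAIL" if failed else "PASS"), action


if __name__ == "__main__":
    variant = {"QD": 5.0, "FS": 10.0, "DP": 14, "GQ": 60}
    print(tier_decision(35, variant))
    print(tier_decision(12, variant))
    print(tier_decision(3, variant))
```

The same variant can pass in one tier and require validation in another, which is the point of locus-specific filtering: the action depends on the regional depth, not only on the variant's own metrics.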

Table 2: Impact of Annotation Augmentation on VUS Classification (Hypothetical Cohort: n=1000)

| Annotation Pipeline | Total VUS | VUS in Low-Cov Regions | VUS Re-classified as "VUS-LowCoverage" | Actionable VUS Post-Review |
|---|---|---|---|---|
| Standard (VEP + ClinVar) | 1200 | 350 (29.2%) | 0 | 1200 |
| Augmented (with Coverage Context) | 1200 | 350 (29.2%) | 220 | 980 |

Experimental Protocols

Protocol 1: Generating a Callability Mask and Annotating Database Coverage Status

- Inputs: Your target capture BED file (targets.bed), gnomAD genome callability BED (gnomad.callable.bed).

- Intersection: Use bedtools intersect: bedtools intersect -a targets.bed -b gnomad.callable.bed -wa -u > high_conf_regions.bed (with -u, each target is reported once if any part of it overlaps a callable region, so partially callable targets are retained here as well).

- Subtraction: Find low-confidence targets: bedtools subtract -a targets.bed -b high_conf_regions.bed > marginal_or_nodata.bed

- Annotation: Add the region confidence as an INFO field to your VCF using bcftools annotate with a custom file mapping genomic regions to DB_COVERAGE_STATUS.
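The custom mapping file for the annotation step could be generated as sketched here. The snippet computes, for each target, the fraction overlapped by callable intervals and writes one BED-style row per target. The 95% cut-off separating "HighConfidenceRegion" from "MarginalRegion" is an assumption, as are the function names; callable intervals are assumed to be non-overlapping within a chromosome.

```python
def covered_fraction(start: int, end: int, callable_intervals) -> float:
    """Fraction of the target [start, end) overlapped by callable intervals."""
    if end <= start:
        raise ValueError("target interval must have positive length")
    covered = 0
    for c_start, c_end in callable_intervals:
        covered += max(0, min(end, c_end) - max(start, c_start))
    return covered / (end - start)


def status_for(fraction: float, high: float = 0.95) -> str:
    """Map an overlap fraction onto a DB_COVERAGE_STATUS value."""
    if fraction >= high:
        return "HighConfidenceRegion"
    if fraction > 0:
        return "MarginalRegion"
    return "NoDataRegion"


def annotation_rows(targets, callable_by_chrom):
    """Return tab-separated rows: chrom, start, end, DB_COVERAGE_STATUS."""
    rows = []
    for chrom, start, end in targets:
        frac = covered_fraction(start, end, callable_by_chrom.get(chrom, []))
        rows.append(f"{chrom}\t{start}\t{end}\t{status_for(frac)}")
    return rows


# Header line that declares the new INFO field to bcftools.
HEADER_LINE = (
    '##INFO=<ID=DB_COVERAGE_STATUS,Number=1,Type=String,'
    'Description="Callability of the region in the public reference cohort">'
)

if __name__ == "__main__":
    targets = [("chr1", 100, 200), ("chr1", 900, 1100), ("chr2", 50, 80)]
    callable_regions = {"chr1": [(0, 1000)]}
    print("\n".join(annotation_rows(targets, callable_regions)))
```

After sorting, bgzip compression, and tabix indexing, a file like this would typically be applied with a command along the lines of `bcftools annotate -a status.bed.gz -h header.txt -c CHROM,FROM,TO,DB_COVERAGE_STATUS input.vcf.gz`; the file names are placeholders, and the exact invocation should be checked against the installed bcftools version.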

Protocol 2: Orthogonal Validation by Targeted Amplicon Sequencing

- Primer Design: Using the variant coordinates, design PCR primers (~200-300bp amplicon) with tools like Primer3.

- PCR Amplification: Perform standard PCR on the original genomic DNA.

- Purification: Clean PCR products with an enzymatic clean-up kit.

- Sequencing: Prepare Sanger sequencing reactions or an NGS library for the amplicons.

- Analysis: Align sequences to the reference genome and call variants at the target position. Compare genotype to original WES call.

Workflow Diagrams

Diagram Title: Tiered Analysis Workflow for Marginal Coverage

Diagram Title: VUS Reclassification Logic with Coverage Context

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Low-Coverage Analysis |
|---|---|
| Hybridization Capture Kits (e.g., IDT xGen, Twist Bioscience) | Define the initial regions of interest. Newer kits with improved uniformity can reduce marginal coverage regions. |
| UMI Adapters (Unique Molecular Identifiers) | Allow bioinformatic correction of PCR duplicates and sequencing errors, improving variant call confidence in low-depth data. |
| Targeted Amplicon Sequencing Kits (e.g., Illumina AmpliSeq) | Enable high-depth, orthogonal validation of specific VUS calls from low-coverage WES regions. |
| Genomic DNA Reference Standards (e.g., GIAB, Seracare) | Provide known variant calls across difficult-to-sequence regions for benchmarking pipeline sensitivity/specificity. |
| High-Fidelity PCR Master Mix | Essential for generating clean amplicons for validation sequencing, minimizing polymerase-induced artifacts. |
| Custom gnomAD Callability BED Files | Provide the necessary resource to annotate whether a variant is in a region callable by major public databases. |

Technical Support Center

Troubleshooting Guides & FAQs

FAQ 1: My cardiomyopathy panel (e.g., Illumina TruSight Cardio) shows persistent low coverage (<20x) in key exons of TTN and MYBPC3. What are the primary causes and solutions?

- Answer: This is a known issue due to high GC content and repetitive sequences. Implement a multi-step pipeline:

- Wet-Lab Optimization: Increase PCR cycles from 10 to 12-14 in the library enrichment step. Use a polymerase master mix optimized for high-GC regions (e.g., KAPA HiFi HotStart ReadyMix).

- Bioinformatic Augmentation: Post-sequencing, integrate a secondary, long-read capture (e.g., Oxford Nanopore) for TTN's notorious exons. Use the long-read data to fill gaps and verify alignments in short-read data.

- Analysis Adjustment: In your variant caller (e.g., GATK HaplotypeCaller), relax or disable down-sampling so that all coverage is considered: raise --max-reads-per-alignment-start from its default of 50 to a high value (e.g., 200), or set it to 0 to turn down-sampling off entirely.

FAQ 2: How do I systematically validate a Variant of Uncertain Significance (VUS) called in a low-coverage region of MYH7?

- Answer: Follow this orthogonal validation protocol:

- PCR & Sanger Sequencing: Design primers flanking the low-coverage exon from the MYH7 gene. Re-amplify from the original patient DNA.

- Droplet Digital PCR (ddPCR): If the VUS is a known SNP, design a specific probe assay for absolute quantification of the allele fraction in the patient and control samples.

- Cross-Platform Confirmation: Process the same sample through a different NGS chemistry (e.g., IDT xGen panels on an Illumina platform vs. hybridization capture on a Complete Genomics/MGI platform).